Bill Easterly is speaking in our seminar this Thursday–the title is “Free the Poor! Ending Global Poverty from the Bottom Up,” and it will be in 711 IAB from 11-12:30 (it’s open to all)–and I thought I’d prepare by reading his recent article in the New York Review of Books, a review of “The Bottom Billion: Why the Poorest Countries Are Failing and What Can Be Done About It,” by Paul Collier. (I haven’t read the Collier book, so that puts me on an even footing with most of the others in the audience, I think.)

Easterly has some pretty strong criticism of Collier, setting him up with some quotes that set off alarms for me as a statistician. For example, Collier writes:

Aid is not very effective in inducing a turnaround in a failing state; you have to wait for a political opportunity. When it arises, pour in the technical assistance as quickly as possible to help implement reform. Then, after a few years, start pouring in the money for the government to spend.

and

Security in postconflict societies will normally require an external military presence for a long time. Both sending and recipient governments should expect this presence to last for around a decade, and must commit to it. Much less than a decade and domestic politicians are liable to play a waiting game rather than building the peace…. Much more than a decade and citizens are likely to get restive for foreign troops to leave the country.

These are the kind of precise recommendations that make me suspicious. I mean, sure, I know that real effects are nonlinear and even nonmonotonic–too much of anything is too much, and all that–but I’m just about always skeptical of the claim that this sort of “sweet spot” analysis can really be pulled out from data. (See here–search on “Shepherd”–for an example of a nonlinear finding I didn’t believe, in that case on the deterrent effect of the death penalty.)
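
Here is one way to see why a “sweet spot” is hard to pull out of data. This is a sketch under an assumption of my own: that the optimum is read off a quadratic fit of the outcome y against the duration t of the intervention, which may well not be how Collier arrived at “around a decade.”

$$
y = \beta_0 + \beta_1 t + \beta_2 t^2 + \epsilon, \qquad
\hat{t}^{*} = -\frac{\hat\beta_1}{2\,\hat\beta_2}
$$

The estimated optimum is a ratio of two noisy coefficients. With hypothetical values \(\hat\beta_1 = 2\), a curvature estimate of \(\hat\beta_2 = -0.1\) puts the peak at 10 years, while \(\hat\beta_2 = -0.05\) puts it at 20; and if the uncertainty in \(\hat\beta_2\) includes zero, the data can’t even say whether there is a peak at all.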

Easterly continues with some more general discussion of the role of statistics in making policy decisions. Here’s Easterly:

Alas, as a social scientist using methods similar to Collier’s in my research, I [Easterly] am painfully aware of the limitations of our science. When recommending an action on the basis of a statistical correlation, first of all, one must heed the well-known principle that correlation does not equal causation. . . . [Collier] fails to establish that the measures he recommends will lead to the desired outcomes. In fairness to Collier, it is very difficult to demonstrate causal effects with the kind of data we have available to us on civil wars and failing states. . . .

Of course, governments take many actions even when social scientists are unable to establish that such actions will cause certain desirable outcomes. Presumably they use some kind of political judgment that is not based on statistical analysis.

I’m not so sure about this. Bill James once said something along the lines of: The alternative to “good statistics” is not “no statistics,” it’s “bad statistics.” (His example was something like somebody who criticized On Base Percentage because it counted a walk the same as a hit, but who then ended up relying on Batting Average, which has much more serious problems.)
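
To make James’s point concrete, here is a minimal sketch of the two standard formulas; the two hitters and their numbers are invented for illustration. Batting Average ignores walks entirely, while On Base Percentage counts a walk the same as a hit, so the two statistics can rank the same pair of players in opposite orders.

```python
def batting_average(hits: int, at_bats: int) -> float:
    """BA = H / AB; walks don't enter at all."""
    return hits / at_bats


def on_base_percentage(hits: int, walks: int, hit_by_pitch: int,
                       at_bats: int, sac_flies: int) -> float:
    """OBP = (H + BB + HBP) / (AB + BB + HBP + SF); a walk counts the same as a hit."""
    times_on_base = hits + walks + hit_by_pitch
    chances = at_bats + walks + hit_by_pitch + sac_flies
    return times_on_base / chances


# Two hypothetical hitters (made-up numbers) with the same 500 at-bats.
patient = dict(hits=150, walks=100, hit_by_pitch=0, at_bats=500, sac_flies=0)
free_swinger = dict(hits=160, walks=20, hit_by_pitch=0, at_bats=500, sac_flies=0)

for name, p in [("patient", patient), ("free_swinger", free_swinger)]:
    ba = batting_average(p["hits"], p["at_bats"])
    obp = on_base_percentage(**p)
    print(f"{name}: BA={ba:.3f} OBP={obp:.3f}")
```

This prints BA=0.300, OBP=0.417 for the patient hitter and BA=0.320, OBP=0.346 for the free swinger. Treating a walk as a hit is a crude approximation, but throwing walks away entirely, as Batting Average does, is a worse one.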

Similarly, if we don’t have a fully credible causal inference, I’d still like to see some serious observational analysis.

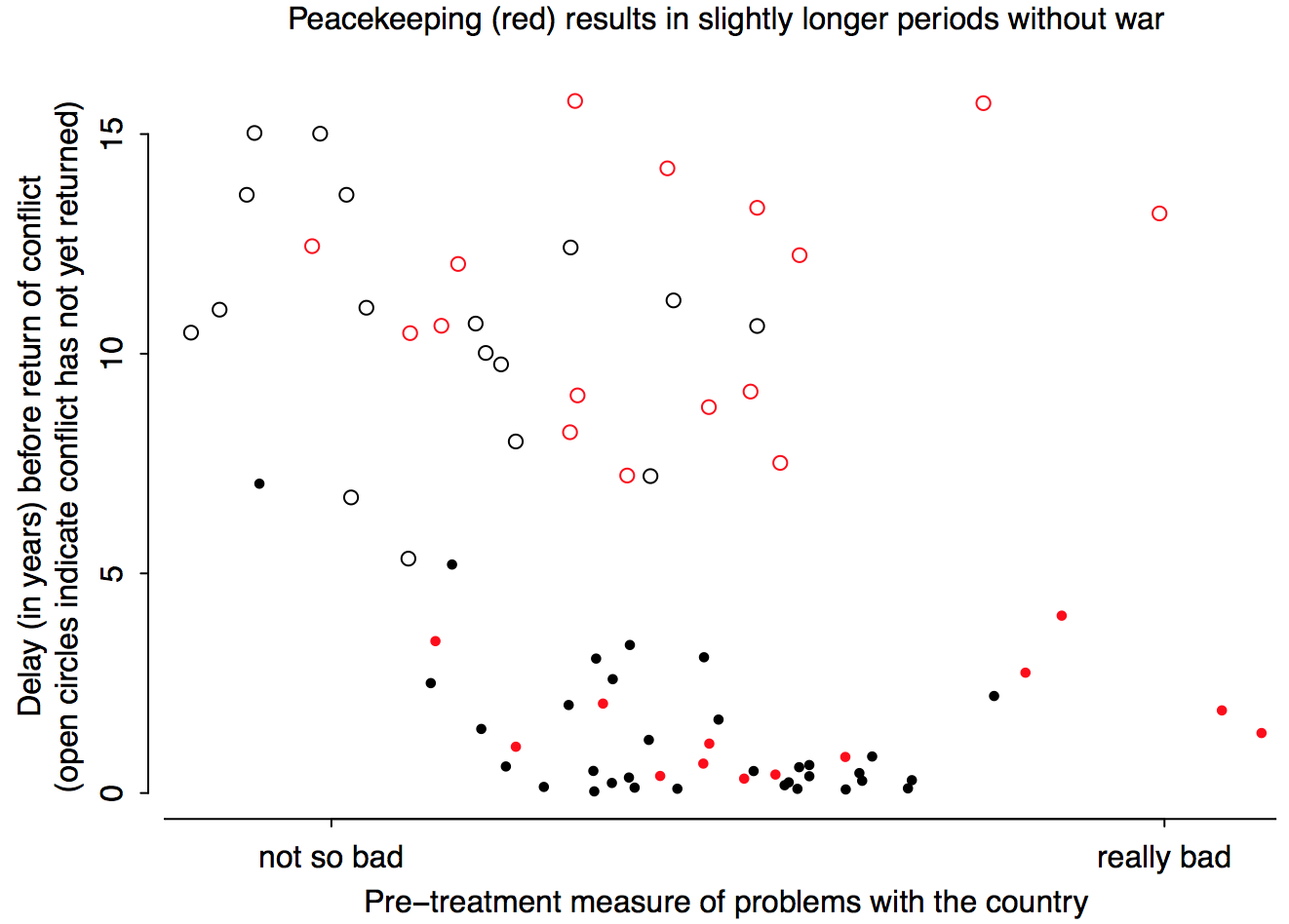

An example of this is Page Fortna’s work on the effectiveness of peacekeeping. I might be garbling the details here, but my recollection is that she compared outcomes in countries with civil war, comparing countries with and without international peacekeeping. The countries with peacekeeping did better. A potential objection arose, which was that perhaps peacekeepers chose the easy cases–maybe the really bad civil wars were so dangerous that peacekeepers didn’t go to those places. So she controlled for how bad off the country before the peacekeeping-or-no-peacekeeping decision was made, using some objective measures of badness–and she found that peackeeping was actually more likely to be done in the tough cases, and after controlling for how bad off the country was, peacekeeping looked even more effective. Here’s the graph (which I made from Page’s data when she spoke in our seminar a few years ago):

[Graph updated; previous version had the labeling all garbled.]

The red circles and points are countries with peacekeeping, the black circles and points are countries without, and the y-axis represents how long each country has gone so far without a return of the conflict. On average, the red points on the graph are higher than the black points after controlling for x; that is, countries with peacekeeping seem to do better on average (but there’s lots of variation). In an example like this, the real research effort comes from putting together the dataset; that said, I think this graph is helpful, and it also illustrates the middle range between anecdotes about individual cases, on one hand, and ironclad causal inference, on the other. I have no idea where Collier’s research fits in here, but I’d like to keep a place in policy analysis for this sort of thing. I’d hate to send the message that, if all we have is correlation, the observational statistics must be completely ignored.
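
Here is a minimal sketch of the logic of “controlling for x,” using made-up, noiseless numbers rather than Page’s data, and a plain linear regression adjustment (my assumption; I’m not claiming this is her actual model). In the toy data, x stands for pre-treatment badness, and peacekeepers go disproportionately to the worse-off countries, so the raw treated-minus-untreated difference understates the effect built into the data; adjusting for x recovers it.

```python
def mean(values):
    return sum(values) / len(values)


def residualize(v, x):
    """Residuals of v after a least-squares fit on an intercept plus x."""
    mx, mv = mean(x), mean(v)
    slope = (sum((a - mx) * (b - mv) for a, b in zip(x, v))
             / sum((a - mx) ** 2 for a in x))
    return [b - mv - slope * (a - mx) for a, b in zip(x, v)]


def naive_difference(y, z):
    """Mean outcome with treatment minus mean outcome without (no adjustment)."""
    treated = [yi for yi, zi in zip(y, z) if zi == 1]
    control = [yi for yi, zi in zip(y, z) if zi == 0]
    return mean(treated) - mean(control)


def adjusted_effect(y, z, x):
    """Coefficient on z in the regression y ~ 1 + z + x (Frisch-Waugh-Lovell)."""
    ry, rz = residualize(y, x), residualize(z, x)
    return sum(a * b for a, b in zip(rz, ry)) / sum(b * b for b in rz)


# Hypothetical, noiseless toy data (NOT Fortna's data):
# x = pre-treatment "badness", z = 1 if peacekeepers were sent,
# y = years without renewed conflict = 10 - 0.5 * x + 3 * z.
x = list(range(10))
z = [0, 0, 1, 0, 0, 1, 0, 1, 1, 1]  # peacekeeping goes more often to worse cases
y = [10 - 0.5 * xi + 3 * zi for xi, zi in zip(x, z)]

print(f"naive difference: {naive_difference(y, z):.2f}")
print(f"adjusted for x:   {adjusted_effect(y, z, x):.2f}")
```

With these numbers the naive difference is 1.30 years and the adjusted estimate is 3.00, the value built into the toy data. With real data there would be noise, and the adjustment is only as good as the measured pre-treatment variables: it says nothing about unmeasured ways in which the easy and hard cases differ, which is exactly where the causal caveats come in.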

P.S. Easterly also writes, “To a social scientist, the world is a big laboratory.” I would alter this to “observatory.” A laboratory evokes images of test tubes and scientific experiments, whereas for me (and, I think, for most quantitative social scientists), the world is something that we gather data on and learn about rather than directly manipulate. Laboratory and field experiments are increasingly popular, sure, but I still don’t see them as prototypical social science.

P.P.S. See here for some comments from Chris Blattman, who knows more about this stuff than I do.

"Similarly, if we don't have a fully credible causal inference, I'd still like to see some serious observational analysis."

I think this point is _very_ important but mostly overlooked nowadays. Social scientists do whatever it takes to make a case for causal inferences, even when it is clearly not possible.

I have the impression this is particularly true for applied economists and their search for instruments: think, for example, of the work of applied economists who are considered important, such as the author of Freakonomics.

I would like to see more serious quantitative observational studies in which statistical analysis is employed smartly but causal claims cannot be made. Any suggestions?

You might like my recent series of posts pointing out that we directly manipulate the world through judge-, legislator-, and administrator-made law. I think this should be seen for what it is: experimental sociology/economics (rather than simply justice or retribution). I also advocate taking an experimentalist approach to rule- and lawmaking going forward, working with experimental social scientists to design experiments with controls and collect data, to determine the best policies in important areas where there seems to be resolvable uncertainty.

http://www.hopeanon.typepad.com

Amen. When I hear people saying things like "regression is evil" and "no one wants to do regression any more" (both verbatim quotes by smart, technically-savvy political scientists) I feel like I'm watching the triplets floating down the gutter with the bathwater. Perhaps we can amend your paraphrase of James: the alternative to "bad regression" isn't "no regression," it's "good regression."

I think people say "regression is evil" because many questions in the social sciences are too complicated to be answered by regression analysis (or any type of data analysis). When a system has thousands of inputs and outputs it's often possible to find data to support any thesis imaginable.

Is the "casual" in the title a casual typo or can we assume a conscious cause for it?

John: Sure, but, again, what's the alternative? I think a serious quantitative analysis can still make a useful contribution.

Nick: Typo fixed.

But these alternative analyses are _very_ rare anyway, right? In how many studies do the authors openly recognize that there is no way to perform a causal analysis, but then proceed with a careful quantitative analysis, for instance interpreting regression coefficients predictively?

"I think this point is _very_ important but mostly overlooked nowadays. Social scientists do whatever it takes to make a case for causal inferences, even when it is clear not possible."

I agree with Antonio on this: scientists should first establish causal inferences before taking any action. I think it's the most important thing for them to do. Also, I don't think it's too hard to do research before taking action nowadays; if you disagree, just think about scientists 200 years ago, who didn't have any tools at all. I hope someday poverty will disappear from our planet.

-Peace

"I think people say "regression is evil" because many questions in the social sciences are too complicated to be answered by regression analysis (or any type of data analysis)."

While I am almost certain there are people who say that for that reason, those aren't the people I'm talking about (or even to). I'm referring to the "identification Taliban" types.