I came across a document [updated link here], “Applying structured analogies to the global warming alarm movement,” by Kesten Green and Scott Armstrong. The general approach is appealing to me, but the execution seemed disturbingly flawed.

Here’s how they introduce the project:

The structured analogies procedure we [Green and Armstrong] used for this study was as follows:

1. Identify possible analogies by searching the literature and by asking experts with different viewpoints to nominate analogies to the target situation: alarm over dangerous manmade global warming.

2. Screen the possible analogies to ensure they meet the stated criteria and that the outcomes are known.

3. Code the relevant characteristics of the analogous situations.

4. Forecast target situation outcomes by using a predetermined mechanical rule to select the outcomes of the analogies.

Here is how we posed the question to the experts:

The Intergovernmental Panel on Climate Change and other organizations and individuals have warned that unless manmade emissions of carbon dioxide are reduced substantially, temperatures will increase and people and the natural world will suffer serious harm. Some people believe it is already too late to avoid some of that harm.

Have there been other situations that involved widespread alarm over predictions of serious harm that could only be averted at considerable cost? We are particularly interested in alarms endorsed by experts and accepted as serious by relevant authorities.

We screened the proposed analogies to find those for which the outcomes were known and that met the criteria of similarity to the global warming alarm. Our criteria for similarity were that the situations must have involved alarms that were:

1. based on forecasts of material human catastrophe arising from effects of human activity on the physical environment,

2. endorsed by scientists, politicians, and media, and

3. accompanied by calls for strong action

This all looks good to me. But then I looked at their list of 26 analogies to the alarm over dangerous manmade global warming. There were two items on the list that I knew something about: “Electrical wiring and cancer, etc. (1979)” and “Radon in homes and lung cancer (1985).” My experience with the power lines example comes from having attended a conference on the topic in the late 1980s, having read some of the literature at the time, and then vaguely keeping up with developments since then. My experience with home radon comes from a large project that Phil Price and I did in the 1990s; it’s an example that we wrote up in various places, including my two textbooks.

To calibrate what Green and Armstrong were doing, I looked at what they said about these two cases.

To start with, it seemed iffy to me to consider these cases as comparable to the alarm over global warming, in that each of those examples (power lines and radon) is clearly limited in its scope. Power lines were at one point believed to raise the rate of childhood leukemia for a small subset of the population, and radon was (and still is, to my knowledge) believed to cause several thousand excess cancers each year in the United States. Several thousand early cancer deaths ain’t nothing, but in both cases the risks are clearly bounded, as compared to alarms over global warming melting icecaps, flooding Bangladesh, destroying Miami, etc. So to me it trivializes the climate change risk (or, alternatively, the extent of climate change alarmism) to link it to bounded risks like power lines and radon.

On the other hand, I’ve long held that we can learn about small probabilities by extrapolating from precursor data, so, as long as this extrapolative nature of the argument is formally part of the model, maybe something useful can be learned here. I’m open to the idea that historical analysis of the perceptions of small, bounded risks could be relevant to our understanding of the perception of a potentially large, unbounded risk.

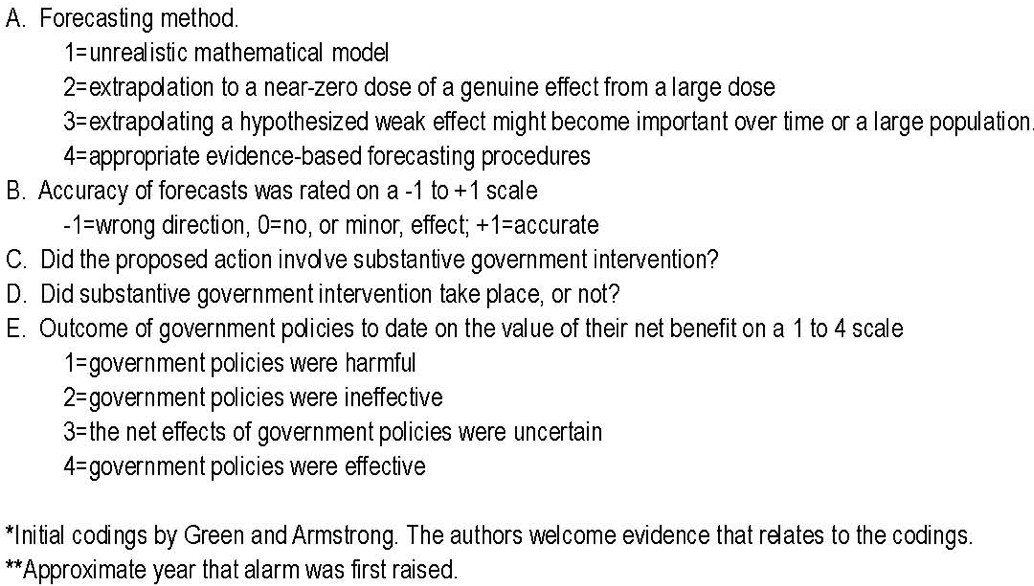

So now on to the details. Here’s their coding system:

And here’s how they code the two risks that I know something about:

[Two images: Green and Armstrong’s codings of the electrical wiring and radon analogies.]

I dispute a lot of these codings. In particular, my impression is that the consensus is that electrical wiring has no effect on cancer. So that should be coded as a 0, not a -1. They also code that there was “substantial government intervention” in the area. I think that “substantial” would be overstating things here, but I guess the real point is that “substantial” needs to be defined more clearly. I’ll accept that, to the extent there was any intervention, it was retrospectively harmful in that it was combating a non-risk.

Now on to the radon example. They code the accuracy of forecasts as a -1. Huh? Are they claiming that home radon exposure actually saves lives? Sure, I know that some people make that claim, but my impression is that it’s a minority view and hardly a reasonable summary of expert consensus here. They next write that the proposed action involved “substantial government intervention.” I don’t think this is right at all. The EPA recommends that homeowners test their houses for radon and remediate if the measurement exceeds a specified level. We have argued that these recommendations are far from optimal, but I’d hardly call such recommendations “substantial government intervention.” The next claim is that these policies (recommending measurement and intervention) were “harmful.” I don’t see such clarity here. As we discussed in our research paper, it’s a tradeoff between dollars and lives. You can make the claim that the tradeoff was, in aggregate, too expensive and thus the policies were harmful, but this hardly seems clear to me. I’d guess that most observers would consider the policies to be effective in that they saved lives at a reasonable cost. (See Table 5 of our paper for some estimates of cost per life saved under various decision rules and various assumptions about risk.)

P.S. The latest date on any of these documents is 31 Mar 2011, so perhaps the authors have given up on the project. Which seems too bad, but given what they had so far, I think if they wanted to continue, they’d need to scrap it and start all over. I’ve had various research ideas that I ended up quitting on because they just didn’t work; this could be such an idea of theirs.

The outdated nature of all this made me wonder if I should post on it at all. But I think the general issues are interesting enough that it was worth a look.

Thanks for commenting on this. Armstrong certainly has a justified reputation in forecasting – I’m about 18 inches from his “Principles of Forecasting” as I sit here at my desk. He also has a good website on the Delphi technique, which I’ve used in the forecasting classes I teach as an adjunct to show this method.

But I’ve also been using this article both to illustrate how you would use analogies to forecast and the dangers of using analogies to forecast. There seemed to be political bias in this (remember Armstrong’s offer to bet with Al Gore?), and it seemed clear to me that the selection of analogies and their method of presentation could be a source of bias.

For example, consider the treatment of Rachel Carson’s Silent Spring. Green and Armstrong focus entirely on the banning of DDT, and they are likely correct that this complete ban was overdone (this is not my area of expertise). But, from my perspective, Carson’s book had a much larger positive effect on consciousness which has led to many positive effects.

The screenshot of the coding system is more or less unreadable.

I uploaded a clearer image.

Much clearer indeed, cheers.

Here is some data:

1) DeSmogBlog profiles:

Scott Armstrong.

Read list of relevant publications.

Kesten Green

“As have other environmental alarms before it, the dangerous man-made global warming alarm will fade. Unfortunately, policies that make most people worse off have and will be implemented. We would be better off if we didn’t keep repeating the same old mistakes.”

Willie Soon, their (astrophysicist) coauthor of a paper pooh-poohing any threat to polar bears.

2) Gavin Schmidt (NASA GISS climate scientist) commentary on one of their papers.

Their paper applied “scientific forecasting” to climate modeling and found it very deficient. Gavin:

“G+A’s recent foray into climate science might therefore be a good case study for why their principles have not won wide acceptance. In the spirit of their technique, we’ll use a scientific methodology – let’s call it ‘the principles of cross-disciplinary acceptance’ (TM pending). For each principle, we assign a numerical score between -2 and 2, and the average will be our ‘scientific’ conclusion…

Principle 1: When moving into a new field, don’t assume you know everything about it because you read a review and none of the primary literature.

Score: -2

G+A appear to have only read one chapter of the IPCC report (Chap 8), and an un-peer reviewed hatchet job on the Stern report. Not a very good start…

Principle 2: Talk to people who are doing what you are concerned about.

Score: -2

Of the roughly 20 climate modelling groups in the world, and hundreds of associated researchers, G+A appear to have talked to none of them. Strike 2.”

….more

Basically, they believe their “scientific forecast” overrides physics such as conservation of energy and the greenhouse effect.

3) See pp.111-112 of Strange Scholarship in the Wegman Report.

Armstrong is well-glued in with Willie Soon, Fred Singer, Heartland Institute, CATO, Energy and Environment, etc. climate anti-science.

Conclusion:

I’d suggest that the goal of this was to conclude that any concern over global climate change was unwarranted.

John:

Perhaps a good analogy would be the scientists from the 1930s and 1940s who were members of the Communist Party. Some of them did great science but their judgment seemed to evaporate when they came too close to sensitive topics.

Yes, red flags in either case… (in this case, from long history on Armstrong):

a) Coauthor with Willie Soon.

b) A bunch of publications in Energy & Environment

c) Heartland expert on Global Warming

d) Involved in Heartland/Fred Singer’s NIPCC report

Any one of those = ~negative infinity on credibility scale for anyone familiar with this turf.

Again, his “scientific forecasting” nullifies modern physics, which is good enough for the targeted audience.

It looks like they talk about “substantive” intervention, not “substantial intervention.”

I don’t know anything about the electric fields example but they definitely have the radon example wrong. There has been some “substantive” government intervention — I think all military housing is tested for radon, and in a lot of states schools (and sometimes other public buildings) are tested and mitigated. And there are government-run informational campaigns (who can forget that January is National Radon Action Month?). Taken together it doesn’t amount to much, but it’s not nothing.

For radon, the coding should be

2 : extrapolation to low dose of a genuine effect of low dose.

(one could argue for both 3 and 4 as well but 2 is probably the single best choice).

1 : accuracy of the forecasts is: accurate

(one could argue for 0 and I’m not sure we’ll ever really know, but the best case-control studies find increased lung cancer at radon exposure at around the government’s recommended “action level”, so 1 is probably the best choice here).

1 : did the proposed action involve substantive government intervention?

(depends on what you mean by substantive.)

1 : did substantive intervention take place?

(there hasn’t been as much intervention as many radon scientists recommended, and again this depends on what one means by “substantive”, but, as discussed above, there has been some).

3 : the net effect of government policies is uncertain.

(Arguably, 4 could be a better answer. Recommendations to test for, and mitigate, high radon exposures have surely saved lives, but whether they have saved enough lives to have been worth the costs and stress etc. is hard to assess.)

So, rather than the paper’s coding of

2, -1, 1, 1, 1

the radon example should be

2, 1, 1, 1, 3

or maybe

4, 1, 1, 1, 4

The switches from -1 to 1 on whether the effect is in the right direction, and from government efforts being harmful to uncertain or beneficial, seem pretty important!
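To make the recoding above concrete, here is a minimal Python sketch that lines up the three five-number codings and reports which entries differ. The field names are hypothetical labels for the five columns, and the meanings attached to the numbers are inferred from this discussion, not taken from Green and Armstrong’s codebook.

```python
from __future__ import annotations

from typing import NamedTuple


class Coding(NamedTuple):
    # Hypothetical column labels; meanings are inferred from the discussion.
    alarm_basis: int            # category of the alarm's basis (2 = low-dose extrapolation)
    forecast_accuracy: int      # -1 wrong direction, 0 no or minor effect, 1 accurate
    intervention_proposed: int  # 1 if substantive government intervention was proposed
    intervention_occurred: int  # 1 if substantive intervention took place
    policy_effect: int          # assumed: 1 harmful, 2 ineffective, 3 uncertain, 4 effective


def changed_fields(a: Coding, b: Coding) -> dict[str, tuple[int, int]]:
    """Return {column: (value in a, value in b)} for every column that differs."""
    return {name: (x, y) for name, x, y in zip(Coding._fields, a, b) if x != y}


paper = Coding(2, -1, 1, 1, 1)        # the paper's radon coding
recoded = Coding(2, 1, 1, 1, 3)       # the suggested recoding
alternative = Coding(4, 1, 1, 1, 4)   # the "or maybe" variant

print(changed_fields(paper, recoded))
print(changed_fields(paper, alternative))
```

The first comparison flags only the forecast-accuracy and policy-effect columns, which are the two switches described above; the alternative coding also changes the first column.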

It is interesting that for all of the analogies the accuracy of the forecast (column B) was rated as -1 (wrong direction) or 0 (no or minor effect) and none were +1 (accurate). That seems to me to be biasing the study somewhat!

The big problem (apart from the obvious bias in selection and coding – excluding vaccinations is a big omission) is that analogies work best when they are opportunistic, ad hoc, and abandoned as quickly as they are adopted. Analogies, if used generatively (i.e. to come up with new ideas), can be incredibly powerful. But when used exegetically (i.e. to interpret or summarize other people’s ideas), they can be very harmful. I’ve given some examples here:

http://metaphorhacker.net/2013/10/pervasiveness-of-oblidging-metaphors-in-thought-and-deed

The big problem is that in our cognition, ‘x is y’ and ‘x is like y’ are often treated very similarly. But the fact is that x is never y. So every analogy has to be judged on its own merit and we need to carefully examine why we’re using the analogy and at every step consider its limits. The power of analogy is in its ability to direct our thinking (and general cognition) i.e. not in its ‘accuracy’ but in its ‘aptness’.

I have long argued that history should be included in considering research results and interpretations. For example, every ‘scientific’ proof of some fundamental deficiencies of women with respect to their role in society has turned out to be either inaccurate or non-scalable. So every new ‘proof’ of a ‘woman’s place’ needs to be treated with great skepticism. But that does not mean that one such proof does not exist. But it does mean that we shouldn’t base any policies on it until we are very very certain.

Side note: I think there’s an important difference between ‘substantial’ which implies extent and ‘substantive’ which only implies volition.

I note the four categories for government policy effectiveness: harmful, ineffective, uncertain, effective. There is, by my count, just one positive category in there. Does “ineffective” mean negligible in effect or a judgement that policy attempts were futile? As a matter of principle no government policy could be very effective? As several other comments imply, this sounds like ideology masquerading as science.

Besides, what is “government” here? Are we presupposing a US context here (in discussing _global_ warming, mark you)? National, regional, local?

I’ll comment on the one place where i know something. i’d argue that govt. policy towards radon was more than trivial. the epa’s pronouncement of guidelines has meant, in effect, that houses in the radon belt are routinely tested at time of sale, and houses with radon exposure are either remediated or sell for less than those that pass. i doubt that this would have happened without the epa guidelines – they are formalized in the requirements of most (All?) of the home inspection trade groups, for instance. without standards, there’d be nothing to formalize in an inspection.
