Peter Dodds, Lewis Mitchell, Andrew Reagan, and Christopher Danforth write:

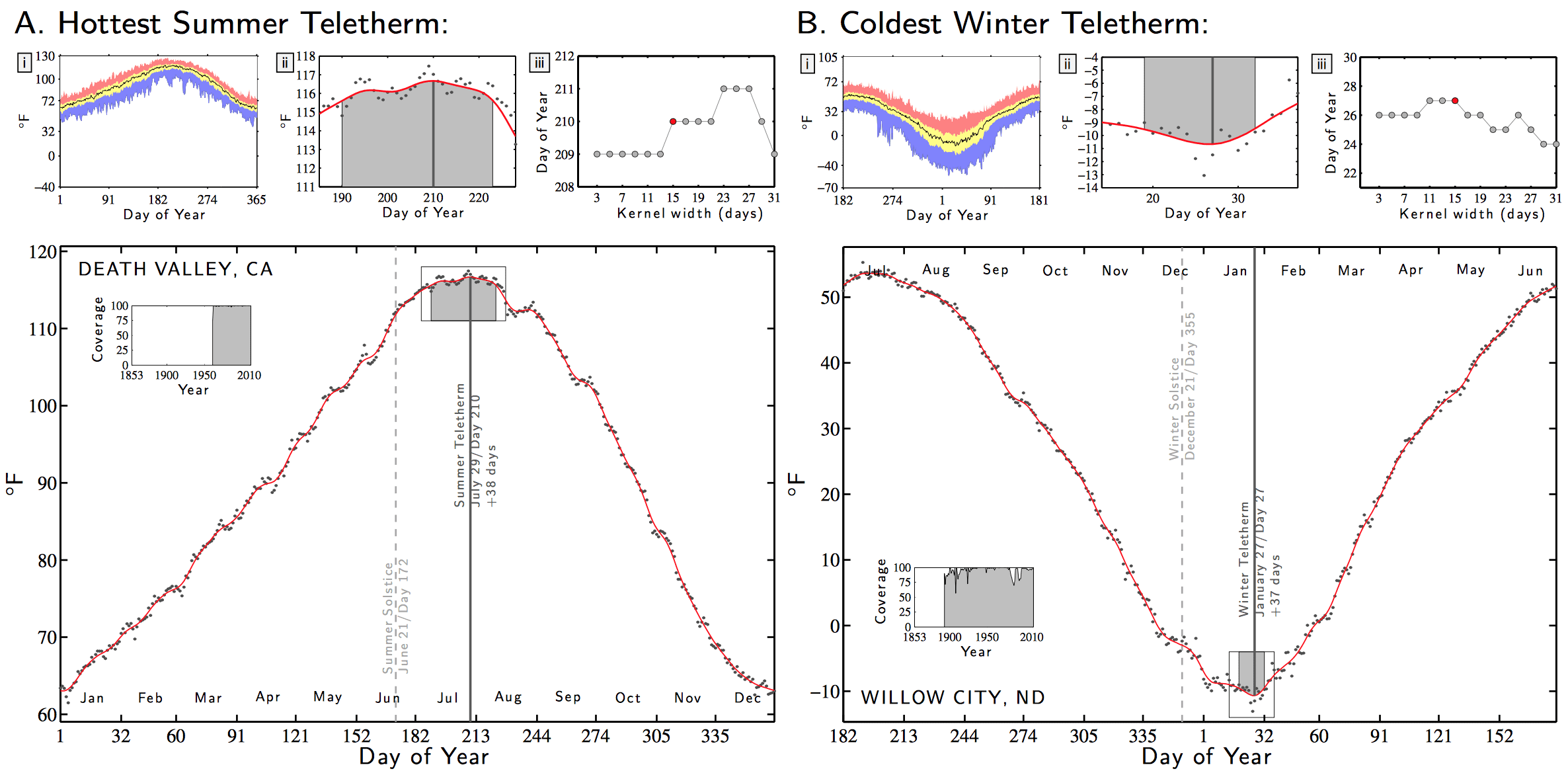

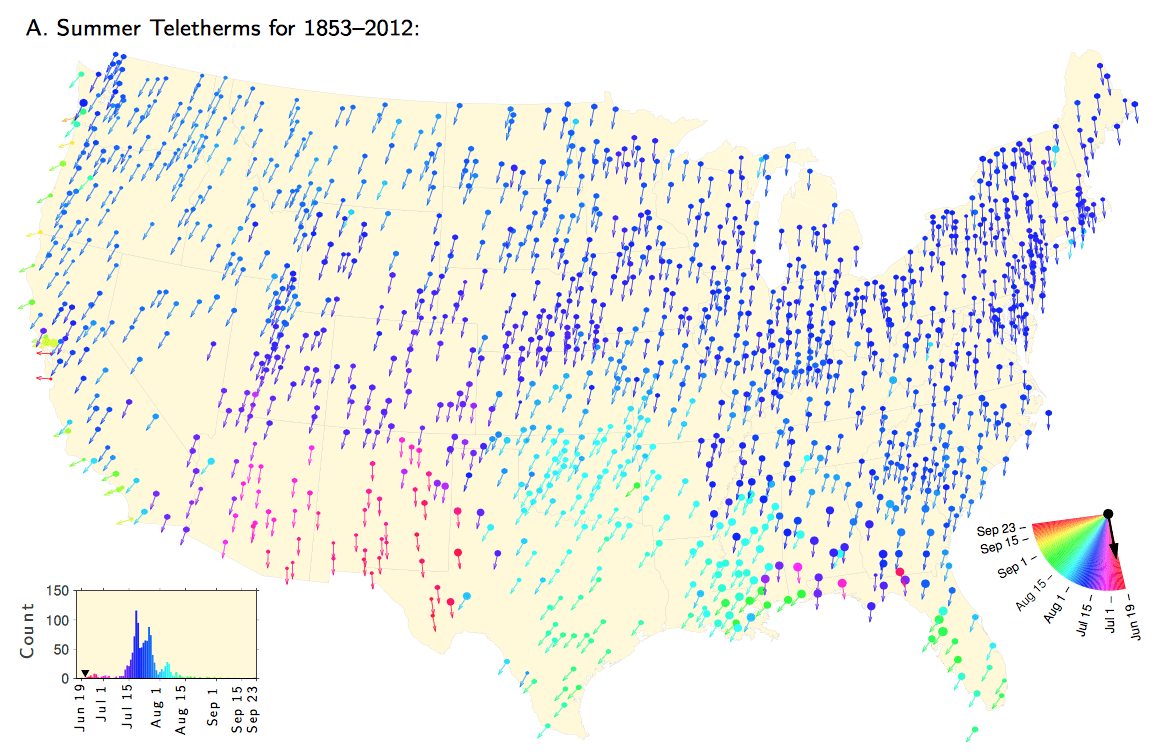

We introduce, formalize, and explore what we believe are fundamental climatological and seasonal markers: the Summer and Winter Teletherm—the on-average hottest and coldest days of the year. We measure the Teletherms using 25 and 50 year averaging windows for 1218 stations in the contiguous United States and find strong, sometimes dramatic, shifts in Teletherm date, temperature, and extent. For climate change, Teletherm dynamics have profound implications for ecological, agricultural, and social systems, and we observe clear regional differences, as well as accelerations and reversals in the Teletherms.

Of course, the hottest and coldest days of the year are not themselves so important, but I think the idea is to have a convenient one-number summary for each season that allows us to track changes over time.

One reason this is important is that one effect of climate change is to mess up synchrony across interacting species (for example, flowers and bugs, if you’ll forgive my lack of specific biological knowledge).

Dodds et al. put a lot of work into this project. For some reason they don’t want to display simple time series plots of how the teletherms move forward and backward over the decades, but they do have this sort of cool plot showing geographic variation in time trends.

You’ll have to read their article to understand how to interpret this graph. But that’s ok, not every graph can be completely self-contained.

They also have lots more graphs and discussion here.

Without having read their article or anything, just based on those two graphs of the hottest/coldest days, I’m going to disagree with their methodology.

They’re looking for a maximum or minimum, so the technique of using kernel estimates is going to be noisy and depend on the kernel. I think it makes sense to locally approximate the time series with a quadratic of the form Temp = T_maxmin ∓ Tvar/2*((day − teleday)/telescale)^2 (minus for the summer maximum, plus for the winter minimum), with priors on teleday based on the solstice date, priors on Tvar based on the overall scale of variation at the location of interest, and telescale being the average timescale over which the quadratic fits well across the whole country.
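A minimal least-squares sketch of the local-quadratic idea. It assumes the input is a 365-value daily climatology (for example, the multi-year mean for each day of the year), fits a parabola in a window around the raw extreme, and reads off the vertex. It has none of the priors or pooling described above, and the function name and the ±30-day window are hypothetical choices.

```python
import numpy as np

def quadratic_teletherm(climatology, half_width=30, hottest=True):
    """Fit a parabola to a daily climatology around its extreme (hypothetical helper).

    Returns (teleday, extreme_temp, a), where a is the quadratic coefficient.
    Days are 0-based and the year is treated as circular, so windows may wrap.
    """
    y = np.asarray(climatology, dtype=float)
    n = y.size
    d0 = int(np.argmax(y) if hottest else np.argmin(y))   # rough starting point
    x = np.arange(-half_width, half_width + 1)            # offsets from d0
    window = y[(d0 + x) % n]                               # wraps across Jan 1
    a, b, c = np.polyfit(x, window, 2)                     # window ~ a*x**2 + b*x + c
    x_star = -b / (2 * a)                                  # vertex offset from d0
    teleday = (d0 + x_star) % n
    extreme_temp = c - b**2 / (4 * a)                      # parabola value at the vertex
    return teleday, extreme_temp, a
```

Matching coefficients with the form above gives a = ∓Tvar/(2·telescale²), so once Tvar is fixed, telescale can be recovered as sqrt(Tvar / (2·|a|)).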

The kernel method is going to be noisy, whereas the local polynomial (Taylor series) should work well to regularize the estimates, and you can pool information across stations by partially pooling Tvar and telescale geographically, etc.
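To make “partially pooled” concrete, here is a minimal normal-normal shrinkage sketch. It assumes each station already has an estimate (of teleday, Tvar, or telescale) with a standard error, and that the between-station scale tau is known. A full Bayesian model would estimate tau and pool by geography rather than toward a single common mean. The function name is hypothetical.

```python
import numpy as np

def partial_pool(estimates, std_errors, tau):
    """Shrink noisy station-level estimates toward a common mean (hypothetical helper).

    Model: estimate_j ~ N(theta_j, se_j^2), theta_j ~ N(mu, tau^2), tau treated as known.
    Returns (posterior means of theta_j, estimate of mu).
    """
    y = np.asarray(estimates, dtype=float)
    se = np.asarray(std_errors, dtype=float)
    w = 1.0 / (se**2 + tau**2)               # marginal precision of each estimate
    mu = np.sum(w * y) / np.sum(w)           # precision-weighted common mean
    shrink = tau**2 / (tau**2 + se**2)       # 1 = no pooling, 0 = complete pooling
    return mu + shrink * (y - mu), mu
```

Noisy stations (large se) get pulled strongly toward the mean, while well-measured ones keep most of their own estimate.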

So it looks like they plan to upload their code and data, which is great. I also agree with them that variation in the hottest/coldest day of the year is a good thing to track. Once their data are up, I’m tempted to compare their estimates with a Stan model using the Taylor-series approach with spatial Gaussian process priors on the coefficients. I suspect the variations will be substantially reduced.
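The core ingredient of a spatial Gaussian process prior on the coefficients is a covariance matrix that makes nearby stations’ coefficients similar. Here is a minimal NumPy sketch with a squared-exponential kernel. It assumes station coordinates have already been projected to planar kilometres. The amplitude and length scale are hypothetical values that a Stan model would give priors and estimate; Stan’s `gp_exp_quad_cov` builds the same kind of matrix. The function names are illustrative.

```python
import numpy as np

def sq_exp_cov(coords_km, amplitude, length_scale_km, jitter=1e-6):
    """Squared-exponential covariance between stations at planar coordinates (km)."""
    x = np.asarray(coords_km, dtype=float)
    d2 = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)   # squared distances
    K = amplitude**2 * np.exp(-0.5 * d2 / length_scale_km**2)
    return K + jitter * np.eye(len(x))                        # small jitter for stability

def draw_gp_prior(coords_km, mean, amplitude, length_scale_km, rng):
    """One prior draw of a spatially correlated coefficient (e.g., teleday) per station."""
    K = sq_exp_cov(coords_km, amplitude, length_scale_km)
    L = np.linalg.cholesky(K)
    return mean + L @ rng.standard_normal(len(K))

rng = np.random.default_rng(1)
stations = [[0, 0], [10, 0], [1000, 0]]   # hypothetical coordinates in km
print(draw_gp_prior(stations, mean=200.0, amplitude=5.0, length_scale_km=300.0, rng=rng))
```

With a 300 km length scale, the two stations 10 km apart get nearly identical draws, while the one 1000 km away varies almost independently.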

For example, looking at the Willow City graph, it’s pretty clear that the chosen date is based on a small-timescale fluctuation of around 3 or 4 days. If the location of that kind of fluctuation can vary randomly by 10 or 20 days because of the weather in a given year, then you’re going to pick it up, even though it’s probably not meaningful. If instead you fit a quadratic to a longer-timescale fluctuation, you’re going to pick up a more stable date, whose shift over decades will be more relevant for issues like the biological one you mention.

They use 25- and 50-year averaging windows, which of course will help a lot with stabilizing things. But I agree with your general point. Another way they (or someone) could stabilize things is to look at (1) a longer timescale than a day, and/or (2) something less extreme than “maximum” or “minimum”. For instance, if they looked at, say, the THIRD-hottest and THIRD-coldest WEEKS of the year, they could afford to use a shorter averaging window. Also, when it comes to things like crop health, wildfire danger, and even human health, a week seems like a more relevant timescale than a day.
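The “third-hottest week” summary is easy to compute for a single year. Here is a minimal sketch that assumes “weeks” means non-overlapping 7-day blocks starting on January 1, with the leftover day or two at the end of the year dropped. The function name is hypothetical.

```python
import numpy as np

def kth_extreme_week(daily_temps, k=3, hottest=True):
    """Return (week_index, mean_temp) of the k-th hottest (or coldest) 7-day block."""
    t = np.asarray(daily_temps, dtype=float)
    n_weeks = t.size // 7                                   # drop the leftover 1-2 days
    weekly = t[: n_weeks * 7].reshape(n_weeks, 7).mean(axis=1)
    order = np.argsort(weekly)                              # coldest first
    if hottest:
        order = order[::-1]                                 # hottest first
    idx = int(order[k - 1])
    return idx, float(weekly[idx])
```

Non-overlapping blocks are a deliberate choice. With a sliding 7-day window, the second- and third-hottest windows would usually just be the hottest one shifted by a day.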

I was going to say “…they might not want to do this for reasons that essentially come down to PR: the method seems just a bit more complicated to explain, and the results will be a bit less extreme.” But although the first of these is true, the second might not be: as I mentioned, they could afford to use a shorter averaging window, so perhaps the changes in recent decades would show up more strongly.

I agree with you here. I’m very interested in getting their data off GitHub and doing some alternative analyses. You can see in some of their supplements that they get a “bifurcation” of the teletherm, which I suspect is probably due to this noise. The truth is more like this: there are a couple of weeks or so when the hot stuff happens, and the specifics of the weather within that period are causing the noise and the bifurcation.

I think an obvious dimensionless ratio of interest is the scale of day-to-day variation relative to the scale of variation from high to low within a given day, averaged across wide regions. The within-day variation averaged across wide regions is something there’s lots of biological adaptation to (nocturnal vs. diurnal creatures, for example). So using that inherent scale to decide what constitutes the “hottest period” rather than the “hottest day” would be more inherently justified. In my Taylor-series-type model, the within-day variation is more or less a way to set Tvar, whereas the period over which the daily trend varies by a similar amount is more or less telescale.

Phil:

The authors of the paper could comment better than I could on this, but my impression is that they’re purposely creating a grabby 1-number summary, not so much for PR (unless you consider “PR” in a very general sense of the term) but rather because the data are so overwhelming that it can make sense to have a quick number that can give a sense of spatial and temporal variability. It’s similar to how Bill James would define player-specific statistics such as “runs created” which would facilitate further between-player analyses.

But, yes, regarding Phil’s other point: I do think that it would make sense to use shorter windows in the context of some Bayesian procedure that would produce efficient estimates using partial pooling.

Yay to them for intending to put their data up on GitHub. I’ll take a look when it appears and see if there’s a reasonable Bayesian model-based way to extract the same type of summary. The 15-day Gaussian is a good first pass, and super easy to compute, but it’s worthwhile to look at other ideas too.

I agree with you, finding good summaries of the data is a great first step towards doing more in-depth analysis.

> 25-50 year averaging window

Actually, the red curve is a 15-day Gaussian kernel-weighted average. They take the day on which that smoothed curve hits its peak as the teletherm day. They justify the use of a 15-day kernel width with a sensitivity analysis (the plot in the upper right of their layout). So in plots A and B above, you can see that their chosen 15-day width is plotted in red, right on the edge of a jump in both plots.
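Here is a minimal sketch of the smooth-then-take-the-peak procedure for a 365-value daily climatology. The year is treated as circular so that a Winter Teletherm near January 1 is handled. The mapping from width W to standard deviation, sigma = W/4, follows the reading of their bandwidth given further down this thread; it is an assumption, not necessarily the paper’s exact definition. The function name is hypothetical.

```python
import numpy as np

def gaussian_teletherm(climatology, width_days=15.0, hottest=True):
    """Smooth a 365-day climatology with a circular Gaussian kernel, then locate its extreme.

    Assumes sigma = width_days / 4 (hypothetical convention). Returns (day, smoothed curve).
    """
    y = np.asarray(climatology, dtype=float)
    n = y.size
    sigma = width_days / 4.0
    lags = np.arange(n)
    lags = np.minimum(lags, n - lags)            # circular distance from day 0
    kernel = np.exp(-0.5 * (lags / sigma) ** 2)
    kernel /= kernel.sum()                       # weights sum to 1
    # Circular convolution via FFT: each day becomes a kernel-weighted average
    smooth = np.real(np.fft.ifft(np.fft.fft(y) * np.fft.fft(kernel)))
    day = int(np.argmax(smooth) if hottest else np.argmin(smooth))
    return day, smooth
```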

Their technique is simple and lets them start to explore, but it’s definitely noisy.

Oh, that’s much better than I expected, though…not unlike choosing the hottest two-week period. I’ll read what they say before I implicitly criticize it more!

> Their technique is simple and lets them start to explore, but it’s definitely noisy.

I disagree. I look at their sensitivity plot and conclude that their result is pretty insensitive to choice of kernel width. What’s your working definition of noisy?

Well, I’d rather play with their data than analyze things based on just a couple of graphs. But if you look at their website, you’ll find that they plot the teletherm day against year for tons of stations, and lots of stations have “bifurcations” where the teletherm day bounces back and forth by maybe 10 days. I suspect this is largely noise. In general, I think they might do well to use a “teletherm interval”, the time period during which the filtered temperature is within some narrow limit of the year’s maximum. This would emphasize that what we’re basically talking about is a period when it’s the “hottest all year”, and because the curve is at a maximum, small deviations in time mean essentially no important deviation in temperature.
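The “teletherm interval” can be sketched directly: start at the peak of the smoothed curve and extend in both directions while the temperature stays within delta of the maximum. This assumes the input is already smoothed and that the year is circular. The threshold delta is a hypothetical parameter; one option is to set it from the typical within-day range, as suggested earlier in the thread.

```python
import numpy as np

def teletherm_interval(smoothed, delta):
    """Contiguous run of days around the maximum with smoothed temp >= max - delta.

    Treats the year as circular. Returns (start_day, end_day, length) with 0-based days.
    """
    y = np.asarray(smoothed, dtype=float)
    n = y.size
    peak = int(np.argmax(y))
    ok = y >= y[peak] - delta
    if ok.all():                       # the whole year is within delta of the maximum
        return 0, n - 1, n
    start = peak
    while ok[(start - 1) % n]:         # walk backward, wrapping past Jan 1
        start -= 1
    end = peak
    while ok[(end + 1) % n]:           # walk forward, wrapping past Dec 31
        end += 1
    return start % n, end % n, end - start + 1
```

Reporting the interval’s length alongside its endpoints makes a flat-topped year (long interval) easy to tell apart from a sharply peaked one.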

Whenever you’re finding the maximum of y = f(x) + noise, f(x) barely changes over a largish interval around its max/min, so a small fluctuation in the noise can mean a large fluctuation in x*, the location of the maximum. Their sensitivity analysis shows that they can get a range of several days for kernels between 1 and 30 days wide. But a kernel with a 30-day bandwidth has most of its weight within one standard deviation, which under their definition of bandwidth is roughly 30/4 = 7.5 days. I definitely think they should look at sensitivity plots for averaging over much more than ±7.5 days: say, a 30-day standard deviation, or 120 days of width according to their formula.
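The sensitivity check suggested here, extended to much wider kernels, can be sketched on synthetic data. Everything below is an assumption for illustration: a cosine seasonal cycle peaking on day 200, independent Gaussian day-to-day noise averaged over 50 hypothetical years, and sigma = W/4 as read above. It is not the paper’s data or code.

```python
import numpy as np

def smooth_circular(y, sigma):
    """Circular Gaussian-kernel average of a daily series (sigma in days)."""
    n = y.size
    lags = np.arange(n)
    lags = np.minimum(lags, n - lags)          # circular distance in days
    k = np.exp(-0.5 * (lags / sigma) ** 2)
    k /= k.sum()
    return np.real(np.fft.ifft(np.fft.fft(y) * np.fft.fft(k)))

def width_sensitivity(climatology, widths):
    """Hottest-day estimate for each kernel width W, assuming sigma = W / 4."""
    y = np.asarray(climatology, dtype=float)
    return np.array([int(np.argmax(smooth_circular(y, w / 4.0))) for w in widths])

# Synthetic data only: a seasonal cycle peaking on day 200 plus iid "weather"
# noise, averaged over 50 hypothetical years.
rng = np.random.default_rng(0)
days = np.arange(365)
seasonal = 15.0 * np.cos(2 * np.pi * (days - 200) / 365)
climatology = (seasonal + rng.normal(0.0, 4.0, size=(50, 365))).mean(axis=0)
widths = [1, 5, 15, 30, 60, 120, 180]
print(dict(zip(widths, width_sensitivity(climatology, widths).tolist())))
```

At W = 1 the estimate is essentially the raw argmax of the noisy 50-year mean, which is where the flat-maximum problem bites hardest. Wider kernels average the noise over more days near the peak. Independent noise is a simplification; real weather is autocorrelated from day to day.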

365/4 = 91.25, which is more or less the “duration of summer”, so a standard deviation of maybe 45 days makes some sense as an upper end, and their W would be 180 days or so on that scale.

Anyway, I think they’ve done a good job of getting started on this analysis, and they’ve described an important issue. Figuring out good related ways to measure and describe the concept can be a next step.

In general, won’t a dataset as large and rich as the climate dataset allow for many, very creative, synthetic metrics of relevance with appealing post hoc justifications?

How long until someone comes up with an analysis of the time-rate-of-change-of-teletherm?

Rahul:

Yes, definitely. With climate as with baseball, it’s good for people to look at data from many perspectives and do all sorts of modeling, post-processing, etc. I do think that the rate of change of the teletherm could be a useful summary; indeed, I suggested to Dodds that they make time series plots of their estimated teletherms. Ultimately this could all be folded into a model, but often it is a useful intermediate step to postprocess inferences and see what you get.
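Here is a minimal sketch of a “time-rate-of-change of teletherm” summary: an ordinary least-squares slope of teletherm day against year, reported in days per decade. It assumes one teletherm estimate per period (for example, from successive averaging windows). It unwraps the day of year so that a Winter Teletherm drifting across January 1 doesn’t look like a 365-day jump. The function name is hypothetical.

```python
import numpy as np

def teletherm_trend(years, teledays, days_per_year=365):
    """OLS slope of teletherm day vs. year, in days per decade (hypothetical helper).

    Day-of-year values are unwrapped first, so a date drifting across Jan 1
    (e.g., 364 -> 1) counts as a 2-day shift rather than a -363-day jump.
    """
    d = np.asarray(teledays, dtype=float)
    angle = np.unwrap(2 * np.pi * d / days_per_year)       # remove 2*pi jumps
    d_unwrapped = angle * days_per_year / (2 * np.pi)
    slope_per_year, _ = np.polyfit(np.asarray(years, dtype=float), d_unwrapped, 1)
    return 10.0 * slope_per_year
```

If the estimates come from overlapping averaging windows, neighboring points are correlated, so a naive regression standard error for this slope would be too optimistic.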

I agree with you, it makes sense to look in many ways. I think Rahul’s point is more or less that in the absence of some kind of physical model for teletherms you can’t claim that you understand teletherms. I agree with that too!

Contrary to the typical approach lampooned on this blog (shirts and cycles, boy/girl birth rates, fat arms and politics, etc.), so long as you don’t claim to know THE TRUTH about a topic, searching for the truth via a lot of exploratory data analysis is a legit thing to do. It’s only once you really do have a couple of competing causal theories that you need to narrow them down, work out explicit differences in their predictions, and look for evidence that can rule one or the other out.

Also, when all you have is a single historical archive, you’re inevitably going to re-analyze the same dataset in light of different models. Accidental overfitting, or finding evidence for wacky theories (groundhogs predict the duration of winter), is an issue; there’s no way to get around it entirely.

One question is at what point does one publish? Is every exploratory data analysis publishable?

Once published, people seem to forget that an article could have been exploratory.

In fact, do authors make it easy for a reader to see whether a work was exploratory?

I went on a rant about publication, but deleted it before I posted it. Basically, refereed journal publication is crap on a stick. Put all your data and code and your opinions about it on a site like GitHub. The only thing refereed journal articles add is a dick-measuring contest to help decide which monkey gets the grant funding bananas.

> The only thing refereed journal articles add is a dick-measuring contest to help decide which monkey gets the grant funding bananas.

I have a new favorite mixed metaphor :-)

+1

Hi Andrew. Thanks for the post!

In fact, we now have oodles of plots for how the Teletherms evolve at each station (using 10, 25, and 50 year averages).

Links to these and three example plots are shown here:

http://compstorylab.org/share/papers/dodds2015c/places.html

And we’re going to add these graphs to the bottom of the online site so that when you mouse over a station, a version of these time series will appear below the map.

Re having a model for Teletherms—well, that would be fantastic but this work is a start to opening up such a possibility. The regional variations are quite detailed so it would seem that local geography could come into play, and the work might move into quite specific modeling. The paper on the arXiv is a first public draft and I hope we make it clear that what we’re doing is describing something well, or attempting to.

My initial reaction to the paper is that I like it quite a bit. Two things that I’m still puzzling out:

1) Looking at the SE in Figs. 5a and 5b, there are dramatic swings in both Summer and Winter teletherms over relatively short geographic scales. For example, see MS, AL, and GA. What are the temperature changes associated with those shifts? Are the changes in max and min temp small, comparable, or large relative to other geographic regions where the teletherms exhibit less spatial variation?

2) Do the main plots in Figs. 2 and 3 show average extreme temperature over a fifty-year period? That’s what I’m taking away from the text, but there are low-amplitude oscillations with a period of (roughly) a little less than a month. I’m really surprised that you’d see month-scale oscillations in data averaged over a 50-year period. Am I misinterpreting the plot? If I’m not, then what is the origin of those oscillations?

Does a “one-number summary” of climate change make as much sense as the much-vilified one-number summary produced by NHST?

Rahul:

It’s one number per location and year. These numbers can then be post-processed. It makes as much sense as summarizing each state legislative election by “partisan bias” and “electoral responsiveness” numbers, which can then be post-processed, to take an example from my own research.

The problem with (the usual use of) p-values is not that they are a data reduction. The problem is that a p-value isn’t much of a measure of anything useful. It’s not a particularly good data summary. As with sabermetrics, some data summaries are better than others.

Suppose you take the teletherm shifts and estimate a continuous surface using a thin plate spline*. And let’s say you compute a weight for each point that is a function of the teletherm duration and/or the magnitude of the temperature change. What does that continuous surface look like?**

* GCV (generalized cross-validation) to determine the regularization parameter. Ref: http://www.image.ucar.edu/pub/nychka/manuscripts/OV1.pdf

** A TPS surface may not be the best choice as there can be very long range contributions to any given point. Maybe a kernel method or local 2D spline fit?
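The footnote’s kernel alternative can be sketched as a weighted Nadaraya-Watson smoother. Each grid node gets a weighted average of station values, using Gaussian weights in great-circle distance multiplied by a per-station weight. The per-station weights (derived, say, from teletherm duration or temperature change), the 150 km bandwidth, and the function name are all hypothetical. The bandwidth would still need to be chosen, for example by cross-validation, which this sketch does not do. For the thin plate spline route, SciPy’s `RBFInterpolator` with `kernel="thin_plate_spline"` and a `smoothing` parameter is one option, though it does not select the smoothing by GCV.

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0

def kernel_surface(lon, lat, values, weights, grid_lon, grid_lat, bandwidth_km=150.0):
    """Weighted Gaussian-kernel (Nadaraya-Watson) surface of station values on a lon/lat grid.

    Returns an array of shape (len(grid_lat), len(grid_lon)). Grid nodes far from every
    station (relative to the bandwidth) can underflow to 0/0 and come back as NaN.
    """
    lon, lat = np.radians(lon), np.radians(lat)
    glon, glat = np.meshgrid(np.radians(grid_lon), np.radians(grid_lat))
    # Haversine great-circle distance, shape (n_grid_lat, n_grid_lon, n_stations)
    dlat = lat - glat[..., None]
    dlon = lon - glon[..., None]
    h = np.sin(dlat / 2) ** 2 + np.cos(glat[..., None]) * np.cos(lat) * np.sin(dlon / 2) ** 2
    dist = 2 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(np.clip(h, 0.0, 1.0)))
    k = np.asarray(weights, dtype=float) * np.exp(-0.5 * (dist / bandwidth_km) ** 2)
    return (k * np.asarray(values, dtype=float)).sum(axis=-1) / k.sum(axis=-1)
```

Unlike a thin plate spline, the Gaussian kernel’s influence dies off within a few bandwidths, which addresses the long-range-contribution concern in the footnote.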

There are lower (coldest) and upper (hottest) boundaries that have incredibly important effects on plant growth in general and on agriculture.