Bob links to the recently celebrated physics paper.

Check it out:

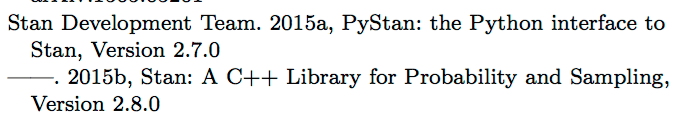

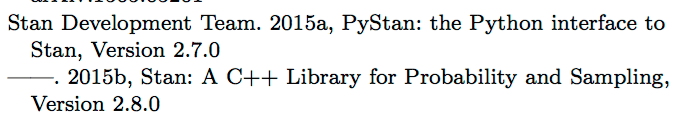

And in the references:

This is even better than being quoted in Private Eye!

P.S. More here.

Bob links to the recently celebrated physics paper.

Check it out:

And in the references:

This is even better than being quoted in Private Eye!

P.S. More here.

To be pedantic, this is a follow up analysis of GW150914 after a discovery was claimed using another analysis chain that did not include Stan. That said, it’s still awesome and hopefully Stan will continue to be useful to LIGO as the gravitational waves come rolling in!

Best to read over the specs of the primary searches first (https://dcc.ligo.org/public/0123/P1500269/012/LIGO-P1500269_GW150914_CBC_Search.pdf). But if Stan can handle a 4-parameter matched-filtering search on time series data sampled at 16k in near real-time, then I’d be happy to point you to the people that need to hear what you have to say. Though I do understand if you find annoying the fact that the parameter estimation team’s effort wrote their own versions of MCMC samplers from scratch.

Matt, has the LIGO team published an estimate of the probability GW150914 was a false positive? I’ve discovered there are many papers regarding this discovery that the information is spread over, but I have not yet seen that one.

For future reference: https://www.ligo.caltech.edu/page/detection-companion-papers

My short answer: kinda…

In section V of the detection paper from PRL, the authors discuss a computed quantity called the False Alarm Probability [FAP] (one or more noise events as strong as X during the analysis time). For the primary *unmodeled* transient search, this is quoted at < 2×10^-6. Two separate modeled CBC transient searches were run on this data, but the more conservative bound on the FAP is quoted in the detection paper, ala < 2×10^-7.

I personally cannot stand FAP as conceived as a detection threshold statistic for a variety of reasons but it seems to work… No doubt there are probably better ways to do this but the SOP currently in the field is what you see in the detection paper. The supplementary papers are filled with data quality consistency checks and Bayes parameter estimation runs, so don't think this discovery rests solely on a single statistic.

Cheers

Thanks for the link, without knowing how many papers there were it was getting overwhelming.

>”the authors discuss a computed quantity called the False Alarm Probability…I personally cannot stand FAP as conceived as a detection threshold statistic”

I agree that this is a problematic choice, FAP is another name for “p-value”. If there is one thing I know for sure, it is that p is the most misleading number.

Although there is a strong parallel between p-value and FAP, there are also differences. Radars have repeated trials—or continuous operation depending on how you look at it—and the FAP is more like a poisson arrival rate (reciprocal really).

I think the approach that the LIGO analysts used is quite common in radar and communications engineering—as you said it is more-or-less SOP in the field.

Consider constant false-alarm-rate radars (CFAR radars). https://en.wikipedia.org/wiki/Constant_false_alarm_rate where they manipulate the decision threshold in order to keep the rate of false alarms at an acceptable level.

There are some issues of human factors engineering that accompany use of this concept in radar systems—or did accompany use of this concept in the early days.

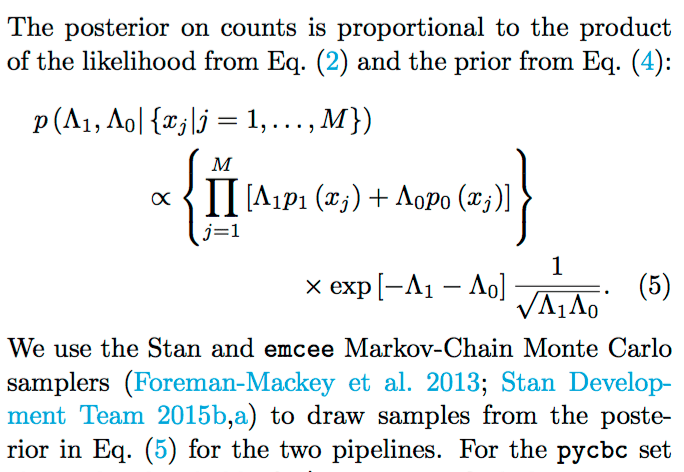

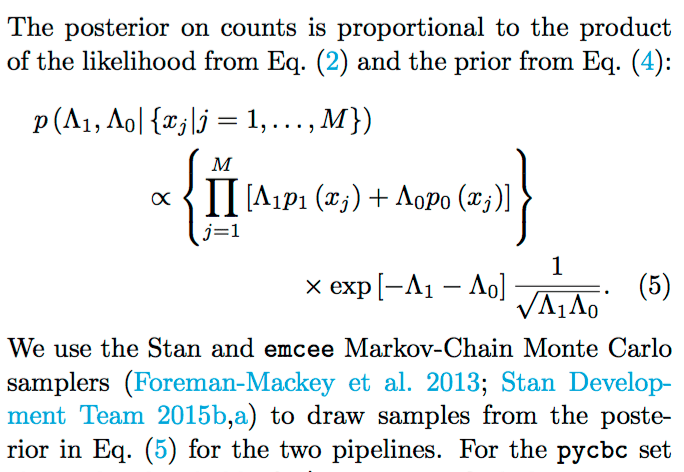

From the linked “Rates” paper:

“Previous estimates of the BBH merger rate based on electromagnetic observations and analytic population modeling…spans more than three orders of magnitude, from 0.1-300 Gpc-3 yr-1.”

The lower bound on the prior estimate of true signals may be near the “false alarm probability” (which has the same interpretation as a p-value). Once you take into account only 16 days of observation, range of less than 1 Gpc (range depends on the mass and detector sensitivity at the moment), signals lost due to various filtering that goes on, etc this value can get pretty low.

In such cases the “false alarm probability” leads to very different conclusions from “probability the signal is a false alarm”. Yea it’s unlikely this is was a spurious correlation, but perhaps even more unlikely they would detect something so quickly. I have no idea, since this issue seems to have been ignored.

This consideration is missing from papers I have seen, instead in eg the main (PRL) paper there is just a long discussion of “false alarm probability” that has no clear purpose (unless you have confused the two different probabilities…). There is no discussion of “probability the signal is a false alarm”, which is what they actually care about.

If I understand correctly the paper cited there, that range of estimates for the BBH merger rate can be translated to a “detection rate” of 0.4-1000 events per year. It doesn’t seem close to the reported “false alarm rate” of 0.000005 events per year.

“these estimates are also consistent with the broad range of rate predictions reviewed in Abadie et al. (2010) with only the low end ([less than] 1 Gpc-3 yr-1) of rate predictions being excluded.”

There it is already assumed that GW150914 was a real signal. I don’t understand why they didn’t perform the same analysis without that assumption, so there is no circular reasoning, and use it to estimate the probability the signal was a false positive.

– Anoneuoid @16/02/16-13:19

First on the back of the envelope estimates of event rates from signals and noise. Given an Monte Carlo simulated signal set, the mean effective volume for the two CBC searches are 0.130 and 0.21 Gpc^3 yr [table 2 of Rates paper]. If I took the lower bound on the estimated signal rate, the mean number of signals would be of the order 10^-2. And remember for the reported values from the two modeled searches, those were upper bounds on the FAP. So yeah, the probability of at least 1 signal at that strength and at least 1 noise coincidence aren’t close. The issue isn’t ignored, though maybe not discussed in the clearest fashion.

I am pretty confident that search and rates teams have not confused p(x|H_b) with p(H_b|x), so the intent was as it says it is. The FAP, as I can tell, is primarily used as a threshold detection statistic to justify further investigations. I’d prefer a Bayes factor B_(s,b) but as I mentioned before, there is a tradition for this sort of analysis. And well done Bob on picking out the heritage of this sort of thing as coming from RF signal processing.

>”Given an Monte Carlo simulated signal set, the mean effective volume for the two CBC searches are 0.130 and 0.21 Gpc^3 yr [table 2 of Rates paper]. If I took the lower bound on the estimated signal rate, the mean number of signals would be of the order 10^-2.”

Correct me if I’m wrong, but the prior rate estimates of 0.1-300 Gpc^-3 yr^-1 were for mergers of “steller mass black holes”, of which GW150914 is supposed to be an exceptionally large example (ie rare and easy to detect). So the rates for events with properties similar to GW150914 will be somewhat less. I don’t know if this would be 10x, 100x, or what.

They write (Rates paper): “GW150914 is unusually significant; only 8% of the astrophysical distribution of sources with a FAR 15 smaller than one per century will be more significant than GW150914.” So it is in the top 10% of an already exclusive club, but I don’t know how to convert this to use with the prior estimate.

>”The FAP, as I can tell, is primarily used as a threshold detection statistic to justify further investigations.”

I agree that this is fine, and do not even believe this signal was due to a spurious correlation. However, it does not justify many paragraphs in the main detection paper about FAP, while ruling out other real terrestrial sources got around one sentence. It was that emphasis that made me think there is some confusion here, perhaps not by the analysis teams but by someone editing the papers.

Another thing. The values for effective volume in that table seem to be based on using a cutoff of “FARs [less than] 1/century”. So the false alarm rate cutoff being used is 10^-2 events/yr, and your back of the napkin true positive rate is 10^-2 events/yr (which as noted above I think must be an overestimate). Not sure if I’m interpreting this correctly.

People writing their own samplers are often the hardest to convince to use other methods/code. But I am more than content that Stan is in the hands of Will and his crew — if anyone can show how successful Stan is it’s them.

“People writing their own samplers are often the hardest to convince to use other methods/code.”

Really? I worked for a marketing research firm for 6 years implementing a variety of Bayesian models for them, and although initially I was writing my own samplers, I jumped at the chance to automate the process once Stan became available.

I used to write my own samplers; I only expect to do that again if I can’t use Stan for something.

That’s awesome!

I hope the data becomes eventually. It’d be great to play with it and the Stan programs they used.

becomes [available] eventually

If you click the reference link I included in a previous comment and go to the “Use LIGO Data” link, you will find the data for this event. Plus there is a lot of nice code which was used to produce results you see in some of the papers.

This is fantastic! Is it possible to provide us the Stan programs used as well?

I imagine it would be possible but as only a former LIGO member, I can only point you in the right direction. The LOSC has a email address and they pass along the necessary software. Or you could poke someone at your local LIGO group (http://geco.markalab.org/ for those @Columbia).

Data release for event GW150914

https://losc.ligo.org/events/GW150914/

Dang, ninja’d by Matt W!

Nothing wrong with having the actual link in the comments. The data being available is in part an NSF directive that some mirrors the protocol for the many NASA supported telescopes. At some point the entirety of this short observing run will be open-data but I cannot recall what the lag will be. I am kind of curious what groups will try to tackle the data and attempt replicate or improve upon the results.

If anyone is really interested in seeing if anything is lurking deep in the noise, the entirety of the 2009-10 science run is available via the LOSC.