How large is that treatment effect, really?

Andrew Gelman, Department of Statistics and Department of Political Science, Columbia University

“Unbiased estimates” aren’t really unbiased, for a bunch of reasons, including aggregation, selection, extrapolation, and variation over time. Econometrics typically focuses on causal identification, with the goal of estimating “the” effect. But we typically care about individual effects (not “Does the treatment work?” but “Where and when does it work?” and “Where and when does it hurt?”). Estimating individual effects is relevant not only for individuals but also for generalizing to the population. For example, how do you generalize from an A/B test performed on a sample right now to possible effects on a different population in the future? Thinking about variation and generalization can change how we design and analyze experiments and observational studies. We demonstrate with examples in social science and public health.
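To make the generalization point concrete, here is a minimal simulation sketch in Python. Everything in it is an assumption made up for illustration: two subgroups labeled "young" and "old," treatment effects of +1.0 and -0.5, and a population that is 80% young in the experiment but 20% young in the future target population. None of these numbers come from a real study.

```python
import random
import statistics


def simulate_ab_test(n, share_young, effect_young, effect_old, rng):
    """Simulate a randomized A/B test in a population with two subgroups.

    Returns the difference in mean outcomes, treated minus control.
    """
    treated, control = [], []
    for _ in range(n):
        young = rng.random() < share_young
        effect = effect_young if young else effect_old
        baseline = rng.gauss(0.0, 1.0)       # outcome without treatment
        if rng.random() < 0.5:               # coin-flip assignment
            treated.append(baseline + effect)
        else:
            control.append(baseline)
    return statistics.fmean(treated) - statistics.fmean(control)


def population_average_effect(share_young, effect_young, effect_old):
    """Average effect in a population with the given subgroup mix."""
    return share_young * effect_young + (1 - share_young) * effect_old


rng = random.Random(0)

# Hypothetical subgroup effects: the treatment helps one group and hurts the other.
EFFECT_YOUNG, EFFECT_OLD = 1.0, -0.5

# The experiment is run on a sample that is 80% young.
sample_estimate = simulate_ab_test(20_000, 0.8, EFFECT_YOUNG, EFFECT_OLD, rng)
sample_truth = population_average_effect(0.8, EFFECT_YOUNG, EFFECT_OLD)

# The future target population is only 20% young.
target_truth = population_average_effect(0.2, EFFECT_YOUNG, EFFECT_OLD)

print(f"A/B estimate in the sample:        {sample_estimate:.2f}")
print(f"true average effect in the sample: {sample_truth:.2f}")
print(f"true average effect in the target: {target_truth:.2f}")
```

Under these assumptions the A/B estimate is close to the sample's own average effect, yet the average effect in the target population has the opposite sign. The estimate is "unbiased" only for a population that looks like the sample.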

“He had acquired his belief not by honestly earning it in patient investigation, but by stifling his doubts. And although in the end he may have felt so sure about it that he could not think otherwise, yet inasmuch as he had knowingly and willingly worked himself into that frame of mind, he must be held responsible for it.”

Ron Bloom points us to this wonderful article, “The Ethics of Belief,” by the mathematician William Clifford, also known for Clifford algebras. The article is related to some things I’ve written about evidence vs. truth (see here and here) but much more beautifully put. Here’s how it begins:

A shipowner was about to send to sea an emigrant-ship. He knew that she was old, and not overwell built at the first; that she had seen many seas and climes, and often had needed repairs. Doubts had been suggested to him that possibly she was not seaworthy. These doubts preyed upon his mind, and made him unhappy; he thought that perhaps he ought to have her thoroughly overhauled and refitted, even though this should put him to great expense. Before the ship sailed, however, he succeeded in overcoming these melancholy reflections. He said to himself that she had gone safely through so many voyages and weathered so many storms that it was idle to suppose she would not come safely home from this trip also. He would put his trust in Providence, which could hardly fail to protect all these unhappy families that were leaving their fatherland to seek for better times elsewhere. He would dismiss from his mind all ungenerous suspicions about the honesty of builders and contractors. In such ways he acquired a sincere and comfortable conviction that his vessel was thoroughly safe and seaworthy; he watched her departure with a light heart, and benevolent wishes for the success of the exiles in their strange new home that was to be; and he got his insurance-money when she went down in mid-ocean and told no tales.

What shall we say of him? Surely this, that he was verily guilty of the death of those men. It is admitted that he did sincerely believe in the soundness of his ship; but the sincerity of his conviction can in no wise help him, because he had no right to believe on such evidence as was before him. He had acquired his belief not by honestly earning it in patient investigation, but by stifling his doubts. And although in the end he may have felt so sure about it that he could not think otherwise, yet inasmuch as he had knowingly and willingly worked himself into that frame of mind, he must be held responsible for it.

Clifford’s article is from 1877!

Bloom writes:

One can go over this in two passes. One pass may be read as “moral philosophy.”

But the second pass helps one think a bit about how one ought to make precise the concept of ‘relevance’ in “relevant evidence.”

Specifically (this is remarkably deficient in the Bayesian corpus I find) I would argue that when we say “all probabilities are relative to evidence” and write the symbolic form straightaway P(A|E) we are cheating. We have not faced the fact — I think — that not every “E” has any bearing (“relevance”) one way or another on A and that it is *inadmissible* to combine the symbols because it is so easy to write ’em down. Perhaps one evades the problem by saying, well what do you *think* is the case. Perhaps you might say, “I think that E is irrelevant if P(A|E) = P(A|~E).” But that begs the question: it says in effect that *both* E and ~E can be regarded as “evidence” for A. I argue that easily leads to nonsense. To regard any utterance or claim as “evidence” for any other utterance or claim leads to absurdities. Here for instance:

A = “Water ice of sufficient quantity to maintain a lunar base will be found in the spectral analysis of the plume of the crashed lunar polar orbiter.”

E = If there are martians living on the Moon of Jupiter, Europa, then they celebrate their Martian Christmas by eating Martian toast with Martian jam.

Is E evidence for A? is ~E evidence for A? Is any far-fetched hypothetical evidence for any other hypothetical whatsoever?

Just to provide some “evidence” that I am not being entirely facetious about the Lunar orbiter; I attach also a link to now much superannuated item concerning that very intricate “experiment” — I believe in the end there was some spectral evidence turned up consistent with something like a teaspoon’s worth of water-ice per 25 square Km.

P.S. Just to make the connection super-clear, I’d say that Clifford’s characterization, “He had acquired his belief not by honestly earning it in patient investigation, but by stifling his doubts. And although in the end he may have felt so sure about it that he could not think otherwise, yet inasmuch as he had knowingly and willingly worked himself into that frame of mind, he must be held responsible for it,” is an excellent description of those Harvard professors who notoriously endorsed the statement, “the replication rate in psychology is quite high—indeed, it is statistically indistinguishable from 100%.” Also a good match to those Columbia administrators who signed off on those U.S. News numbers. In neither case did a ship go down; it’s the same philosophical principle but lower stakes. Just millions of dollars involved, no lives lost.

As Isaac Asimov put it, “A robot may not injure a human being or, through inaction, allow a human being to come to harm.” Sometimes that inaction is pretty damn active, when a shipowner or a scientific researcher or a university administrator puts in some extra effort to avoid looking at some pretty clear criticisms.

Here’s something you should do when beginning a project, and in the middle of a project, and in the end of the project: Clearly specify your goals, and also specify what’s not in your goal set.

Here’s something from Witold’s slides on baggr, an R package (built on Stan) that does hierarchical modeling for meta-analysis:

Overall goals:

1. Implement all basic meta-analysis models and tools

2. Focus on accessibility, model criticism and comparison

3. Help people avoid basic mistakes

4. Keep the framework flexible and extend to more models

(Probably) not our goal:

5. Build a package for people who already build their models in Stan

I really like this practice of specifying goals. This is so basic that it seems like we should always be doing it—but so often we don’t! Also I like the bit where he specifies something that’s not in his goals.

Again, this all seems so natural when we see it, but it’s something we don’t usually do. We should.

People have needed rituals to turn data into truth for many years. Why would we be surprised if many people now need procedural reforms to work?

This is Jessica. How to weigh metascience or statistical reform proposals has been on my mind more than usual lately as a result of looking into and blogging about the Protzko et al. paper on rigor-enhancing practices. Seems it’s also been on Andrew’s mind.

Like Andrew, I have been feeling “bothered by the focus on procedural/statistical ‘rigor-enhancing practices’ of ‘confirmatory tests, large sample sizes, preregistration, and methodological transparency’” because I suspect researchers are taking it to heart that these steps will be enough to help them produce highly informative experiments.

Yesterday I started thinking about it via an analogy to writing. I heard once that if you’re trying to help someone become a better writer, you should point out no more than three classes of things they’re doing wrong at one time, because too much new information can be self-defeating.

Imagine you’re a writing consultant and people bring you their writing and you advise them on how to make it better. Keeping in mind the need to not overwhelm, initially maybe you focus on the simple things that won’t make them a great writer, but are easy to teach. “You’re doing comma splices, your transitions between paragraphs suck, you’re doing citation wrong.” You talk up how important these things are to get right to get them motivated, and you bite your tongue when it comes to all the other stuff they need help with to avoid discouraging them.

Say the person goes away and fixes the three things, then comes back to you with some new version of what they’ve written. What do you do now? Naturally, you give them three more things. Over time as this process repeats, you eventually get to the most nuanced stuff that is harder to get right but ultimately more important to their success as a writer.

But this approach presupposes that your audience either intrinsically cares enough about improving to keep coming back, or that they have some outside reason they must keep coming back, like maybe they are a Ph.D. student and their advisor is forcing their hand. What if you can never be sure when someone walks in the door that they will come back a second time after you give your advice? In fact, what if the people who really need your help with their writing are bad writers because they fixated on the superficial advice they got in middle school or high school that boils good writing down to a formula, and considered themselves done? And now they’re looking for the next quick fix, so they can go back to focusing on whatever they are actually interested in and treating writing as a necessary evil?

Probably they will latch onto the next three heuristics you give them and consider themselves done. So if we suspect the people we are advising will be looking for easy answers, it seems unlikely that we are going to get them there using the approach above where we give them three simple things and we talk up the power of these things to make them good writers. Yet some would say this is what mainstream open science is doing, by giving people simple procedural reforms (just preregister, just use big samples, etc) and talking up how they help eliminate replication problems.

I like writing as an analogy for doing experimental social science because both are a kind of wicked problem where there are many possible solutions, and the criteria for selecting between them are nuanced. There are simple procedural things that are easier to point out, like the comma splices or missing transitions between paragraphs in writing, or not having a big enough sample or invalidating your test by choosing it post hoc in experimental science. But avoiding mistakes at this level is not going to make you a good writer, just like enacting simple procedural heuristics is not going to make you a good experimentalist or modeler. For that you need to adopt an orientation that acknowledges the inherent difficulty of the task and prompts you to take a more holistic approach.

Figuring out how to encourage that is obviously not easy. But one reason that starting with the simple procedural stuff (or broadly applicable stuff, as Nosek implies the “rigor-enhancing practices” are) seems insufficient to me is that I don’t necessarily think there’s a clear pathway from the simple formulaic stuff to the deeper stuff, like the connection between your theory and what you are measuring and how you are measuring it and how you specify and select among competing models. I actually think it makes more sense to go the opposite way, from why inference from experimental data is necessarily very hard as a result of model misspecification, effect heterogeneity, measurement error, etc. to the ingredients that have to be in place for us to even have a chance, like sufficient sample size and valid confirmatory tests. The problem is that one can understand the concepts of preregistration or sufficient sample size while still having a relatively simple mental model of effects as real or fake and questionable research practices as the main source of issues.

In my own experience, the more I’ve thought about statistical inference from experiments over the years, the more seriously I take heterogeneity and underspecification/misspecification, to the point that I’ve largely given up doing experimental work. This is an extreme outcome of course, but I think we should expect that the more one recognizes how hard the job really is, the less likely one is to firehose the literature in one’s field with a bunch of careless dead-in-the-water style studies. As work by Berna Devezer and colleagues has pointed out, open science proposals are often subject to the same kinds of problems such as overconfident claims and reliance on heuristics that contributed to the replication crisis in the first place. This solution-ism (a mindset I’m all too familiar with as a computer scientist) can be counterproductive.

Hey, some good news for a change! (Child psychology and Bayes)

Erling Rognli writes:

I just wanted to bring your attention to a positive stats story, in case you’d want to feature it on the blog. A major journal in my field (the Journal of Child Psychology and Psychiatry) has over time taken a strong stance for using Bayesian methods, publishing an editorial in 2016 advocating switching to Bayesian methods:

And recently following up with inviting myself and some colleagues to write a brief introduction to Bayesian methods (where we of course recommend Stan):

I think this consistent editorial support really matters for getting risk-averse researchers to start using new methods, so I think the editors of the JCPP deserve recognition for contributing to improving statistical practice in this field.

No reason to think that Bayes and Stan will, by themselves, transform child psychology, but I think it’s a step in the right direction. As Rubin used to say, one advantage of Bayes is that the work you do to set up the model represents a bridge between experiment, data, and scientific understanding. It’s getting you to think about the right questions.

Evilicious 3: Face the Music

A correspondent forwards me this promotional material that appeared in his inbox:

Hello hello, I am happy to announce that my new book MISBELIEF is out today!

Do you have a friend or family member who changed in some dramatic ways in the last few years? So, much so that you no longer understand them? Over the last few years I have been on a journey into some of the darkest corners of the internet, trying to make sense of what was happening around us. In MISBELIEF, which is out this week, I weave my personal story and research in an effort to shed light on this complex and important process. Misbeliefs are not just about other people, they are also about our own beliefs. This book will allow you to question your own beliefs, and how well you really know what you think you know. More generally, and important for society, this book is also about trust, the trust crisis we are in, and what it means for us as we go into the next election season. You can learn more about the book here and here. If you decide you want to read the book, this page includes an option to order a copy for yourself + a copy to be donated to an educator. The book can also be found at Amazon, BAM, or Barnes & Noble.

And if you like the book, please leave a review on Amazon – it will help other people find the book.

I hope you enjoy the book.

Irrationally yours,

Dan

“Misbeliefs are not just about other people, they are also about our own beliefs.” Indeed.

I wonder if this new book includes the shredder story.

P.S. The book has blurbs from Yuval Harari, Arianna Huffington, and Michael Shermer (the professional skeptic who assures us that he has a haunted radio). This thing where celebrities stick together . . . it’s nuts!

P.P.S. The good news is that there’s already some new material for the eventual sequel. And it’s “preregistered”! What could possibly go wrong?

What is the prevalence of bad social science?

Someone pointed me to this post from Jonatan Pallesen:

Frequently, when I [Pallesen] look into a discussed scientific paper, I find out that it is astonishingly bad.

• I looked into Claudine Gay’s 2001 paper to check a specific thing, and I find out that research approach of the paper makes no sense. (https://x.com/jonatanpallesen/status/1740812627163463842)

• I looked into the famous study about how blind auditions increased the number of women in orchestras, and found that the only significant finding is in the opposite direction. (https://x.com/jonatanpallesen/status/1737194396951474216)

• The work of Lisa Cook was being discussed because of her nomination to the fed. @AnechoicMedia_ made a comment pointing out a potential flaw in her most famous study. And indeed, the flaw was immediately obvious and fully disqualifying. (https://x.com/jonatanpallesen/status/1738146566198722922)

• The study showing judges being very affected by hunger? Also useless. (https://x.com/jonatanpallesen/status/1737965798151389225)

These studies do not have minor or subtle flaws. They have flaws that are simple and immediately obvious. I think that anyone, without any expertise in the topics, can read the linked tweets and agree that yes, these are obvious flaws.

I’m not sure what to conclude from this, or what should be done. But it is rather surprising to me to keep finding this.

My quick answer is, at some point you should stop being surprised! Disappointed, maybe, just not surprised.

A key point is that these are not just any papers, they’re papers that have been under discussion for some reason other than their potential problems. Pallesen, or any of us, doesn’t have to go through Psychological Science and PNAS every week looking for the latest outrage. He can just sit in one place, passively consume the news, and encounter a stream of prominent published research papers that have clear and fatal flaws.

Regular readers of this blog will recall dozens more examples of high-profile disasters: the beauty-and-sex-ratio paper, the ESP paper and its even more ridiculous purported replications, the papers on ovulation and clothing and ovulation and voting, himmicanes, air rage, ages ending in 9, the pizzagate oeuvre, the gremlins paper (that was the one that approached the platonic ideal of more corrections than data points), the ridiculously biased estimate of the effects of early-childhood intervention, the air pollution in China paper and all the other regression discontinuity disasters, much of the nudge literature, the voodoo study, the “out of Africa” paper, etc. As we discussed in the context of that last example, all the way back in 2013 (!), the problem is closely related to these papers appearing in top journals:

The authors have an interesting idea and want to explore it. But exploration won’t get you published in the American Economic Review etc. Instead of the explore-and-study paradigm, researchers go with assert-and-defend. They make a very strong claim and keep banging on it, defending their claim with a bunch of analyses to demonstrate its robustness. . . . High-profile social science research aims for proof, not for understanding—and that’s a problem. The incentives favor bold thinking and innovative analysis, and that part is great. But the incentives also favor silly causal claims. . . .

So, to return to the question in the title of this post, how often is this happening? It’s hard for me to say. On one hand, ridiculous claims get more attention; we don’t spend much time talking about boring research of the “Participants reported being hungrier when they walked into the café (mean = 7.38, SD = 2.20) than when they walked out [mean = 1.53, SD = 2.70, F(1, 75) = 107.68, P < 0.001]" variety. On the other hand, do we really think that high-profile papers in top journals are that much worse than the mass of published research?

I expect that some enterprising research team has done some study, taking a random sample of articles published in some journals and then looking at each paper in detail to evaluate its quality. Without that, we can only guess, and I don’t have it in me to hazard a percentage. I’ll just say that it happens a lot—enough so that I don’t think it makes sense to trust social-science studies by default.

My correspondent also pointed me to a recent article in Harvard’s student newspaper, “I Vote on Plagiarism Cases at Harvard College. Gay’s Getting off Easy,” by “An Undergraduate Member of the Harvard College Honor Council,” who writes:

Let’s compare the treatment of Harvard undergraduates suspected of plagiarism with that of their president. . . . A plurality of the Honor Council’s investigations concern plagiarism. . . . when students omit quotation marks and citations, as President Gay did, the sanction is usually one term of probation — a permanent mark on a student’s record. A student on probation is no longer considered in good standing, disqualifying them from opportunities like fellowships and study-abroad programs. Good standing is also required to receive a degree.

What is striking about the allegations of plagiarism against President Gay is that the improprieties are routine and pervasive. She is accused of plagiarism in her dissertation and at least two of her 11 journal articles. . . .

In my experience, when a student is found responsible for multiple separate Honor Code violations, they are generally required to withdraw — i.e., suspended — from the College for two semesters. . . . We have even voted to suspend seniors just about to graduate. . . .

There is one standard for me and my peers and another, much lower standard for our University’s president.

This echoes what Jonathan Bailey has written here and here at his blog Plagiarism Today:

Schools routinely hold their students to a higher and stricter standard when it comes to plagiarism than they handle their faculty and staff. . . .

To give an easy example. In October 2021, W. Franklin Evans, who was then the president of West Liberty University, was caught repeatedly plagiarizing in speeches he was giving as President. Importantly, it wasn’t past research that was in dispute, it was the work he was doing as president.

However, though the board did vote unanimously to discipline him, they also voted against termination and did not clarify what discipline he was receiving.

He was eventually let go as president, but only after his contract expired two years later. It’s difficult to believe that a student at the school, if faced with a similar pattern of plagiarism in their coursework, would be given that same chance. . . .

The issue also isn’t limited to higher education. In February 2020, Katy Independent School District superintendent Lance Hindt was accused of plagiarism in his dissertation. Though he eventually resigned, the district initially threw their full support behind Hindt. This included a rally for Hindt that was attended by many of the teachers in the district.

Even after he left, he was given two years of salary and had $25,000 set aside for him if he wanted to file a defamation lawsuit.

There are lots and lots of examples of prominent faculty committing scholarly misconduct and nobody seems to care—or, at least, not enough to do anything about it. In my earlier post on the topic, I mentioned the Harvard and Yale law professors, the USC medical school professor, the Princeton history professor, the George Mason statistics professor, and the Rutgers history professor, none of whom got fired. And I’d completely forgotten about the former president of the American Psychological Association and editor of Perspectives on Psychological Science who misrepresented work he had published and later was forced to retract—but his employer, Cornell University, didn’t seem to care. And the University of California professor who misrepresented data and seems to have suffered no professional consequences. And the Stanford professor who gets hyped by his university while promoting miracle cures and bad studies. And the dean of engineering at the University of Nevada. Not to mention all the university administrators and football coaches who misappropriate funds and then are quietly allowed to leave on golden parachutes.

Another problem is that we rely on the news media to keep these institutions accountable. We have lots of experience with universities (and other organizations) responding to problems by denial; the typical strategy appears to be to lie low and hope the furor will go away, which typically happens in the absence of lots of stories in the major news media. But . . . the news media have their own problems: little problems like NPR consistently hyping junk science and big problems like Fox pushing baseless political conspiracy theories. And if you consider podcasts and Ted talks to be part of “the media,” which I think they are—I guess as part of the entertainment media rather than the news media, but the dividing line is not sharp—then, yeah, a huge chunk of the media is not just susceptible to being fooled by bad science and indulgent of academic misconduct, it actually relies on bad science and academic misconduct to get the wow! stories that bring the clicks.

To return to the main thread of this post: by sanctioning students for scholarly misconduct but letting its faculty and administrators off the hook, Harvard is, unfortunately, following standard practice. The main difference, I guess, is that “president of Harvard” is more prominent than “Princeton history professor” or “Harvard professor of constitutional law” or “president of West Liberty University” or “president of the American Psychological Association” or “UCLA medical school professor” or all the others. The story of the Harvard president stays in the news, while those others all receded from view, allowing the administrators at those institutions to follow the usual plan of minimizing the problem, saying very little, and riding out the storm.

Hey, we just got sidetracked into a discussion of plagiarism. This post was supposed to be about bad research. What can we say about that?

Bad research is different than plagiarism. Obviously, students don’t get kicked out for doing bad research, using wrong statistical methods, losing their data, making claims that defy logic and common sense, claiming to modify a paper shredder that has never existed, etc etc etc. That’s the kind of behavior that, if your final paper also has formatting problems, will get you slammed with a B grade and that’s about it.

When faculty are found to have done bad research, the usual reaction is not to give them a B or to do the administrative equivalent—lowering their salary, perhaps?, or removing them from certain research responsibilities, maybe making them ineligible to apply for grants?—but rather to pretend that nothing happened. The idea is that, once an article has been published, you draw a line under it and move onward. It’s considered in bad taste—Javert-like, even!—to go back and find flaws in papers that are already resting comfortably in someone’s C.V. As Pallesen notes, so often when we do go back and look at those old papers, we find serious flaws. Which brings us to the question in the title of this post.

P.S. The paper by Claudine Gay discussed by Pallesen is here; it was published in 2001. For more on the related technical questions involving the use of ecological regression, I recommend this 2002 article by Michael Herron and Kenneth Shotts (link from Pallesen) and my own article with David Park, Steve Ansolabehere, Phil Price, and Lorraine Minnite, “Models, assumptions, and model checking in ecological regressions,” from 2001.

“AI” as shorthand for turning off our brains. (This is not an anti-AI post; it’s a discussion of how we think about AI.)

Before going on, let me emphasize that, yes, modern AI is absolutely amazing—self-driving cars, machines that can play ping-pong, chessbots, computer programs that write sonnets, the whole deal! Call it machine intelligence or whatever, it’s amazing.

What I’m getting at in this post is the way in which attitudes toward AI fit into existing practices in science and other aspects of life.

This came up recently in comments:

“AI” does not just refer to a particular set of algorithms or computer programs but also to the attitude in which an algorithm or computer program is idealized to the extent that people think it’s ok for them to rely on it and not engage their brains.

Some examples of “AI” in that sense of the term:

– When people put a car on self-driving mode and then disengage from the wheel.

– When people send out a memo produced by a chatbot without reading and understanding it first.

– When researchers use regression discontinuity analysis or some other identification strategy and don’t check that their numbers make any sense at all.

– When journal editors see outrageous claims backed by “p less than 0.05” and then just push the Publish button.

“AI” is all around us, if you just know where to look!

One thing that interests me here is how current expectations of AI in some ways match and in some ways go beyond past conceptions in science fiction. The chatbot, for example, is pretty similar to all those talking robots, and I guess you could imagine a kid in such a story asking his robot to do his homework for him. Maybe the difference is that the robot is thought to have some sort of autonomy, along with which comes some idiosyncratic fallibility (if only that the robot is too “logical” to always see clearly to the solution of a problem), whereas an AI is considered more of an institutional product with some sort of reliability, in the same sense that every bottle of Coca-Cola is the same. Maybe that’s the connection to naive trust in standardized statistical methods.

This also relates to the idea that humans used to be thought of as the rational animal but now are viewed as irrational computers. In the past, our rationality was considered to be what separates us from the beasts, either individually or through collective action, as in Locke and Hobbes. If the comparison point is animals, then our rationality is a real plus! Nowadays, though, it seems almost the opposite: if the comparison point is a computer, then what makes us special is not our rationality but our emotions.

There is no golden path to discovery. One of my problems with all the focus on p-hacking, preregistration, harking, etc. is that I fear that it is giving the impression that all will be fine if researchers just avoid “questionable research practices.” And that ain’t the case.

Following our recent post on the latest Dishonestygate scandal, we got into a discussion of the challenges of simulating fake data and performing a pre-analysis before conducting an experiment.

You can see it all in the comments to that post—but not everybody reads the comments, so I wanted to repeat our discussion here. Especially the last line, which I’ve used as the title of this post.

Raphael pointed out that it can take some work to create a realistic simulation of fake data:

Do you mean to create a dummy dataset and then run the preregistered analysis? I like the idea, and I do it myself, but I don’t see how this would help me see if the endeavour is doomed from the start? I remember your post on the beauty-and-sex ratio, which proved that the sample size was far too small to find an effect of such small magnitude (or was it in the Type S/Type M paper?). I can see how this would work in an experimental setting – simulate a bunch of data sets, do your analysis, compare it to the true effect of the data generation process. But how do I apply this to observational data, especially with a large number of variables (number of interactions scales in O(p²))?

I elaborated:

Yes, that’s what I’m suggesting: create a dummy dataset and then run the preregistered analysis. Not the preregistered analysis that was used for this particular study, as that plan is so flawed that the authors themselves don’t seem to have followed it, but a reasonable plan. And that’s kind of the point: if your pre-analysis plan isn’t just a bunch of words but also some actual computation, then you might see the problems.

In answer to your second question, you say, “I can see how this would work in an experimental setting,” and we’re talking about an experiment here, so, yes, it would’ve been better to have simulated data and performed an analysis on the simulated data. This would require the effort of hypothesizing effect sizes, but that’s a bit of effort that should always be done when planning a study.
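To make the experimental case concrete, here is a minimal sketch of what "simulate data and analyze the simulated data" could look like. Everything specific in it is an assumption for illustration: the function names, the normally distributed outcomes, the hypothesized effect of 0.1 standard deviations, the 50 people per arm, and the 1.96-standard-error threshold. It reports the share of simulated studies that reach significance, and, among those, how often the sign is wrong and how much the magnitude is exaggerated.

```python
import math
import random
import statistics


def simulate_experiment(n_per_arm, true_effect, sd, rng):
    """Simulate one two-arm experiment; return (estimate, standard error)."""
    control = [rng.gauss(0.0, sd) for _ in range(n_per_arm)]
    treated = [rng.gauss(true_effect, sd) for _ in range(n_per_arm)]
    estimate = statistics.fmean(treated) - statistics.fmean(control)
    se = math.sqrt(
        statistics.variance(treated) / n_per_arm
        + statistics.variance(control) / n_per_arm
    )
    return estimate, se


def design_analysis(n_per_arm, true_effect, sd, n_sims=2000, seed=1):
    """Repeat the fake experiment and summarize the 'significant' results."""
    rng = random.Random(seed)
    significant = []
    for _ in range(n_sims):
        est, se = simulate_experiment(n_per_arm, true_effect, sd, rng)
        if abs(est) > 1.96 * se:
            significant.append(est)
    power = len(significant) / n_sims
    if not significant:
        return {"power": power, "type_s": None, "type_m": None}
    # Share of significant estimates with the wrong sign.
    type_s = sum(1 for e in significant if e * true_effect < 0) / len(significant)
    # Average exaggeration of magnitude among significant estimates.
    type_m = statistics.fmean([abs(e) for e in significant]) / abs(true_effect)
    return {"power": power, "type_s": type_s, "type_m": type_m}


# Hypothesized (assumed) design: small effect, modest sample size.
result = design_analysis(n_per_arm=50, true_effect=0.1, sd=1.0)
print(result)
```

The point of running something like this before collecting data is that the hard part, writing down a hypothesized effect size and noise level, has to be done explicitly rather than left as words in a plan.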

For an observational study, you can still simulate data; it just takes more work! One approach I’ve used, if I’m planning to fit data predicting some variable y from a bunch of predictors x, is to get the values of x from some pre-existing dataset, for example an old survey, and then just do the simulation part for y given x.
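Here is a rough sketch of that last approach, assuming a single predictor and a linear model with normal noise. The list `existing_x` is a placeholder standing in for values read from a pre-existing dataset; the intercept, slope, noise level, and function names are all hypothetical choices made for the illustration.

```python
import random
import statistics

# Placeholder for predictor values taken from a pre-existing dataset (for
# example, ages from an old survey). In practice these would be read from a
# file; the numbers here are made up for the sketch.
existing_x = [23, 35, 41, 29, 52, 67, 38, 45, 31, 58, 26, 49, 62, 34, 40]


def simulate_y(x_values, intercept, slope, noise_sd, rng):
    """Simulate y given the fixed x values under an assumed linear model."""
    return [intercept + slope * x + rng.gauss(0.0, noise_sd) for x in x_values]


def ols_slope(x_values, y_values):
    """Least-squares slope for a single predictor."""
    x_bar = statistics.fmean(x_values)
    y_bar = statistics.fmean(y_values)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(x_values, y_values))
    sxx = sum((x - x_bar) ** 2 for x in x_values)
    return sxy / sxx


rng = random.Random(2024)
assumed_slope = 0.05  # hypothesized effect, an assumption of the simulation

# Keep x fixed, redraw y many times, and refit each time.
slopes = [
    ols_slope(existing_x, simulate_y(existing_x, 1.0, assumed_slope, 2.0, rng))
    for _ in range(1000)
]
mean_slope = statistics.fmean(slopes)
sd_slope = statistics.stdev(slopes)
print(f"assumed slope {assumed_slope}, mean estimate {mean_slope:.3f}, "
      f"sd of estimates {sd_slope:.3f}")
```

Comparing the spread of the estimates to the assumed slope gives a quick read on whether the planned analysis could plausibly recover an effect of that size from data with this particular distribution of x.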

Raphael replied:

Maybe not the silver bullet I had hoped for, but now I believe I understand what you mean.

To which I responded:

There is no silver bullet; there is no golden path to discovery. One of my problems with all the focus on p-hacking, preregistration, harking, etc. is that I fear that it is giving the impression that all will be fine if researchers just avoid “questionable research practices.” And that ain’t the case.

Again, this is not a diss on preregistration. Preregistration does one thing; it’s not intended to fix bad aspects of the culture of science such as the idea that you can gather a pile of data, grab some results, declare victory, and go on the TED talk circuit based only on the very slender bit of evidence that you seem to have been able to reject the hypothesis that the data came from a specific random number generator. That line of reasoning, where rejection of straw-man null hypothesis A is taken as evidence in favor of preferred alternative B, is wrong, but it’s not preregistration’s fault that people think that way!

P-hacking can be bad (but the problem here, in my view, is not in performing multiple analyses but rather in reporting only one of them rather than analyzing them all together); various questionable research practices are, well, questionable; and preregistration can help with that, either directly (by motivating researchers to follow a clear plan) or indirectly (by allowing outsiders to see problems in post-publication review, as here).

I am, however, bothered by the focus on procedural/statistical “rigor-enhancing practices” of “confirmatory tests, large sample sizes, preregistration, and methodological transparency.” Again, the problem is if researchers mistakenly think that following such advice will place them back on that nonexistent golden path to discovery.

So, again, I recommend making assumptions, simulating fake data, and analyzing these data as a way of constructing a pre-analysis plan, before collecting any data. That won’t put you on the golden path to discovery either!

All I can offer you here is blood, toil, tears and sweat, along with the possibility that a careful process of assumptions/simulation/pre-analysis will allow you to avoid disasters such as this ahead of time, thus avoiding the consequences of: (a) fooling yourself into thinking you’ve made a discovery, (b) wasting the time and effort of participants, coauthors, reviewers, and postpublication reviewers (that’s me!), and (c) filling the literature with junk that will later be collected in a GIGO meta-analysis and promoted by the usual array of science celebrities, podcasters, and NPR reporters.

Aaaaand . . . the time you’ve saved from all of that could be repurposed into designing more careful experiments with clearer connections between theory and measurement. Not a glide along the golden path to a discovery; more of a hacking through the jungle of reality to obtain some occasional glimpses of the sky.

It’s Ariely time! They had a preregistration but they didn’t follow it.

I have a story for you about a success of preregistration. Not quite the sort of success that you might be expecting—not a scientific success—but a kind of success nonetheless.

It goes like this. An experiment was conducted. It was preregistered. The results section was written up in a way that reads as if the experiment worked as planned. But if you go back and forth between the results section and the preregistration plan, you realize that the purportedly successful results did not follow the preregistration plan. They’re just the usual story of fishing and forking paths and p-hacking. The preregistration plan was too vague to be useful, also the authors didn’t even bother to follow it—or, if they did follow it, they didn’t bother to write up the results of the preregistered analysis.

As I’ve said many times before, there’s no reason that preregistration should stop researchers from doing further analyses once they see their data. The problem in this case is that the published analysis was not well justified either from a statistical or a theoretical perspective, nor was it in the preregistration. Its only value appears to be as a way for the authors to spin a story around a collection of noisy p-values.

On the minus side, the paper was published, and nowhere in the paper does it say that the statistical evidence they offer from their study does not come from the preregistration. In the abstract, their study is described as “pre-registered,” which isn’t a lie (there’s a preregistration plan right there on the website), but it’s misleading, given that the preregistration does not line up with what’s in the paper.

On the plus side, outside readers such as ourselves can see the paper and the preregistrations and draw our own conclusions. It’s easier to see the problems with p-hacking and forking paths when the analysis choices are clearly not in the preregistration plan.

The paper

The Journal of Experimental Social Psychology recently published an article, “How pledges reduce dishonesty: The role of involvement and identification,” by Eyal Peer, Nina Mazar, Yuval Feldman, and Dan Ariely.

I had no idea that Ariely is still publishing papers on dishonesty! It says that data from this particular paper came from online experiments. Nothing involving insurance records or paper shredders or soup bowls or 80-pound rocks . . . It seems likely that, in this case, the experiments actually happened and that the datasets came from real people and have not been altered.

And the studies are preregistered, with the preregistration plans all available on the paper’s website.

I was curious about that. The paper had 4 studies. I just looked at the first one, which already took some effort on my part. The rest of you can feel free to look at Studies 2, 3, and 4.

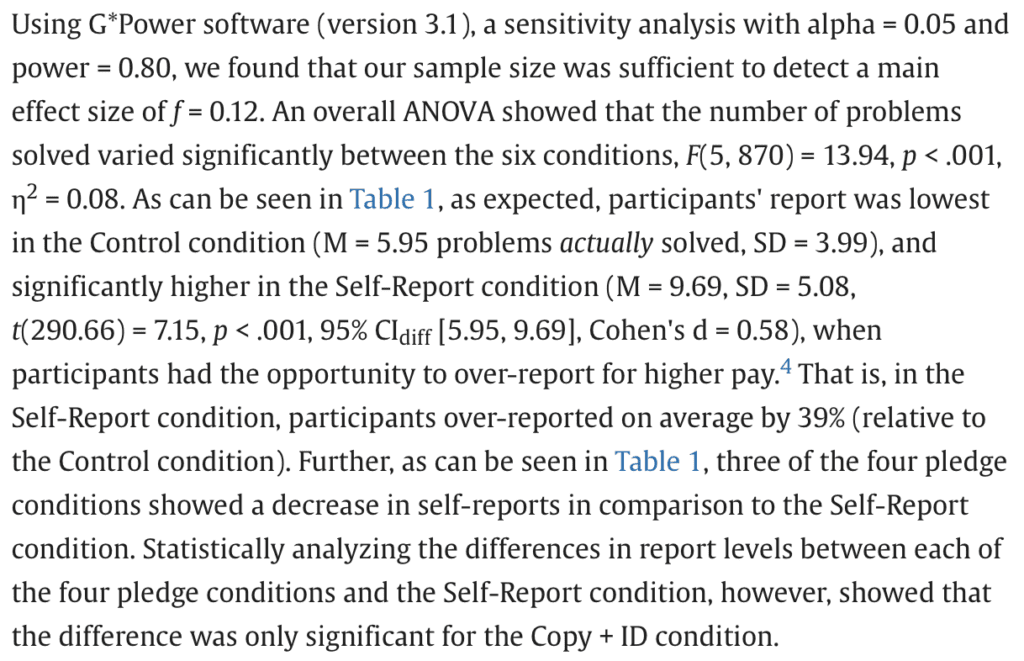

The results section and the preregistration

From the published paper:

The first study examined the effects of four different honesty pledges that did or did not include a request for identification and asked for either low or high involvement in making the pledge (fully-crossed design), and compared them to two conditions without any pledge (Control and Self-Report).

There were six conditions: one control (with no possibility to cheat), a baseline treatment (possibility and motivation to cheat and no honesty pledge), and four different treatments with honesty pledges.

This is what they reported for their primary outcome:

And this is how they summarize in their discussion section:

Interesting, huh?

Now let’s look at the relevant section of the preregistration:

Compare that to what was done in the paper:

– They did the Anova, but that was not relevant to the claims in the paper. The Anova included the control condition, and nobody’s surprised that, when you give people the opportunity and motivation to cheat, some people will cheat. That was not the point of the paper. It’s fine to do the Anova; it’s just more of a manipulation check than anything else.

– There’s something in the preregistration about a “cheating gap” score, which I did not see in the paper. But if we define A to be the average outcome under the control, B to be the average outcome under the baseline treatment, and C, D, E, F to be the averages under the other four treatments, then I think the preregistration is saying they’ll define the cheating gap as B-A, and then compare this to C-A, D-A, E-A, and F-A. This is mathematically the same as looking at C-B, D-B, E-B, and F-B, which is what they do in the paper.

– The article jumps back and forth between different statistical summaries: “three of the four pledge conditions showed a decrease in self-reports . . . the difference was only significant for the Copy + ID condition.” It’s not clear what to make of it. They’re using statistical significance as evidence in some way, but the preregistration plan does not make it clear what comparisons would be done, how many comparisons would be made, or how they would be summarized.

– The preregistration plan says, “We will replicate the ANOVAs with linear regressions with the Control condition or Self-Report conditions as baseline.” I didn’t see any linear regressions in the results for this experiment in the published paper.

– The preregistration plan says, “We will also examine differences in the distribution of the percent of problems reported as solved between conditions using Kolmogorov–Smirnov tests. If we find significant differences, we will also examine how the distributions differ, specifically focusing on the differences in the percent of “brazen” lies, which are defined as the percent of participants who cheated to a maximal, or close to a maximal, degree (i.e., reported more than 80% of problems solved). The differences on this measure will be tested using chi-square tests.” I didn’t see any of this in the paper either! Maybe this is fine, because doing all these tests doesn’t seem like a good analysis plan to me.

What should we make of all the analyses stated in the preregistration plan that were not in the paper? Since these analyses were preregistered, I can only assume the authors performed them. Maybe the results were not impressive and so they weren’t included. I don’t know; I didn’t see any discussion of this in the paper.

– The preregistration plan says, “Lastly, we will explore interactions effects between the condition and demographic variables such as age and gender using ANOVA and/or regressions.” They didn’t report any of that either! Also there’s the weird “and/or” in the preregistration, which gives the researchers some additional degrees of freedom.
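For the “cheating gap” item in the list above, the equivalence can be written out in one line. Using the same notation (A for the control average, B for the baseline treatment, C for one of the pledge conditions), and reading the preregistration the way I did, the control average simply cancels:

```latex
\begin{aligned}
\text{gap}_{\text{baseline}} &= B - A, \qquad \text{gap}_{C} = C - A, \\
\text{gap}_{C} - \text{gap}_{\text{baseline}} &= (C - A) - (B - A) = C - B .
\end{aligned}
```

The same cancellation holds with D, E, or F in place of C, so comparing cheating gaps and comparing the pledge conditions directly to the baseline give the same differences.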

Not a moral failure

I continue to emphasize that scientific problems do not necessarily correspond to moral problems. You can be a moral person and still do bad science (honesty and transparency are not enuf); to put it another way, if I say that you make a scientific error or are sloppy in your science, I’m not saying you’re a bad person.

For me to say someone’s a bad person just because they wrote a paper and didn’t follow their preregistration plan . . . that would be ridiculous! Over 99% of my published papers have no preregistration plans; and, for those that do have such plans, I’m pretty sure we didn’t exactly follow them in our published papers. That’s fine. The reason I do preregistration is not to protect my p-values; it’s just part of a larger process of hypothesizing about possible outcomes and simulating data and analysis as a prelude to measurement and data collection.

I think what happened in the “How pledges reduce dishonesty” paper is that the preregistration was both too vague and too specific. Too vague in that it did not include simulation and analysis of fake data, nor did it include quantitative hypotheses about effects and the distributions of outcomes, nor did it include anything close to what the authors ended up actually doing to support the claims in their paper. Too specific in that it included a bunch of analyses that the authors then didn’t think were worth reporting.

But, remember, science is hard. Statistics is hard. Even what might seem like simple statistics is hard. One thing I like about doing simulation-based design and analysis before collecting any data is that it forces me to make some of the hard choices early. So, yeah, it’s hard, and it’s no moral criticism of the authors of the above-discussed paper that they botched this. We’re all still learning. At the same time, yeah, I don’t think their study offers any serious evidence for the claims being made in that paper; it looks like noise mining to me. Not a moral failing; still, bad science in there being no good links between theory, effect sizes, data collection, and measurement, which, as is often the case, leads to super-noisy results that can be interpreted in all sorts of ways to fit just about any theory.

Possible positive outcomes for preregistration

I think preregistration is great; again, it’s a floor, not a ceiling, on the data processing and analyses that can be done.

Here are some possible benefits of preregistration:

1. Preregistration is a vehicle for getting you to think harder about your study. The need to simulate data and create a fake world forces you to make hard choices and consider what sorts of data you might expect to see.

2. Preregistration with fake-data simulation can make you decide to redesign a study, or to not do it at all, if it seems that it will be too noisy to be useful.

3. If you already have a great plan for a study, preregistration can allow the subsequent analysis to be bulletproof. No need to worry about concerns of p-hacking if your data coding and analysis decisions are preregistered—and this also holds for analyses that are not based on p-values or significance tests.

4. A preregistered replication can build confidence in a previous exploratory finding.

5. Conversely, a preregistered study can yield a null result, for example if it is designed to have a high statistical power but then does not yield statistically significant preregistered results. Failure is not always as exciting or informative as success (recall the expression “big if true”), but it ain’t nothing.

6. Similarly, a preregistered replication can yield a null result. Again, this can be a disappointment but still a step in scientific learning.

7. Once the data appears, and the preregistered analysis is done, if it’s unsuccessful, this can lead the authors to change their thinking and to write a paper explaining that they were wrong, or maybe just to publish a short note saying that the preregistered experiment did not go as expected.

8. If a preregistered analysis fails, but the authors still try to claim success using questionable post-hoc analysis, the journal reviewers can compare the manuscript to the preregistration, point out the problem, and require that the article be rewritten to admit the failure. Or, if the authors refuse to do that, the journal can reject the article as written.

9. Preregistration can be useful in post-publication review to build confidence in a published paper by reassuring readers who might have been concerned about p-hacking and forking paths. Readers can compare the published paper to the preregistration and see that it’s all ok.

10. Or, if the paper doesn’t follow the preregistration plan, readers can see this too. Again, it’s not a bad thing at all for the paper to go beyond the preregistration plan. That’s part of good science, to learn new things from the data. The bad thing is when a non-preregistered analysis is presented as if it were the preregistered analysis. And the good thing is that the reader can read the documents and see that this happened. As we did here.

In the case of this recent dishonesty paper, preregistration did not give benefit 1, nor did it give benefit 2, nor did it give benefits 3, 4, 5, 6, 7, 8, or 9. But it did give benefit 10. Benefit 10 is unfortunately the least of all the positive outcomes of preregistration. But it ain’t nothing. So here we are. Thanks to preregistration, we now know that we don’t need to take seriously the claims made in the published paper, “How pledges reduce dishonesty: The role of involvement and identification.”

For example, you should feel free to accept that the authors offer no evidence for their claim that “effective pledges could allow policymakers to reduce monitoring and enforcement resources currently allocated for lengthy and costly checks and inspections (that also increase the time citizens and businesses must wait for responses) and instead focus their attention on more effective post-hoc audits. What is more, pledges could serve as market equalizers, allowing better competition between small businesses, who normally cannot afford long waiting times for permits and licenses, and larger businesses who can.”

Huh??? That would not follow from their experiments, even if the results had all gone as planned.

There’s also this funny bit at the end of the paper:

I just don’t know whether to believe this. Did they sign an honesty pledge?

Overkill?

OK, it’s 2024, and maybe this all feels like shooting a rabbit with a cannon. A paper by Dan Ariely on the topic of dishonesty, published in an Elsevier journal, purporting to provide “guidance to managers and policymakers” based on the results of an online math-puzzle game? Whaddya expect? This is who-cares research at best, in a subfield that is notorious for unreplicable research.

What happened was I got sucked in. I came across this paper, and my first reaction was surprise that Ariely was still collaborating with people working on this topic. I would’ve thought that the crashing-and-burning of his earlier work on dishonesty would’ve made him radioactive as a collaborator, at least in this subfield.

I took a quick look and saw that the studies were preregistered. Then I wanted to see exactly what that meant . . . and here we are.

Once I did the work, it made sense to write the post, as this is an example of something I’ve seen before: a disconnect between the preregistration and the analyses in the paper, and a lack of engagement in the paper with all the things in the preregistration that did not go as planned.

Again, this post should not be taken as any sort of opposition to preregistration, which in this case led to positive outcome #10 on the above list. The 10th-best outcome, but better than nothing, which is what we would’ve had in the absence of preregistration.

Baby steps.

Supporting Bayesian modelling workflows with iterative filtering for multiverse analysis

There is a new paper on arXiv: “Supporting Bayesian modelling workflows with iterative filtering for multiverse analysis” by Anna Elisabeth Riha, Nikolas Siccha, Antti Oulasvirta, and Aki Vehtari.

Anna writes:

An essential component of Bayesian workflows is the iteration within and across models with the goal of validating and improving the models. Workflows make the required and optional steps in model development explicit, but also require the modeller to entertain different candidate models and keep track of the dynamic set of considered models.

By acknowledging the existence of multiple candidate models (universes) for any data analysis task, multiverse analysis provides an approach for transparent and parallel investigation of various models (a multiverse) that makes considered models and their underlying modelling choices explicit and accessible. While this is great news for the task of tracking considered models and their implied conclusions, more exploration can introduce more work for the modeller since not all considered models will be suitable for the problem at hand. With more models, more time needs to be spent with evaluation and comparison to decide which models are the more promising candidates for a given modelling task and context.

To make joint evaluation easier and reduce the amount of models in a meaningful way, we propose to filter out models with largely inferior predictive abilities and check computation and reliability of obtained estimates and, if needed, adjust models or computation in a loop of changing and checking. Ultimately, we evaluate predictive abilities again to ensure a filtered set of models that contains only the models that are sufficiently able to provide accurate predictions. Just like we filter out coffee grains in a coffee filter, our suggested approach sets out to remove largely inferior candidates from an initial multiverse and leaves us with a consumable brew of filtered models that is easier to evaluate and usable for further analyses. Our suggested approach can reduce a given set of candidate models towards smaller sets of models of higher quality, given that our filtering criteria reflect characteristics of the models that we care about.
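To give a feel for what this kind of filtering could look like in code, here is a toy sketch. It is not the algorithm from the paper: the filtering rule (drop a model if its predictive score trails the best one by more than a chosen multiple of the standard error of the difference), the `Candidate` fields, the threshold of 2, and all the numbers are assumptions made up for the illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    elpd: float           # estimated expected log predictive density (higher is better)
    se_diff: float        # assumed standard error of the elpd difference from the best model
    diagnostics_ok: bool  # whether computation checks passed for this fit


def filter_models(candidates, n_se=2.0):
    """Split a multiverse into kept models and models needing another look.

    Models that trail the best model by more than n_se standard errors are
    dropped. Surviving models with computational problems are flagged so they
    can be adjusted and checked again, rather than trusted as is.
    """
    best = max(candidates, key=lambda c: c.elpd)
    kept, flagged = [], []
    for c in candidates:
        gap = best.elpd - c.elpd
        if gap > n_se * c.se_diff:
            continue  # largely inferior predictive ability: filter out
        (kept if c.diagnostics_ok else flagged).append(c)
    return kept, flagged


# Hypothetical multiverse of four candidate models (made-up numbers).
multiverse = [
    Candidate("A", elpd=-100.0, se_diff=0.0, diagnostics_ok=True),
    Candidate("B", elpd=-103.0, se_diff=4.0, diagnostics_ok=True),
    Candidate("C", elpd=-130.0, se_diff=6.0, diagnostics_ok=True),
    Candidate("D", elpd=-101.0, se_diff=3.0, diagnostics_ok=False),
]
kept, flagged = filter_models(multiverse)
print("kept:", [c.name for c in kept])
print("needs adjusting and rechecking:", [c.name for c in flagged])
```

In a real workflow the flagged models would go back through the loop of changing and checking described above, and predictive performance would be evaluated again on whatever set remains.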

“Bayesian Workflow: Some Progress and Open Questions” and “Causal Inference as Generalization”: my two upcoming talks at CMU

I’ll be speaking twice at Carnegie Mellon soon.

CMU statistics seminar, Fri 5 Apr 2024, 2:15pm, in Doherty Hall A302:

Bayesian Workflow: Some Progress and Open Questions

The workflow of applied Bayesian statistics includes not just inference but also model building, model checking, confidence-building using fake data, troubleshooting problems with computation, model understanding, and model comparison. We would like to codify these steps in the realistic scenario in which researchers are fitting many models for a given problem. We discuss various issues including prior distributions, data models, and computation, in the context of ideas such as the Fail Fast Principle and the Folk Theorem of Statistical Computing. We also consider some examples of Bayesian models that give bad answers and see if we can develop a workflow that catches such problems. For background, see here: http://www.stat.columbia.edu/~gelman/research/unpublished/Bayesian_Workflow_article.pdf

CMU computer science seminar, Tues 9 Apr, 10:30am, in Gates Hillman Building 8102:

Causal Inference as Generalization

In causal inference, we generalize from sample to population, from treatment to control group, and from observed measurements to underlying constructs of interest. The challenge is that models for varying effects can be difficult to estimate from available data. For example, there is a conflict between two tenets of evidence-based medicine: (1) reliance on statistically significant estimates from controlled trials and (2) decision making for individual patients. There’s no way to get to step 2 without going beyond step 1. We discuss limitations of existing approaches to causal generalization and how it might be possible to do better using Bayesian multilevel models. For background, see here: http://www.stat.columbia.edu/~gelman/research/published/KennedyGelman_manuscript.pdf and here: http://www.stat.columbia.edu/~gelman/research/published/causalreview4.pdf and here: http://www.stat.columbia.edu/~gelman/research/unpublished/causal_quartets.pdf

In between the two talks is a solar eclipse. I hope there’s good weather in Cleveland on Monday at 3:15pm.

Bad parenting in the news, also, yeah, lots of kids don’t believe in Santa Claus

A recent issue of the New Yorker had two striking stories of bad parenting.

Margaret Talbot reported on a child/adolescent-care center in Austria from the 1970s that was run by former Nazis who were basically torturing the kids. This happened for decades. The focus of the story was a girl whose foster parents had abused her before sending her to this place. The creepiest thing about all of this was how normal it all seemed. Not normal to me, but normal to that society: abusive parents, abusive orphanage, abusive doctors, all of which fit into an authoritarian society. Better parenting would’ve helped, but it seems that all of these people were trapped in a horrible system, supported by an entrenched network of religious, social, and political influences.

In that same issue of the magazine, Sheelah Kolhatkar wrote about the parents of crypto-fraudster Sam Bankman-Fried. This one was sad in a different way. I imagine that most parents don’t want their children to grow up to be criminals, but such things happen. The part of the story that seemed particularly sad to me was how the parents involved themselves in their son’s crimes. They didn’t just passively accept it (which would be bad enough, but, sure, sometimes kids just won’t listen and they need to learn their lessons on their own); they very directly got involved, indeed profited from the criminal activity. What kind of message is that to send to your child? In some ways this is similar to the Austrian situation, in that the adults involved were so convinced of their moral righteousness. Anyway, it’s gotta be heartbreaking to realize that, not only did you not stop your child’s slide into crime, you actually participated in it.

Around the same time, the London Review of Books ran an article which motivated me to write them this letter:

Dear editors,

In his article in the 2 Nov 2023 issue, John Lanchester writes that financial fraudster Sam Bankman-Fried “grew up aware that his mind worked differently from most people’s. Even as a child he thought that the whole idea of Santa Claus was ridiculous.” I don’t know what things are like in England, but here in the United States it’s pretty common for kids to know that Santa Claus is a fictional character.

More generally, I see a problem with the idealization of rich people. It’s not enough to say that Bankman-Fried was well-connected, good at math, and had a lack of scruple that can be helpful in many aspects of life. He also has to be described as being special, so much that a completely normal disbelief in the reality of Santa Claus is taken as a sign of how exceptional he is.

Another example is Bankman-Fried’s willingness to gamble his fortune in the hope of even greater riches, which Lanchester attributes to the philosophy of effective altruism, rather than characterizing it as simple greed.

Yours

Andrew Gelman

New York

They’ve published my letters before (here and here), but not this time. I just hope that in the future they don’t take childhood disbelief in Santa Claus as a signal of specialness, or attribute a rich person’s desire for even more money to some sort of unusual philosophy.

“A passionate group of scientists determined to revolutionize the traditional publishing model in academia”

Jonathan Heppner saw our post, Refuted papers continue to be cited more than their failed replications: Can a new search engine be built that will fix this problem?, and writes:


Paper cited by Stanford medical school professor retracted—but even without considering the reasons for retraction, this paper was so bad that it should never have been cited.

Last year we discussed a paper sent to us by Matt Bogard. The paper was called, “Impact of cold exposure on life satisfaction and physical composition of soldiers,” it appeared in the British Medical Journal, and Bogard was highly suspicious of it. As he put it at the time:

I don’t have full access to this article to know the full details and can’t seem to access the data link but with n = 49 split into treatment and control groups for these outcomes (also making gender subgroup comparisons) this seems to scream, That which does not kill my statistical significance only makes it stronger.

I took a look and I agreed that the article was absolutely terrible. I guess it was better than most of the stuff published in the International Supply Chain Technology Journal, but that’s not saying much; indeed all it’s saying is that the paper strung together some coherent sentences.

Despite the paper being clearly very bad, it had been promoted by a professor at Stanford Medical School who has a side gig advertising this sort of thing:

What’s up with that?? Stanford’s supposed to be a serious university, no? I hate to see Stanford Medical School mixed up in this sort of thing.

News!

That article has been retracted:

The reason for the retraction is oddly specific. I think it would be enough for them to have just said they’re retracting the paper because it’s no good. As Gideon Meyerowitz-Katz put it:

To sum up – this is a completely worthless study that has no value whatsoever scientifically. It is quite surprising that it got published in its current form, and even more surprising that anyone would try to use it as evidence.

I agree that the study is worthless, even without the specific concerns that caused it to be retracted.

On the other hand, I’m not at all surprised that it got published, nor am I surprised that anyone would try to use it as evidence. Crap studies are published all the time, and they’re used as evidence all the time too.

By the way, if you are curious and want to take a look at the original paper:

You still gotta pay 50 bucks.

I wonder if that Stanford dude is going to announce the retraction of the evidence he touted for his claim that “deliberate cold exposure is great training for the mind.” I doubt it—if the quality of the evidence were important, he wouldn’t have cited the study in the first place—but who knows, I guess anything’s possible.

P.S. At this point, some people are gonna complain about how critical we all are. Why can’t we just let these people alone? I’ll give my usual answer, which is that (a) junk science is a waste of valuable resources and attention, and (b) bad science drives out the good. Somewhere there is a researcher who does good and careful work but was not hired at Stanford Medical School because of not being flashy enough. Just like there are Ph.D. students in psychology whose work does not get published in Psychological Science because it can’t compete with clickbait crap like the lucky golf ball study.

As Paul Alper says, one should always beat a dead horse because the horse is never really dead.

“Randomization in such studies is arguably a negative, in practice, in that it gives apparently ironclad causal identification (not really, given the ultimate goal of generalization), which just gives researchers and outsiders a greater level of overconfidence in the claims.”

Dean Eckles sent me an email with subject line, “Another Perry Preschool paper . . .” and this link to a recent research paper that reports, “We find statistically significant effects of the program on a number of different outcomes of interest.” We’ve discussed Perry Preschool before (see also here), so I was coming into this with some skepticism. It turns out that this new paper is focused more on methods than on the application. It begins:

This paper considers the problem of making inferences about the effects of a program on multiple outcomes when the assignment of treatment status is imperfectly randomized. By imperfect randomization we mean that treatment status is reassigned after an initial randomization on the basis of characteristics that may be observed or unobserved by the analyst. We develop a partial identification approach to this problem that makes use of information limiting the extent to which randomization is imperfect to show that it is still possible to make nontrivial inferences about the effects of the program in such settings. We consider a family of null hypotheses in which each null hypothesis specifies that the program has no effect on one of many outcomes of interest. Under weak assumptions, we construct a procedure for testing this family of null hypotheses in a way that controls the familywise error rate–the probability of even one false rejection–in finite samples. We develop our methodology in the context of a reanalysis of the HighScope Perry Preschool program. We find statistically significant effects of the program on a number of different outcomes of interest, including outcomes related to criminal activity for males and females, even after accounting for imperfections in the randomization and the multiplicity of null hypotheses.

I replied: “a family of null hypotheses in which each null hypothesis specifies that the program has no effect on one of many outcomes of interest . . .”: What the hell? This makes no sense at all.

Dean responded:

Yeah, I guess it is a complicated way of saying there’s a null hypothesis for each outcome…

To me this just really highlights the value of getting the design right in the first place — and basically always including well-defined randomization.

To which I replied: Randomization is fine, but I think much less important than measurement as a design factor. The big problem with all these preschool studies is noisy data. These selection-on-statistical-significance methods then combine with forking paths to yield crappy estimates. I’d say “useless estimates,” but I guess the estimates are useful in the horrible sense that they allow the promoters of these interventions to get attention and funding.

Randomization in such studies is arguably a negative, in practice, in that it gives apparently ironclad causal identification (not really, given the ultimate goal of generalization), which just gives researchers and outsiders a greater level of overconfidence in the claims. They’re following the “strongest link” reasoning, which is an obvious logical fallacy but that doesn’t stop it from driving so much policy research.

Dean:

Yes, definitely agreed that measurement is a key part of the design, as is choosing a sample size that has any hope of detecting reasonable effect sizes.

Me: Agreed. One reason I de-emphasize the importance of sample size is that researchers often seem to think that sample size is a quick fix. It goes like this: Researchers do a study and find a result that’s 1.5 se’s from 0. So then they think that if they just increase sample size by a factor of (2/1.5)^2, they’ll get statistical significance. Or that if their sample size were higher by a factor of (2.8/1.5)^2, they’d have 80% power. And then they do the study, they manage to find that statistically significant result, and they (a) declare victory on their substantive claim and (b) think that their sample size is retrospectively justified, in the same way that a baseball manager’s decision to leave in the starting pitcher is justified if the outcome is a W.
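Here is a quick sketch of that arithmetic in Python. It reproduces the naive reasoning described above, which treats the noisy estimate as if it were the true effect; it is shown to make the logic concrete, not to endorse it. The function name `sample_size_factor` is just an illustrative label.

```python
from statistics import NormalDist

z_obs = 1.5  # observed estimate, in standard errors from zero (from the text)

def sample_size_factor(z_target, z_obs):
    # Standard errors shrink like 1/sqrt(n), so moving a fixed estimate from
    # z_obs to z_target standard errors means multiplying n by this ratio squared.
    return (z_target / z_obs) ** 2

# The 2.8 is roughly the two-sided 5% critical value plus the 80th percentile
# of the standard normal distribution: 1.96 + 0.84.
z_crit = NormalDist().inv_cdf(0.975)
z_power = NormalDist().inv_cdf(0.80)

factor_significance = sample_size_factor(2.0, z_obs)
factor_power = sample_size_factor(2.8, z_obs)

print(round(factor_significance, 2))  # 1.78
print(round(factor_power, 2))         # 3.48
print(round(z_crit + z_power, 2))     # 2.8
```

The calculation is only as good as the 1.5 that goes into it, and that number is exactly the noisy quantity that should not be trusted.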

So, the existence of the “just increase the sample size” option can be an enabler for bad statistics and bad science. My favorite example along those lines is the beauty-and-sex-ratio study, which used a seemingly-reasonable sample size of 3000 but would realistically need something like a million people or more to have any reasonable chance of detecting any underlying signal.
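To get a feel for where a number like a million comes from, here is a rough sketch. The assumed true difference of 0.3 percentage points in the proportion of girls is an illustrative assumption, not a figure taken from the study, and the calculation assumes two equal-sized comparison groups and a baseline proportion of 0.5.

```python
from math import sqrt

P_BASE = 0.5            # assumed baseline proportion of girl births (approximation)
ASSUMED_EFFECT = 0.003  # hypothetical true difference: 0.3 percentage points

def se_diff(n_total, p=P_BASE):
    # Standard error of a difference in proportions between two equal groups
    n_group = n_total / 2
    return sqrt(p * (1 - p) * (2 / n_group))

# With n = 3000 the standard error dwarfs the assumed effect.
se_3000 = se_diff(3000)

# For 80% power we need effect / se of about 2.8; solve se_diff(n) = effect / 2.8.
n_required = 4 * P_BASE * (1 - P_BASE) * (2.8 / ASSUMED_EFFECT) ** 2

print(f"se with n=3000: {se_3000:.4f}")
print(f"n for 80% power: {n_required:,.0f}")
```

Under these assumptions the standard error at n = 3000 is several times the assumed effect, and the required sample size lands in the high hundreds of thousands. A smaller assumed effect pushes it well past a million.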

Dean:

Yes, that’s a good warning. Of course, for those who know better, obviously the original, extra noisy point estimate is not really helpful at all for power analysis. I think trying to use pilots to somehow get an initial estimate of an effect (rather than learn other things) is a common trap.

Yeah, and rules of thumb about what a “big sample” is can lead you astray. I’ve run some experiments where if you’d run the same experiment with 100k people, it would have been hopeless. A couple slides from my recent course on this point are attached… involving the same “failed” experiment as the second part of my recent post https://statmodeling.stat.columbia.edu/2023/10/16/getting-the-first-stage-wrong/

P.S. Regarding the title of this post, I’m not saying that randomization is always bad or usually bad or that it’s bad on net. What I’m saying is that it can be bad, and it can be bad in important situations, the kinds of settings where people want to find an effect.

To put it another way, suppose we start by looking at a study with randomization. Would it be better without random assignment, just letting participants in the study pick their treatments or having them assigned in some other way that would be subject to unmeasurable biases? No, of course not. Randomization doesn’t make a study worse. What it can do is give researchers and consumers of research an inappropriately warm and cozy feeling, leading them to not look at serious problems of interpretation of the results of the study, for example, extracting large and unreproducible results from small noisy samples and then using inappropriately applied statistical models to label such findings as “statistically significant.”
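A small simulation can make the "large and unreproducible results from small noisy samples" point concrete. This is a minimal sketch with made-up numbers: a hypothetical true effect of 1 and a standard error of 2, in arbitrary units. It keeps only the estimates that reach statistical significance and asks how much they overstate the truth.

```python
import random

random.seed(0)

TRUE_EFFECT = 1.0  # hypothetical small true effect (arbitrary units)
SE = 2.0           # hypothetical standard error of a small, noisy study
N_SIMS = 20000

# Simulate many replications of the same perfectly randomized study and keep
# only the estimates that would be declared statistically significant.
significant = []
for _ in range(N_SIMS):
    estimate = random.gauss(TRUE_EFFECT, SE)
    if abs(estimate) > 1.96 * SE:
        significant.append(estimate)

share_significant = len(significant) / N_SIMS
mean_abs_significant = sum(abs(e) for e in significant) / len(significant)
exaggeration = mean_abs_significant / TRUE_EFFECT
share_wrong_sign = sum(e < 0 for e in significant) / len(significant)

print(f"share reaching significance: {share_significant:.3f}")
print(f"exaggeration ratio: {exaggeration:.1f}")
print(f"share of significant results with wrong sign: {share_wrong_sign:.3f}")
```

In this setup any estimate that clears the significance threshold has to be at least 3.92 in absolute value, so it overstates the assumed true effect by roughly a factor of four or more, and some of the significant estimates have the wrong sign. The randomization is flawless in every simulated study; the problem is the noise combined with the selection.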

“Andrew, you are skeptical of pretty much all causal claims. But wait, causality rules the world around us, right? Plenty have to be true.”

Awhile ago, Kevin Lewis pointed me to this article that was featured in the Wall Street Journal. Lewis’s reaction was, “I’m not sure how robust this is with just some generic survey controls. I’d like to see more of an exogenous assignment.” I replied, “Nothing wrong with sharing such observational patterns. They’re interesting. I don’t believe any of the causal claims, but that’s ok, description is fine,” to which Lewis responded, “Sure, but the authors are definitely selling the causal claim.” I replied, “Whoever wrote that looks like they had the ability to get good grades in college. That’s about it.”

At this point, Alex Tabarrok, who’d been cc-ed on all this, jumped in to say, quite reasonably, “Andrew, you are skeptical of pretty much all causal claims. But wait, causality rules the world around us, right? Plenty have to be true.”

I replied to Alex as follows:

There are lots of causal claims that I believe! For this one, there are two things going on. First, do I think the claim is true? Maybe, maybe not, I have no idea. I certainly wouldn’t stake my reputation on a statement that the claim is false. Second, how relevant do I think this sort of data and analysis are to this claim? My answer: a bit relevant but not very. When I think about the causal claims that I believe, my belief is usually not coming from some observational study.

Regarding, “Plenty have to be true.” Yup, and that includes plenty of statements that are the opposite of what’s claimed to be true. For example, a few years ago a researcher preregistered a claim that exposure to poor people would cause middle-class people to have more positive views regarding economic redistribution policies. The researcher then did a study, found the opposite result (not statistically significant, but whatever), and then published the results and claimed that exposure to poor people would reduce middle-class people’s support for redistribution. So what do I believe? I believe that for most people, an encounter (staged or otherwise) with a person on the street would have essentially no effects on their policy views. For some people in some settings, though, the encounter could have an effect. Sometimes it could be positive, sometimes negative. In a large enough study it would be possible to find an average effect. The point is that plenty of things have to be true, but estimating average causal effects won’t necessarily find any of these things. And this does not even get into the difficulty with the study linked by Kevin, where the data are observational.
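As a toy illustration of how varying individual effects can hide behind an average, consider this sketch. The population shares and effect sizes are invented for illustration and are not estimates from any study.

```python
# Hypothetical population, for illustration only:
# (share of people, individual effect on support for redistribution, arbitrary units)
groups = [
    (0.90, 0.0),   # most people: essentially no effect
    (0.06, 5.0),   # some people in some settings: more supportive
    (0.04, -5.0),  # others: less supportive
]

average_effect = sum(share * effect for share, effect in groups)
share_with_any_effect = sum(share for share, effect in groups if effect != 0)
largest_individual_effect = max(abs(effect) for _, effect in groups)

print(f"average effect: {average_effect:.2f}")
print(f"share with any effect: {share_with_any_effect:.2f}")
print(f"largest individual effect: {largest_individual_effect:.1f}")
```

Here the average effect is tiny compared with the individual effects among the people who respond at all, and a modest change in the mix of people or settings would flip its sign.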

Or, for another example, sure, I believe that early childhood intervention can be effective in some cases. That doesn’t give me any obligation to believe the strong claims that have been made on its behalf using flawed data analysis.

To put it another way: the authors of all these studies should feel free to publish their claims. I just think lots of these studies are pretty random. Randomness can be helpful. Supposedly Philip K. Dick used randomization (the I Ching) to write some of his books. In this case, the randomization was a way to jog his imagination. Similarly, it could be that random social science studies are useful in that they give people an excuse to think about real problems, even if the studies themselves are not telling us what the researchers claim.

Finally, I think there’s a problem in social science that researchers are pressured to make strong causal claims that are not supported by their data. It’s a selection bias. Researchers who just make descriptive claims are less likely to get published in top journals, get newspaper op-eds, etc. This is just some causal speculation of my own: if the authors of this recent study had been more clear (to themselves and to others) that their conclusions are descriptive, not causal, none of us would’ve heard about the study in the first place.

Summary

There’s a division of labor in metascience as well as in science. I lean toward skepticism, to the extent that there must be cases where I don’t get around to thinking seriously about new ideas or results that are actually important. Alex leans toward openness, to the extent that there must be cases where he goes through the effort of working out the implications of results that aren’t real. It’s probably a good thing that the science media includes both of us. We play different roles in the system of communication.

Every time Tyler Cowen says, “Median voter theorem still underrated! Hail Anthony Downs!”, I’m gonna point him to this paper . . .

Here’s Cowen’s post, and here’s our paper:

Moderation in the pursuit of moderation is no vice: the clear but limited advantages to being a moderate for Congressional elections

Andrew Gelman and Jonathan N. Katz

September 18, 2007

It is sometimes believed that it is politically risky for a congressmember to go against his or her party. On the other hand, Downs’s familiar theory of electoral competition holds that political moderation is a vote-getter. We analyze recent Congressional elections and find that moderation is typically worth less than 2% of the vote. This suggests there is a motivation to be moderate, but not to the exclusion of other political concerns, especially in non-marginal districts. . . .

Banning the use of common sense in data analysis increases cases of research failure: evidence from Sweden

Olle Folke writes:

I wanted to highlight a paper by an author who has previously been featured on your blog when he was one of the co-authors of a paper on the effect of strip clubs on sex crimes in New York. This paper looks at the effect of criminalizing the buying of sex in Sweden and finds a 40-60% increase. However, the paper is as problematic as the one on strip clubs. In what I view as his two main specifications he uses the timing of the ban to estimate the effect. However, while there is no variation across regions he uses regional data to estimate the effect, which of course does not make any sense. Not surprisingly there is no adjustment for the dependence of the error term across observations.

What makes this analysis particularly weird is that there actually is no shift in the outcome if we use national data (see figure below). So basically the results must have been manufactured. As the author has not posted any replication files it is not possible to figure out what he has done to achieve the huge increase.

I think that his response to this critique is that he has three alternative estimation methods. However, these are not very convincing, and my suspicion is that none of those results would hold up to scrutiny either. Also, I find the use of alternative methods both strange and problematic. First, it suggests that neither method is convincing in itself. However, doing four additional problematic analyses does not make the first one better. Also, it gives authors an out when they are criticized, as it involves a lot of labor to work through each analysis (especially when there is no replication data).
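To see why Folke's point about dependence matters, here is a minimal simulation sketch. It is not a reconstruction of the paper's analysis: the number of regions, the number of periods, and the noise levels are all made up. The policy switches on for every region at the same time and has no true effect, but each period carries a national shock shared by all regions.

```python
import random
from math import sqrt

random.seed(1)

N_REGIONS = 21  # hypothetical number of regions
N_PERIODS = 20  # hypothetical number of time periods
N_PRE = 10      # periods before the nationwide policy change
N_SIMS = 1000

def mean(xs):
    return sum(xs) / len(xs)

def var(xs):
    m = mean(xs)
    return sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

def one_simulation():
    # No true policy effect: a shared national shock per period plus
    # independent region-level noise.
    pre_obs, post_obs, pre_means, post_means = [], [], [], []
    for t in range(N_PERIODS):
        national_shock = random.gauss(0, 1)
        ys = [national_shock + random.gauss(0, 1) for _ in range(N_REGIONS)]
        if t < N_PRE:
            pre_obs += ys
            pre_means.append(mean(ys))
        else:
            post_obs += ys
            post_means.append(mean(ys))
    diff = mean(post_obs) - mean(pre_obs)
    # Naive: treat every region-period observation as independent.
    se_naive = sqrt(var(pre_obs) / len(pre_obs) + var(post_obs) / len(post_obs))
    # Collapsed: one national average per period, since the policy varies only over time.
    se_collapsed = sqrt(var(pre_means) / len(pre_means) + var(post_means) / len(post_means))
    return abs(diff) > 1.96 * se_naive, abs(diff) > 1.96 * se_collapsed

results = [one_simulation() for _ in range(N_SIMS)]
false_positive_naive = sum(r[0] for r in results) / N_SIMS
false_positive_collapsed = sum(r[1] for r in results) / N_SIMS

print(f"false positive rate, naive regional analysis: {false_positive_naive:.2f}")
print(f"false positive rate, collapsed national series: {false_positive_collapsed:.2f}")
```

In this toy setup the naive regional analysis declares a "significant" policy effect far more often than the nominal 5%, while collapsing to the national series brings the rate back near nominal. Splitting a national time series into regions adds rows to the dataset but not independent information about the policy.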

I took a look at the linked paper, and . . . yeah, I’m skeptical. The article begins:

This paper leverages the timing of a ban on the purchase of sex to assess its impact on rape offenses. Relying on Swedish high-frequency data from 1997 to 2014, I find that the ban increases the number of rapes by around 44–62%.

But the above graph, supplied by Folke, does not show any apparent effect at all. The linked paper has a similar graph using monthly data that also shows nothing special going on at 1999:

This one’s a bit harder to read because of the two axes, the log scale, and the shorter time frame, but the numbers seem similar. In the time period under study, the red curve is around 5.0 on the log scale per month, 12*exp(5) = 1781, and the annual curve is around 2000, so that seems to line up.
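A quick check of that conversion, assuming the graph uses natural logs of monthly counts (the 5.0 and the 2000 are approximate readings off the two graphs):

```python
from math import exp, log

log_monthly = 5.0                 # approximate height of the red curve on the log scale
monthly = exp(log_monthly)        # back-transform to a monthly count
annual_from_monthly = 12 * monthly

# Going the other way: an annual count of about 2000, expressed as a monthly log.
log_monthly_from_annual = log(2000 / 12)

print(round(monthly))                     # 148
print(round(annual_from_monthly))         # 1781
print(round(log_monthly_from_annual, 2))  # 5.12
```

So a monthly log value of about 5.0 corresponds to roughly 1800 per year, and an annual count of 2000 corresponds to about 5.1 on the monthly log scale, which is close enough given how roughly the numbers are read off the plots.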

So, not much going on in the aggregate. But then the paper says:

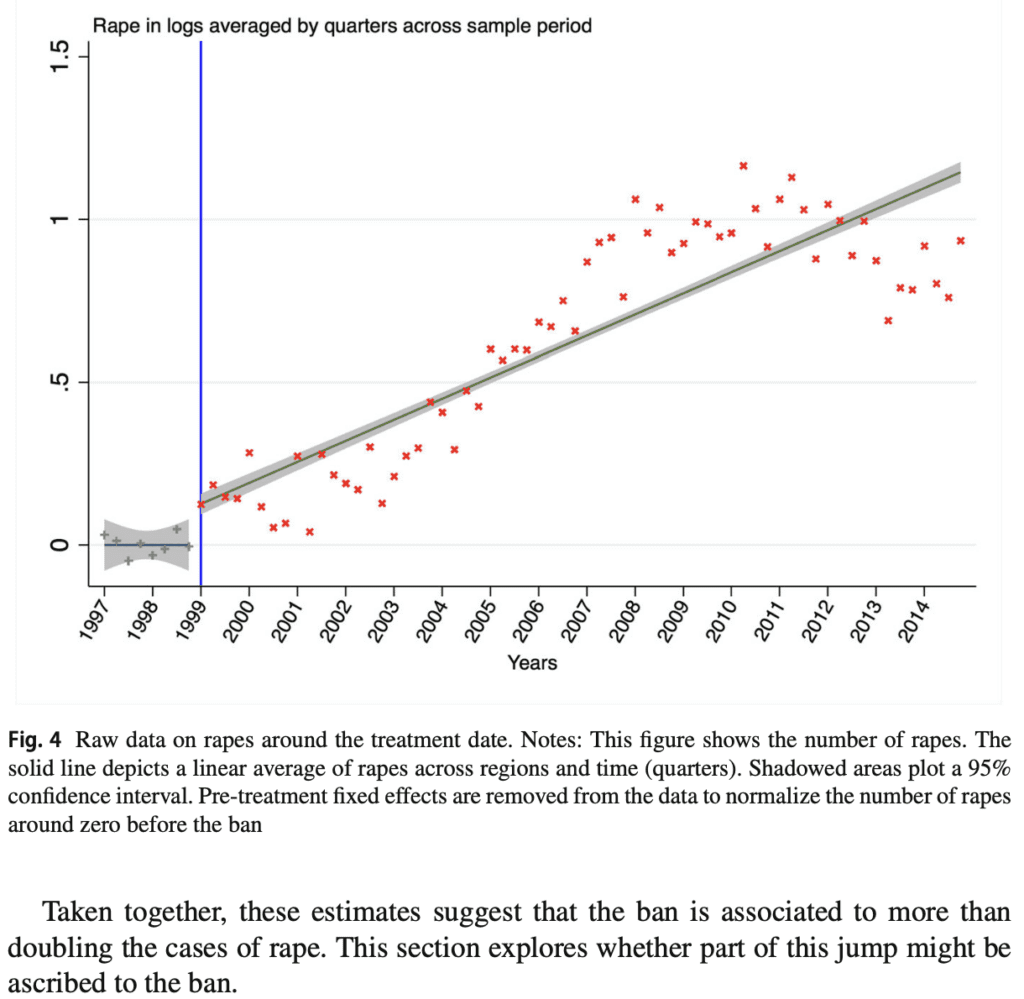

Several pieces of evidence find that rape more than doubled after the introduction of the ban. First, Table 1 finds that the average before the ban is around 6 rapes per region and month, while after the introduction is roughly 12. Second, Table 2 presents the results of the naive analysis of regressing rape on a binary variable taking value 0 before the ban and 1 after, controlling for year, month, and region fixed effects. Results show that the post ban period is associated with an increase of around 100% of cases of rape in logs and 125% of cases of rape in the inverse hyperbolic sine transformation (IHS, hereafter). Third, a simple descriptive exercise –plotting rape normalized before the ban around zero by removing pre-treatment fixed effects– encounters that rape boosted around 110% during the sample period (Fig. 4).

OK, the averages don’t really tell us anything much at all: they’re looking at data from 1997-2014, the policy change happened in 1999, in the midst of a slow increase, and most of the change happened after 2004, as is clearly shown in Folke’s graph. So Table 1 and Table 2 are pretty much irrelevant.

But what about Figure 4:

This looks pretty compelling, no?