I have a story for you about a success of preregistration. Not quite the sort of success that you might be expecting—not a scientific success—but a kind of success nonetheless.

It goes like this. An experiment was conducted. It was preregistered. The results section was written up in a way that reads as if the experiment worked as planned. But if you go back and forth between the results section and the preregistration plan, you realize that the purportedly successful results did not follow the preregistration plan. They’re just the usual story of fishing and forking paths and p-hacking. The preregistration plan was too vague to be useful, and the authors didn’t even bother to follow it (or, if they did follow it, they didn’t bother to write up the results of the preregistered analysis).

As I’ve said many times before, there’s no reason that preregistration should stop researchers from doing further analyses once they see their data. The problem in this case is that the published analysis was not well justified either from a statistical or a theoretical perspective, nor was it in the preregistration. Its only value appears to be as a way for the authors to spin a story around a collection of noisy p-values.

On the minus side, the paper was published, and nowhere in the paper does it say that the statistical evidence they offer from their study does not come from the preregistration. In the abstract, their study is described as “pre-registered,” which isn’t a lie (there’s a preregistration plan right there on the website), but it’s misleading, given that the preregistration does not line up with what’s in the paper.

On the plus side, outside readers such as ourselves can see the paper and the preregistrations and draw our own conclusions. It’s easier to see the problems with p-hacking and forking paths when the analysis choices are clearly not in the preregistration plan.

The paper

The Journal of Experimental Social Psychology recently published an article, “How pledges reduce dishonesty: The role of involvement and identification,” by Eyal Peer, Nina Mazar, Yuval Feldman, and Dan Ariely.

I had no idea that Ariely is still publishing papers on dishonesty! It says that data from this particular paper came from online experiments. Nothing involving insurance records or paper shredders or soup bowls or 80-pound rocks . . . It seems likely that, in this case, the experiments actually happened and that the datasets came from real people and have not been altered.

And the studies are preregistered, with the preregistration plans all available on the paper’s website.

I was curious about that. The paper had 4 studies. I just looked at the first one, which already took some effort on my part. The rest of you can feel free to look at Studies 2, 3, and 4.

The results section and the preregistration

From the published paper:

The first study examined the effects of four different honesty pledges that did or did not include a request for identification and asked for either low or high involvement in making the pledge (fully-crossed design), and compared them to two conditions without any pledge (Control and Self-Report).

There were six conditions: one control (with no possibility to cheat), a baseline treatment (possibility and motivation to cheat and no honesty pledge), and four different treatments with honesty pledges.

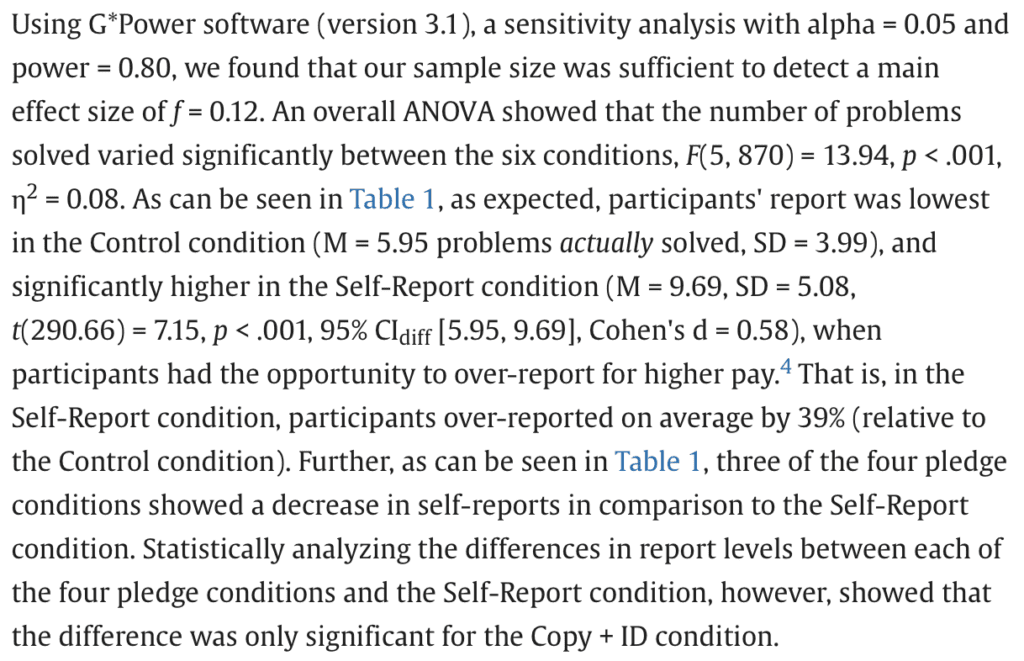

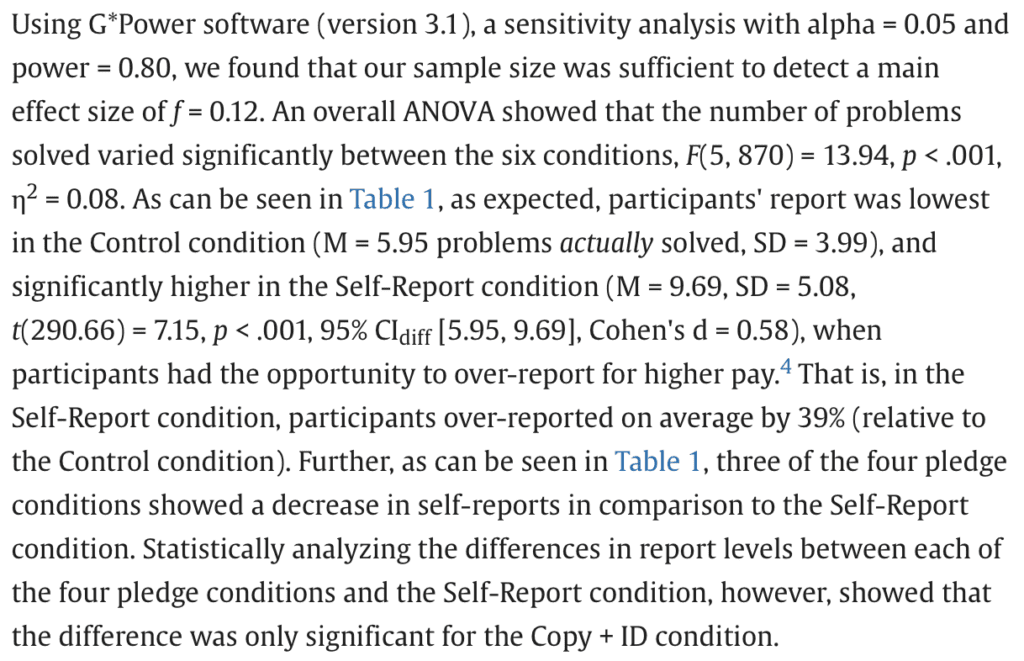

This is what they reported for their primary outcome:

And this is how they summarize in their discussion section:

Interesting, huh?

Now let’s look at the relevant section of the preregistration:

Compare that to what was done in the paper:

– They did the Anova, but that was not relevant to the claims in the paper. The Anova included the control condition, and nobody’s surprised that when you give people the opportunity and motivation to cheat, some people will cheat. That was not the point of the paper. It’s fine to do the Anova; it’s just more of a manipulation check than anything else.

– There’s something in the preregistration about a “cheating gap” score, which I did not see in the paper. But if we define A to be the average outcome under the control, B to be the average outcome under the baseline treatment, and C, D, E, F to be the averages under the other four treatments, then I think the preregistration is saying they’ll define the cheating gap as B-A, and then compare this to C-A, D-A, E-A, and F-A. Because A cancels in each difference, for example (C-A) - (B-A) = C-B, this is mathematically the same as looking at C-B, D-B, E-B, and F-B, which is what they do in the paper.

– The article jumps back and forth between different statistical summaries: “three of the four pledge conditions showed a decrease in self-reports . . . the difference was only significant for the Copy + ID condition.” It’s not clear what to make of it. They’re using statistical significance as evidence in some way, but the preregistration plan does not make it clear what comparisons would be done, how many comparisons would be made, or how they would be summarized.

– The preregistration plan says, “We will replicate the ANOVAs with linear regressions with the Control condition or Self-Report conditions as baseline.” I didn’t see any linear regressions in the results for this experiment in the published paper.

– The preregistration plan says, “We will also examine differences in the distribution of the percent of problems reported as solved between conditions using Kolmogorov–Smirnov tests. If we find significant differences, we will also examine how the distributions differ, specifically focusing on the differences in the percent of “brazen” lies, which are defined as the percent of participants who cheated to a maximal, or close to a maximal, degree (i.e., reported more than 80% of problems solved). The differences on this measure will be tested using chi-square tests.” I didn’t see any of this in the paper either! Maybe this is fine, because doing all these tests doesn’t seem like a good analysis plan to me.

How do we think of all the analyses stated in the preregistration plan that were not in the paper? Since these analyses were preregistered, I can only assume the authors performed them. Maybe the results were not impressive and so they weren’t included. I don’t know; I didn’t see any discussion of this in the paper.

– The preregistration plan says, “Lastly, we will explore interactions effects between the condition and demographic variables such as age and gender using ANOVA and/or regressions.” They didn’t report any of that either! Also there’s the weird “and/or” in the preregistration, which gives the researchers some additional degrees of freedom.
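To get a feel for why it matters that the plan never pinned down which comparisons would be made, here is a minimal fake-data sketch in Python. Everything in it is an assumption for illustration: the group means, the standard deviation of 25, the 100 participants per condition, and the normal-approximation test are made up and are not taken from the paper or its preregistration. It simulates a world in which the pledges do nothing, checks that comparing cheating gaps is the same as comparing each pledge condition to the baseline, and estimates how often at least one of the four pledge comparisons reaches p < 0.05 anyway.

```python
import random
from statistics import NormalDist, mean, stdev

PLEDGE_CONDITIONS = "CDEF"  # hypothetical labels for the four pledge conditions


def two_sided_p(x, y):
    """Two-sided p-value from a normal-approximation two-sample test."""
    se = (stdev(x) ** 2 / len(x) + stdev(y) ** 2 / len(y)) ** 0.5
    z = (mean(x) - mean(y)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def gap_identity_holds(avg):
    """Check that (k - A) - (B - A) equals k - B for each pledge condition k."""
    return all(
        abs((avg[k] - avg["A"]) - (avg["B"] - avg["A"]) - (avg[k] - avg["B"])) < 1e-9
        for k in PLEDGE_CONDITIONS
    )


def simulate_once(rng, n=100):
    """Simulate one six-condition study in which the pledges have no effect.

    A = control (no chance to cheat), B = baseline (can cheat, no pledge),
    C..F = pledge conditions. All numbers are assumed, not from the paper.
    """
    true_means = {"A": 30.0, "B": 40.0, "C": 40.0, "D": 40.0, "E": 40.0, "F": 40.0}
    data = {k: [rng.gauss(m, 25.0) for _ in range(n)] for k, m in true_means.items()}
    avg = {k: mean(v) for k, v in data.items()}
    assert gap_identity_holds(avg)
    # One p-value per pledge-vs-baseline comparison
    return [two_sided_p(data[k], data["B"]) for k in PLEDGE_CONDITIONS]


def family_wise_rate(n_sims=1000, n=100, seed=1):
    """Share of simulated null studies with at least one comparison at p < 0.05."""
    rng = random.Random(seed)
    hits = sum(min(simulate_once(rng, n)) < 0.05 for _ in range(n_sims))
    return hits / n_sims


if __name__ == "__main__":
    rate = family_wise_rate()
    print(f"At least one 'significant' pledge effect in {rate:.0%} of null studies")
```

With four comparisons against a shared baseline, the chance of finding at least one nominally significant difference should come out well above 5%, even when nothing is going on. That is the sense in which "the difference was only significant for one condition" is hard to interpret without a plan stated in advance.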

Not a moral failure

I continue to emphasize that scientific problems do not necessarily correspond to moral problems. You can be a moral person and still do bad science (honesty and transparency are not enuf); to put it another way, if I say that you make a scientific error or are sloppy in your science, I’m not saying you’re a bad person.

For me to say someone’s a bad person just because they wrote a paper and didn’t follow their preregistration plan . . . that would be ridiculous! Over 99% of my published papers have no preregistration plans; and, those that do have such plans, I’m pretty sure we didn’t exactly follow them in our published papers. That’s fine. The reason I do preregistration is not to protect my p-values; it’s just part of a larger process of hypothesizing about possible outcomes and simulating data and analysis as a prelude to measurement and data collection.

I think what happened in the “How pledges reduce dishonesty” paper is that the preregistration was both too vague and too specific. Too vague in that it did not include simulation and analysis of fake data, nor did it include quantitative hypotheses about effects and the distributions of outcomes, nor did it include anything close to what the authors ended up actually doing to support the claims in their paper. Too specific in that it included a bunch of analyses that the authors then didn’t think were worth reporting.

But, remember, science is hard. Statistics is hard. Even what might seem like simple statistics is hard. One thing I like about doing simulation-based design and analysis before collecting any data is that it forces me to make some of the hard choices early. So, yeah, it’s hard, and it’s no moral criticism of the authors of the above-discussed paper that they botched this. We’re all still learning. At the same time, yeah, I don’t think their study offers any serious evidence for the claims being made in that paper; it looks like noise mining to me. Not a moral failing; still, it’s bad science, in that there are no good links between theory, effect sizes, data collection, and measurement, which, as is often the case, leads to super-noisy results that can be interpreted in all sorts of ways to fit just about any theory.
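Here is a rough sketch of what simulation-based design before data collection could look like for a single pledge-versus-baseline comparison. The numbers are hypothetical: I am assuming a true reduction of 3 percentage points in problems reported as solved, a standard deviation of 25, and a normal-approximation test. None of these values come from the paper. The point is only that you have to write down an effect size and a noise level before you can run it.

```python
import random
from statistics import NormalDist, mean, stdev


def simulate_study(rng, n, true_effect, sd, base=40.0):
    """Simulate one two-group study; return (estimated effect, two-sided p-value).

    The effect is defined as baseline mean minus pledge mean, so a positive
    true_effect means the pledge reduces reported performance.
    """
    baseline = [rng.gauss(base, sd) for _ in range(n)]
    pledge = [rng.gauss(base - true_effect, sd) for _ in range(n)]
    est = mean(baseline) - mean(pledge)
    se = (stdev(baseline) ** 2 / n + stdev(pledge) ** 2 / n) ** 0.5
    p = 2 * (1 - NormalDist().cdf(abs(est / se)))
    return est, p


def design_summary(n, true_effect, sd, n_sims=1000, seed=1):
    """Estimate power and the average exaggeration of 'significant' estimates.

    Exaggeration is the mean absolute significant estimate divided by the
    assumed true effect; true_effect must be nonzero.
    """
    rng = random.Random(seed)
    results = [simulate_study(rng, n, true_effect, sd) for _ in range(n_sims)]
    significant = [est for est, p in results if p < 0.05]
    power = len(significant) / n_sims
    if significant:
        exaggeration = mean(abs(est) for est in significant) / abs(true_effect)
    else:
        exaggeration = float("nan")
    return power, exaggeration


if __name__ == "__main__":
    # Hypothetical sample sizes per condition
    for n in (100, 2000):
        power, exaggeration = design_summary(n=n, true_effect=3.0, sd=25.0, n_sims=300)
        print(f"n={n}: power ~ {power:.2f}, exaggeration ~ {exaggeration:.1f}x")
```

Under these assumed values, the small-sample design has low power, and the estimates that do happen to reach significance overstate the assumed effect severalfold. If a simulation like this comes back looking that noisy, that is the time to redesign the study, before any data are collected.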

Possible positive outcomes for preregistration

I think preregistration is great; again, it’s a floor, not a ceiling, on the data processing and analyses that can be done.

Here are some possible benefits of preregistration:

1. Preregistration is a vehicle for getting you to think harder about your study. The need to simulate data and create a fake world forces you to make hard choices and consider what sorts of data you might expect to see.

2. Preregistration with fake-data simulation can make you decide to redesign a study, or to not do it at all, if it seems that it will be too noisy to be useful.

3. If you already have a great plan for a study, preregistration can allow the subsequent analysis to be bulletproof. No need to worry about concerns of p-hacking if your data coding and analysis decisions are preregistered—and this also holds for analyses that are not based on p-values or significance tests.

4. A preregistered replication can build confidence in a previous exploratory finding.

5. Conversely, a preregistered study can yield a null result, for example if it is designed to have a high statistical power but then does not yield statistically significant preregistered results. Failure is not always as exciting or informative as success (recall the expression “big if true”), but it ain’t nothing.

6. Similarly, a preregistered replication can yield a null result. Again, this can be a disappointment but still a step in scientific learning.

7. Once the data appears, and the preregistered analysis is done, if it’s unsuccessful, this can lead the authors to change their thinking and to write a paper explaining that they were wrong, or maybe just to publish a short note saying that the preregistered experiment did not go as expected.

8. If a preregistered analysis fails, but the authors still try to claim success using questionable post-hoc analysis, the journal reviewers can compare the manuscript to the preregistration, point out the problem, and require that the article be rewritten to admit the failure. Or, if the authors refuse to do that, the journal can reject the article as written.

9. Preregistration can be useful in post-publication review to build confidence in a published paper by reassuring readers who might have been concerned about p-hacking and forking paths. Readers can compare the published paper to the preregistration and see that it’s all ok.

10. Or, if the paper doesn’t follow the preregistration plan, readers can see this too. Again, it’s not a bad thing at all for the paper to go beyond the preregistration plan. That’s part of good science, to learn new things from the data. The bad thing is when a non-preregistered analysis is presented as if it were the preregistered analysis. And the good thing is that the reader can read the documents and see that this happened. As we did here.

In the case of this recent dishonesty paper, preregistration did not give benefit 1, nor did it give benefit 2, nor did it give benefits 3, 4, 5, 6, 7, 8, or 9. But it did give benefit 10. Benefit 10 is unfortunately the least of all the positive outcomes of preregistration. But it ain’t nothing. So here we are. Thanks to preregistration, we now know that we don’t need to take seriously the claims made in the published paper, “How pledges reduce dishonesty: The role of involvement and identification.”

For example, you should feel free to accept that the authors offer no evidence for their claim that “effective pledges could allow policymakers to reduce monitoring and enforcement resources currently allocated for lengthy and costly checks and inspections (that also increase the time citizens and businesses must wait for responses) and instead focus their attention on more effective post-hoc audits. What is more, pledges could serve as market equalizers, allowing better competition between small businesses, who normally cannot afford long waiting times for permits and licenses, and larger businesses who can.”

Huh??? That would not follow from their experiments, even if the results had all gone as planned.

There’s also this funny bit at the end of the paper:

I just don’t know whether to believe this. Did they sign an honesty pledge?

Overkill?

OK, it’s 2024, and maybe this all feels like shooting a rabbit with a cannon. A paper by Dan Ariely on the topic of dishonesty, published in an Elsevier journal, purporting to provide “guidance to managers and policymakers” based on the results of an online math-puzzle game? Whaddya expect? This is who-cares research at best, in a subfield that is notorious for unreplicable research.

What happened was I got sucked in. I came across this paper, and my first reaction was surprise that Ariely was still collaborating with people working on this topic. I would’ve thought that the crashing-and-burning of his earlier work on dishonesty would’ve made him radioactive as a collaborator, at least in this subfield.

I took a quick look and saw that the studies were preregistered. Then I wanted to see exactly what that meant . . . and here we are.

Once I did the work, it made sense to write the post, as this is an example of something I’ve seen before: a disconnect between the preregistration and the analyses in the paper, and a lack of engagement in the paper with all the things in the preregistration that did not go as planned.

Again, this post should not be taken as any sort of opposition to preregistration, which in this case led to positive outcome #10 on the above list. The 10th-best outcome, but better than nothing, which is what we would’ve had in the absence of preregistration.

Baby steps.