Steve Ziliak wrote in:

I thought you might be interested in the following exchanges on randomized trials:

Here are a few exchanges on the economics and ethics of randomized controlled trials, reacting to my [Ziliak’s] study with Edward R. Teather-Posadas, “The Unprincipled Randomization Principle in Economics and Medicine”.

Our study is forthcoming in the Oxford Handbook of Professional Economic Ethics (OUP 2014, eds. George DeMartino and Deirdre N. McCloskey).

Reaction by Casey Mulligan, in The New York Times.

In the Financial Times, by Tim Harford aka “The Undercover Economist”.

Here is a reaction by Jed Friedman, a Senior Economist at the World Bank.

And here is our reply to Friedman.

In reply, I pointed to this article of mine on experimental reasoning in social science, where I discuss the following questions:

1. Why do I agree with the consensus characterization of randomized experimentation as a gold standard?

2. Given point 1 above, why does almost all my research use observational data?

Ziliak wrote back:

Your numbers 1 and 2 remind me of a mathematician-philosopher friend, Raymond Smullyan, a master of self-defeating statements.

I was very happy to be compared with the great Smullyan. Ziliak continued:

Raymond is awesome! I first got to know him in ’85/’86, when I was an undergrad at Indiana Bloomington. Smullyan was on the faculty of philosophy, math, and computer science, hired there by Doug Hofstadter if I correctly recall. But Ray was close to retirement (so to speak: he currently, at age 95, has at least four books in progress) and he’d walk the town of Bloomington, eating in diners, sitting under a flowering tree, or just walking and smiling like a living statue of Buddha. I started talking to him at a diner we frequented.

More than 20 years later I got in touch with him and invited him to Roosevelt U in Chicago for several days of lecturing, piano playing, and magic tricks. He swept people off their feet and all the ladies – and taxi drivers, too – wanted to take a picture with him.

Here are some attempts at Smullyanism I [Ziliak] wrote to introduce him to the Roosevelt faculty (or rather reintroduce: he taught here in 1955):

1. I refuse to mention Raymond Smullyan’s name.

2. I can’t say anything more with this sentence.

3. Who can remain silent when talking about Raymond?

4. Introducing Raymond Smullyan, who needs no introduction.

Not bad.

P.S. He’s Ziliak, not Zilliak. I just went through and corrected the spelling.

Wow, I had no idea Smullyan wrote about chess. I figured it was Andrew’s usual trick of a one-level-removed reference and that there was some chess master named “Smullyan”. I picked up Smullyan’s books on logic puzzles (and even one on logic) in high school and when starting college in the early 1980s, right after reading Hofstadter’s Gödel, Escher, Bach. They’re some of the few paper products I kept (Smullyan’s, that is; I don’t know if I kept Hofstadter’s, which I found annoying when I tried to reread it many years later). Smullyan’s books are right on the shelf next to Martin Gardner and Flatland.

Alas, I still know more about logic and set theory than I do about probability or stats, so I’ll leave the serious answers to this post to those more knowledgeable.

Bob: I really enjoyed Smullyan’s What Is the Name of This Book?: The Riddle of Dracula and Other Logical Puzzles – my daughter was just the right age to enjoy reading it and we read most of it together.

This might provide some serious content on the logic of randomisation (most university libraries should have it available online):

The Collected Papers of Charles Sanders Peirce. Electronic edition. Volume 1: Principles of Philosophy.

Transcribed from: Peirce, Charles Sanders (1839–1914). The Collected Papers of Charles Sanders Peirce. Cambridge, MA: Harvard University Press, 1931–1935. Volumes 1–6 edited by Charles Hartshorne and Paul Weiss.

Section XVI.

§16. Reasoning from Samples

The main point is: “That this [randomisation] does justify induction is a mathematical proposition beyond dispute.”

Related to Ziliak’s thoughts on the ethics of RCTs, Steven Epstein’s book, “Impure Science”, describes the interactions between ACT-UP activists and the FDA regarding the use of placebos in the early clinical trials of antiviral drugs for HIV infections. For people who don’t want to read the whole book, Harry Collins and Trevor Pinch provide a nice summary of it in a chapter of their book, “The Golem at Large.”

The randomized controlled trials of AZT in the mid to late 1980s were being undermined by such things as patients who would swap pills with each other to ensure that everyone (even the patients in the placebo arm) would get some of the actual AZT, and who would take bootleg drugs without telling the researchers. Patients would also test their pills to see whether they were getting AZT or placebos (this was easy: a patient could open the capsule and taste the contents; AZT was very bitter and the placebos were sweet). Epstein described how giving ACT-UP activists a place at the table and redesigning the trials to eliminate the placebo branch produced better compliance with protocols and more honest self-reports from participants in the trial.

I just read Friedman’s blog entry and then Ziliak et al.’s reply to him, back to back, and I found the latter just appalling in tone and content.

Friedman says “Let’s leave aside the particular question of whether you or I believe the eyeglass study is ethical or not…” in an explicit, clear attempt to find useful common and practical ground (“Framing the discussion in this fashion shifts the point of contention in a productive direction…”). IMO there’s no fair reading of this that misses this specific and useful rhetorical purpose, or that imputes a general lack of common morality to Friedman (yet we get: “…for some reason Friedman—joined by other randomizing economists–wants to ‘leave aside’ the ethics question.” and “…perhaps Friedman believes … that the ends justify the means.”)

Friedman’s joke in the comment section about one anonymous commenter’s pseudonym justifies nearly a full paragraph of snark.

The rest is as bad.

Yeah, I did not find Ziliak’s response an example of an enquirer trying to get less wrong from the comments of others…

And Gosset/Student was far from a winner in his exchange with Fisher re: alternation versus randomization.

An admirer of my friend is my friend?

I had understood his comments on Gosset v Fisher in various sources to be about the need for balance in experimental design, rather than alternation per se – have I got this wrong? This letter is an interesting short read on the history of alternation versus randomization: http://www.bmj.com/content/319/7221/1372

I haven’t found his comments on balance persuasive – randomization can incorporate minimization and stratification etc and hence the possibility of chance imbalance is not in itself an argument against RCTs.

P.S. By ‘his’ in the second paragraph above, I mean Ziliak.
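As a rough illustration of randomization that incorporates stratification, here is a minimal Python sketch, assuming each unit is hashable and belongs to exactly one stratum (sex, say). The function name `stratified_randomize` and the sample are hypothetical, and the sketch does permuted within-stratum assignment only; it does not implement minimization.

```python
import random
from collections import defaultdict


def stratified_randomize(units, stratum_of, seed=None):
    """Assign each unit to "T" or "C" separately within each stratum.

    Units in a stratum are shuffled and then alternated between arms, so the
    two arms differ by at most one unit per stratum (hypothetical helper).
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in units:
        strata[stratum_of(unit)].append(unit)

    assignment = {}
    for key in sorted(strata):  # fixed order keeps a seeded run reproducible
        members = list(strata[key])
        rng.shuffle(members)
        # Coin flip decides which arm gets the extra unit in an odd-sized stratum.
        arms = ("T", "C") if rng.random() < 0.5 else ("C", "T")
        for i, unit in enumerate(members):
            assignment[unit] = arms[i % 2]
    return assignment


# Hypothetical sample: 30 men and 20 women, identified by (id, sex) tuples.
people = [(i, "M" if i < 30 else "F") for i in range(50)]
assigned = stratified_randomize(people, stratum_of=lambda p: p[1], seed=2014)
```

With 30 men and 20 women, each arm gets exactly 15 men and 10 women, so a gross sex imbalance between arms cannot arise by chance; which individuals land in which arm is still random.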

“appalling in tone and content”…. I couldn’t get past the tone.

Yes, Ziliak’s tone is bizarre. His harping on the Chinese eyeglasses point is bizarre. He seems to miss that his problem with that study isn’t with the existence of a control group, but the need to run the study at all, since of course the benefits of eyeglasses are just so self-evidently above $15 per person. The fact that he attributes the ethical issue to the control group is extremely disingenuous.

It’s not new for him to hold such a patronizing tone–his and McCloskey’s “The Cult of Statistical Significance” is one of the most patronizing pieces of work I’ve seen.

Yes, the attack based on the commenter’s reply seemed particularly dense. Does Ziliak have no sense of humor, or was he one-upping Friedman with some ironic meta-humor?

Also, journal editors want you to chop balance tables in the published versions. He should look at more working papers.

> Also, journal editors want you to chop balance tables in the published version

Do you know if this is true of AER? Ziliak asserts that part of his actual research findings for a handbook of ethics (i.e. something of potential consequence, not the weird blog post) is that “The fact is that none of the AER papers (0% [bxg: thanks very much!]) offered data showing the extent of balance or imbalance of their experimental design and results.” It would put things in a different light if AER had a policy of cutting such details! I have no idea if they do; does anyone? But would Ziliak even ask or care anyway? (No idea, but his blog post gives _me_ a rather strong prior.)
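For readers who haven’t seen one, a balance table usually compares covariate means across arms, often alongside a standardized mean difference (SMD). Below is a minimal sketch under assumed conventions (pooled SD taken as the square root of the average of the two sample variances); the function name, covariates, and data are hypothetical, and it says nothing about what AER does or doesn’t require.

```python
import statistics


def balance_table(treat, control):
    """Return {covariate: (mean_T, mean_C, standardized mean difference)}.

    treat/control map covariate names to lists of values. The SMD divides the
    difference in means by the pooled SD sqrt((var_T + var_C) / 2).
    """
    rows = {}
    for name in treat:
        t, c = treat[name], control[name]
        mean_t, mean_c = statistics.mean(t), statistics.mean(c)
        pooled_sd = ((statistics.variance(t) + statistics.variance(c)) / 2) ** 0.5
        smd = (mean_t - mean_c) / pooled_sd if pooled_sd > 0 else 0.0
        rows[name] = (mean_t, mean_c, smd)
    return rows


# Hypothetical covariates for four treated and four control children.
table = balance_table(
    {"age": [10, 11, 12, 13], "girl": [1, 0, 1, 0]},
    {"age": [10, 12, 12, 14], "girl": [1, 1, 0, 0]},
)
for name, (mt, mc, smd) in table.items():
    print(f"{name:>5}  {mt:6.2f}  {mc:6.2f}  {smd:+.3f}")
```

Reporting the SMD rather than a p-value per covariate is one common choice, since it doesn’t depend on sample size.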

The reply seemed really strange and uncomfortable. I don’t know much about this Chinese eyeglasses study, but I doubt that testing the usefulness of eyeglasses was the goal; I’m pretty sure it was testing the usefulness of providing glasses at no cost. I have read the abstract, and it points out some interesting results, such as that 1/3 of families turned down the offer of free glasses. Thus the study looked at both the impact of the “offer” and of the actual “wearing” of glasses:

“raising the question of why such investments are not made by most families. We find that girls are more likely to refuse free eyeglasses, and that lack of parental awareness of vision problems, mothers’ education, and economic factors (expenditures per capita and price) significantly affect whether children wear eyeglasses in the absence of the intervention. ”
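The distinction between the effect of the “offer” and the effect of actually “wearing” glasses can be made concrete with the standard intention-to-treat (ITT) and Wald (instrumental-variables) calculations. This is a sketch, not necessarily the study’s own method: it assumes the offer was randomly assigned, that the offer affects outcomes only through wearing glasses, and that nobody wears glasses *because* they weren’t offered them. The helper name and numbers are hypothetical.

```python
def itt_and_wald(y_offer, y_control, wear_offer, wear_control):
    """Return (ITT effect of the offer, Wald estimate of the effect of wearing).

    y_*: outcomes per child; wear_*: 1 if the child wears glasses, else 0.
    Hypothetical helper for illustration only.
    """
    def mean(xs):
        return sum(xs) / len(xs)

    itt = mean(y_offer) - mean(y_control)                # effect of being offered
    first_stage = mean(wear_offer) - mean(wear_control)  # offer's effect on take-up
    if first_stage == 0:
        raise ValueError("offer did not change take-up; Wald ratio undefined")
    return itt, itt / first_stage


# Hypothetical data: 3 of 4 offered children wear glasses, no controls do.
itt, wald = itt_and_wald([4, 4, 4, 0], [0, 0, 0, 0], [1, 1, 1, 0], [0, 0, 0, 0])
print(itt, wald)  # 3.0 4.0
```

When some families turn the offer down, the ITT is diluted; dividing by the take-up difference rescales it to the effect among children who wear glasses because of the offer.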

As was described in the various discussions, it seems as though some people figure out how to get eyeglasses for their children in other ways. On the other hand, with an IRB hat on, I’d rather see a control where they were provided something else worth $15, or $15 in cash, or maybe a menu of options. Or you could offer all the controls the eyeglasses at the end of the trial. I’m always extremely suspicious of claims that something (in this case not eyeglasses but specifically a program that offers free eyeglasses) is self-evidently beneficial. If 1/3 of people turn the program down, maybe it’s not so great; I don’t know.

Lots of past research on social interventions shows that things that seem to be Self Evident Good Things That Could Not Possibly Go Wrong (TM) turn out not necessarily to be so. The whole problem of what happens on the ground when social programs evaluated under the RCT model are moved to a second setting or scaled up would seem to me possibly more important, both ethically and financially, when it comes to criticizing that model. Real-world implementations almost never have the attention to detail and careful supervision to ensure compliance with protocols that the RCTs have.

Agreed. The problems with free bednets should be enough evidence that we can’t just throw things at people in developing countries and expect that to be effective, even if it’s just obvious to a professor in the middle of the U.S.

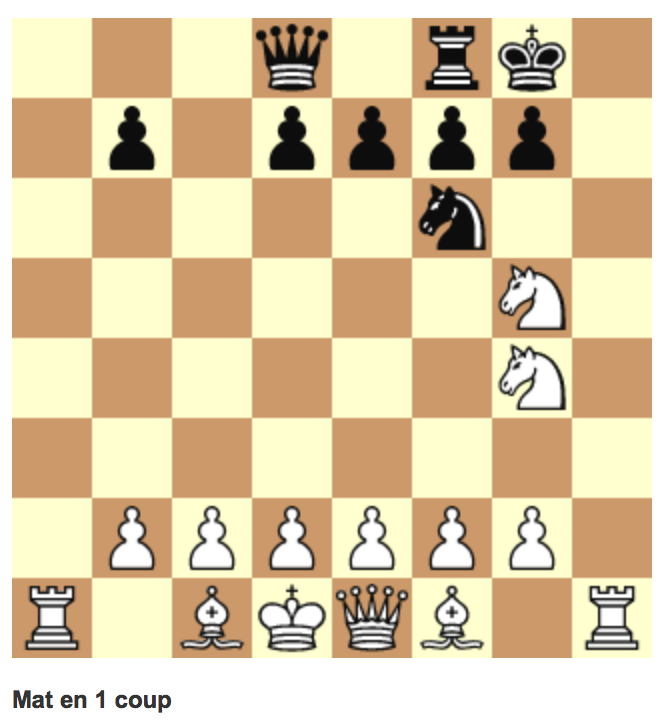

… can only be mate in 1 move if the board is turned around, so that all the pawns are on the 7th rank rather than the 2nd. At least that’s my take. Then Nxf6 (which is ‘really’ Nxc3) is checkmate. No doubt something about lateral thinking??
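The relabeling behind “Nxf6 is ‘really’ Nxc3” is just a 180-degree rotation of the board: the files a to h reverse and rank r becomes 9 - r. A tiny sketch (function name hypothetical) checks the coordinate mapping only; it says nothing about whether the move is actually mate.

```python
def rotate_180(square):
    """Map a square such as "f6" to its label on a board turned 180 degrees."""
    file, rank = square[0], int(square[1])
    new_file = chr(ord("a") + ord("h") - ord(file))  # a<->h, b<->g, c<->f, d<->e
    new_rank = 9 - rank                              # 1<->8, 2<->7, 3<->6, 4<->5
    return f"{new_file}{new_rank}"


print(rotate_180("f6"))  # c3
```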

Aha! So that’s it! That’s been bugging me since yesterday.

Did you check that there have been just the right number of pawn captures to get all the pawns to the seventh rank in that configuration? (I’m sure Smullyan did.)

Look at the positions of the white king and queen, and all will be clear.

Thanks — this made my day!

Thanks for the good post, Andy. O’Rourke and others assert that Gosset aka Student lost out to Fisher. Not so, as I have shown in a number of articles and book chapters. See for example my article in the Review of Behavioral Economics I (1, Jan. 2014): http://www.nowpublishers.com/articles/review-of-behavioral-economics/RBE-0008

In truth, Egon Pearson, Jerzy Neyman, E.S. Beaven, and Harold Jeffreys were persuaded by Gosset’s balanced designs over Fisher’s randomized ones, and started saying so as early as 1935. So too was the Guinness Board of Directors, to their (and our beer-drinking) profit. Today’s randomization school has not considered these telling facts. And other commentators might wish to reconsider: over at the World Bank, Friedman agreed with Ziliak and Teather-Posadas that repeated, balanced, stratified experiments, designed and interpreted with an economic approach (such as loss functions), dominate unrepeated, completely randomized, and statistically significant experiments, which are today’s mistaken (and costly) fashion.

Steve

O’Rourke cites Peirce on randomization. But Peirce, like Fisher years after him, did not do experiments with an economic motive. Gosset did, and that makes all the difference. See, for example, Gosset’s crushing refutation of Fisher (Student (1938), Biometrika), and Egon Pearson’s economic, logical, and statistical power-function confirmations of Gosset’s balanced designs (Pearson (1938), Biometrika).

Regarding comments made on the Chinese Eyeglass Experiment, there is unthinking emotion and unscientific defensiveness instead of economics and logic and facts and ethics, the points I raise in my paper with Teather-Posadas.

Steve

Indeed, lack of economic motive:

Peirce’s application to the Carnegie Institution:

Consequently, to discover is simply to expedite an event that would occur sooner or later, if we had not troubled ourselves to make the discovery. Consequently, the art of discovery is purely a question of economics. The economics of research is, so far as logic is concerned, the leading doctrine with reference to the art of discovery.

You may (or may not) wish to read Stephen Senn’s take on the Gosset/Fisher debate.

The Ziliak and Teather-Posadas paper is stretching things a bit in claiming that “a common defect of statistical studies and especially of randomized controlled trials” is Simpson’s paradox. Why is this an especial fault of RCTs? Moreover, their hypothetical example is completely rigged: they point out that Simpson’s paradox would arise if 3 times as many men as women ended up in the treatment group (and vice versa for the control group) and if men had a much different baseline level of performance than women.
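To see how that rigged example produces a reversal, here is a minimal sketch with made-up numbers (not taken from the paper): men have a baseline outcome of 10, women 2, the treatment lowers the outcome by 1 for both sexes, and men outnumber women 3 to 1 in the treatment group while the reverse holds in control.

```python
def group_mean(cells):
    """cells: list of (count, mean outcome) pairs; return the pooled mean."""
    n = sum(count for count, _ in cells)
    return sum(count * m for count, m in cells) / n


# Hypothetical numbers: baseline 10 for men, 2 for women; treatment effect -1 for both.
treatment = [(75, 10 - 1), (25, 2 - 1)]  # (men), (women): 3x as many men
control = [(25, 10), (75, 2)]            # (men), (women): 3x as many women

within_men = treatment[0][1] - control[0][1]
within_women = treatment[1][1] - control[1][1]
pooled = group_mean(treatment) - group_mean(control)
print(within_men, within_women, pooled)  # -1 -1 3.0
```

Within each sex the treatment looks harmful, yet the naive pooled comparison shows +3, purely because the sex imbalance interacts with the baseline gap. Comparing within sexes, as stratification does, removes the reversal.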

Well, yes indeed. But a 3x gender imbalance in each group is astronomically unlikely. This isn’t an argument against randomization, it’s at most an argument for stratification before randomization.
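How unlikely such an imbalance is depends on the trial size. The sketch below computes the exact probability, under complete randomization (a uniformly random treatment group of fixed size), that the men-to-women ratio in the treatment group is at least 3:1 or at most 1:3. The function name and the sample sizes in the usage lines are hypothetical.

```python
from math import comb


def prob_ratio_imbalance(n_men, n_women, n_treat, ratio=3.0):
    """Exact probability that the treatment group's men:women ratio is
    >= ratio or <= 1/ratio when n_treat units are drawn uniformly at random
    (the number of men in treatment is hypergeometric)."""
    favorable = 0
    for m in range(max(0, n_treat - n_women), min(n_men, n_treat) + 1):
        w = n_treat - m
        # Cross-multiplied comparisons avoid dividing by zero when m or w is 0.
        if m >= ratio * w or w >= ratio * m:
            favorable += comb(n_men, m) * comb(n_women, w)
    return favorable / comb(n_men + n_women, n_treat)


# Hypothetical trials: 2 men + 2 women, and 50 men + 50 women, half to treatment.
print(prob_ratio_imbalance(2, 2, 2))  # 0.3333333333333333
print(prob_ratio_imbalance(50, 50, 50))
```

In a tiny trial a lopsided split is common, but with 100 participants the probability is far below one in a hundred thousand, which is the sense in which such an imbalance is astronomically unlikely.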

Stuart:

If you are not already aware of it, Senn’s “Seven myths of randomisation in clinical trials” is a nice read on the point you raised.

http://onlinelibrary.wiley.com/doi/10.1002/sim.5713/abstract


Thanks, O’Rourke, for the reply. I debated Stephen Senn in the pages of The Lancet, in October 2010, on the Gosset-Fisher relationship. Senn replied to my July 2010 Lancet essay, “The Validus Medicus and a New Gold Standard”.

Steve