Completely uncritical press coverage of a speculative analysis.

But, hey, it was published in the prestigious Proceedings of the National Academy of Sciences (PPNAS)! What could possibly go wrong?

Here’s what Erik Larsen writes:

In a paper published in the Proceedings of the National Academy of Sciences, People search for meaning when they approach a new decade in chronological age, Adam L. Alter and Hal E. Hershfield conclude that “adults undertake a search for existential meaning when they approach a new decade in age (e.g., at ages 29, 39, 49, etc.) or imagine entering a new epoch, which leads them to behave in ways that suggest an ongoing or failed search for meaning (e.g., by exercising more vigorously, seeking extramarital affairs, or choosing to end their lives)”. Across six studies the authors find significant effects of being a so-called 9-ender on a variety of measures related to meaning searching activities.

Larsen links to news articles in the New Republic, Huffington Post, Salon, Pacific Standard, ABC News, and the British Psychological Society, all of which are entirely uncritical reports, and continues:

I [Larsen] show that each of the six studies in the paper consist of at least one crucial deficiency hindering meaningful inferences. In several of the studies the results stems from the fact that the end digits are not comparable as 9, for example, is more likely to be found among younger age decades as the age range in all the studies is from 25 to 65. In other words, if people are more likely to engage in an activity at a younger age compared to later in life, higher end digits are more likely to measure such differences compared to lower end digits. When controlling properly for age the differences reported in some of the studies fails to reach statistical significance. In other studies, results were questionable due to empirical shortcomings.
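To make the end-digit point concrete, here is a minimal sketch in Python. It is not Larsen's analysis or his R code. It assumes exactly one person at each age from 25 to 64 and a made-up outcome, `declining_with_age`, that falls with age and has no 9-ender effect at all. Both are illustrative assumptions; real samples have uneven age distributions, which can make the imbalance larger or smaller.

```python
from statistics import mean

AGES = range(25, 65)  # ages 25..64; one hypothetical person per age


def mean_age_by_digit(ages):
    """Average age of the people whose age ends in each digit."""
    return {d: mean(a for a in ages if a % 10 == d) for d in range(10)}


def naive_nine_ender_gap(ages, outcome):
    """Mean outcome of 9-enders minus mean outcome of everyone else."""
    nine = mean(outcome(a) for a in ages if a % 10 == 9)
    rest = mean(outcome(a) for a in ages if a % 10 != 9)
    return nine - rest


def declining_with_age(age):
    # Hypothetical outcome that depends only on age: no 9-ender effect built in.
    return 100 - age


by_digit = mean_age_by_digit(AGES)
gap = naive_nine_ender_gap(AGES, declining_with_age)

print(by_digit[9], by_digit[4])  # 44 49
print(round(gap, 3))             # 0.556
```

In this toy setup the 9-enders (29, 39, 49, 59) average 44 years old while the 4-enders (34, 44, 54, 64) average 49, so an outcome that simply declines with age shows a positive "9-ender gap" even though none was built in. That is the sense in which the end digits are not comparable unless age is accounted for.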

Larsen conveniently made the data accessible via R code in this Github repository.

You can follow the link for all of Larsen’s comments but here are a few:

In Study 1, the authors use data from the World Values Survey. They conclude that “9-enders reported questioning the meaning or purpose of life more than respondents whose ages ended in any other digit”. However, if one take age decades into consideration, the 9-ender effects fails to reach statistical significance (p=0.71) despite the sample size of 42.063.

In the replication material, some respondents from the World Values Survey are excluded. In the full data set (obtained at http://www.worldvaluessurvey.org/WVSDocumentationWV6.jsp), there are 56,145 respondents in the age range 25-64. The difference between 9-enders and the other respondents on the Meaning variable is 0.001 and is non-significant . . .

In Study 2, age decades are less relevant due to the experimental design. Here the authors find significant effects of the experimental stimuli, and the effects seem robust. However, no randomization tests are reported. One sees that the experimental group differ systematically from both the baseline and control group on pre-randomization measured variables. People in the experimental group are, for example, on average over 6 years younger than the baseline group (p<0.001) and have a higher distance to a new decade than the control group (p=0.02), questioning the possibility to draw design-based inferences. . . .

In Study 3, the authors argue that 9-enders are more likely to seek extramarital affairs. What did the authors do here? They "categorized 8,077,820 male users, aged between 25 and 64 years, according to the final digit of their ages. There were 952,176 9-enders registered on the site". Before looking at the data, one can ask two questions. First, whether those aged 30 are more likely to report the age of 29 and so on. The authors argue that this is not the case, but I am not convinced. Second, and something the authors doesn't discuss, whether age is a good proxy for the age users had when they signed up at the specific site (unless everybody signed up before in the months before November 2013). . . .

In Study 4, the authors look at suicide victims across the United States between 2000 and 2011 and show that 9-enders are more likely to commit suicide. Interestingly, the zero-order correlation between suicide rate and 9-enders is non-significant. In the model conducted by the authors (which shows a significant result), when controlling properly for age, the effect of 9-enders fails to reach statistical significance (p=0.13).

In Study 5, the authors show that runners who completed marathons faster at age 29 or 39. However, simple tests show that there is no statistical evidence that people run faster when they are 29 compared to when they are 30 (p=0.2549) or 31 (p= 0.8908) and the same is the case for when they are 39 compared to 40 (p=0.9285) and 41 (p=0.5254). Hence, there is no robust evidence that it is the 9-ender effect which drives the results reported by the authors. . . .

To relay these comments is not to say there is nothing going on: people are aware of their ages and it is reasonable to suppose that they might behave differently based on this knowledge. But I think that, as scientists, we’d be better off reporting what we actually see rather than trying to cram everything into a single catchy storyline.

Of all the news articles, I think the best was this one, by Melissa Dahl. The article was not perfect—Dahl, like everybody else who reported on this article in the news media, was entirely unskeptical—but she made the good call of including a bunch of graphs, which show visually how small any effects are here. The only things that jump out at all are the increased number of people on the cheating website who say they’re 44, 48, or 49—but, as Larsen says, this could easily be people lying about their age (it would seem like almost a requirement, that if you’re posting on a cheaters site, that you round down your age by a few years)—and the high number of first-time marathoners at 29, 32, 35, 42, and 49. Here’s Dahl’s summary:

These findings suggest that in the year before entering a new decade of life, we’re “particularly preoccupied with aging and meaningfulness, which is linked to a rise in behaviors that suggest a search for or crisis of meaning,” as Hershfield and Alter write.

Which just seems ridiculous to me. From cheaters lying about their ages and people more likely to do their first marathon at ages 29, 32, 35, 42, and 49, we get “particularly preoccupied with aging and meaningfulness”??? Sorry, no.

But full credit for displaying the graphs. Again, I’m not trying to single out Dahl here; indeed, she did better than all the others by including the graphs. It’s just too bad she didn’t take the next step of noticing how little was going on.

One problem, I assume, is the prestige of the PPNAS, which could distract a reporter from his or her more skeptical instincts.

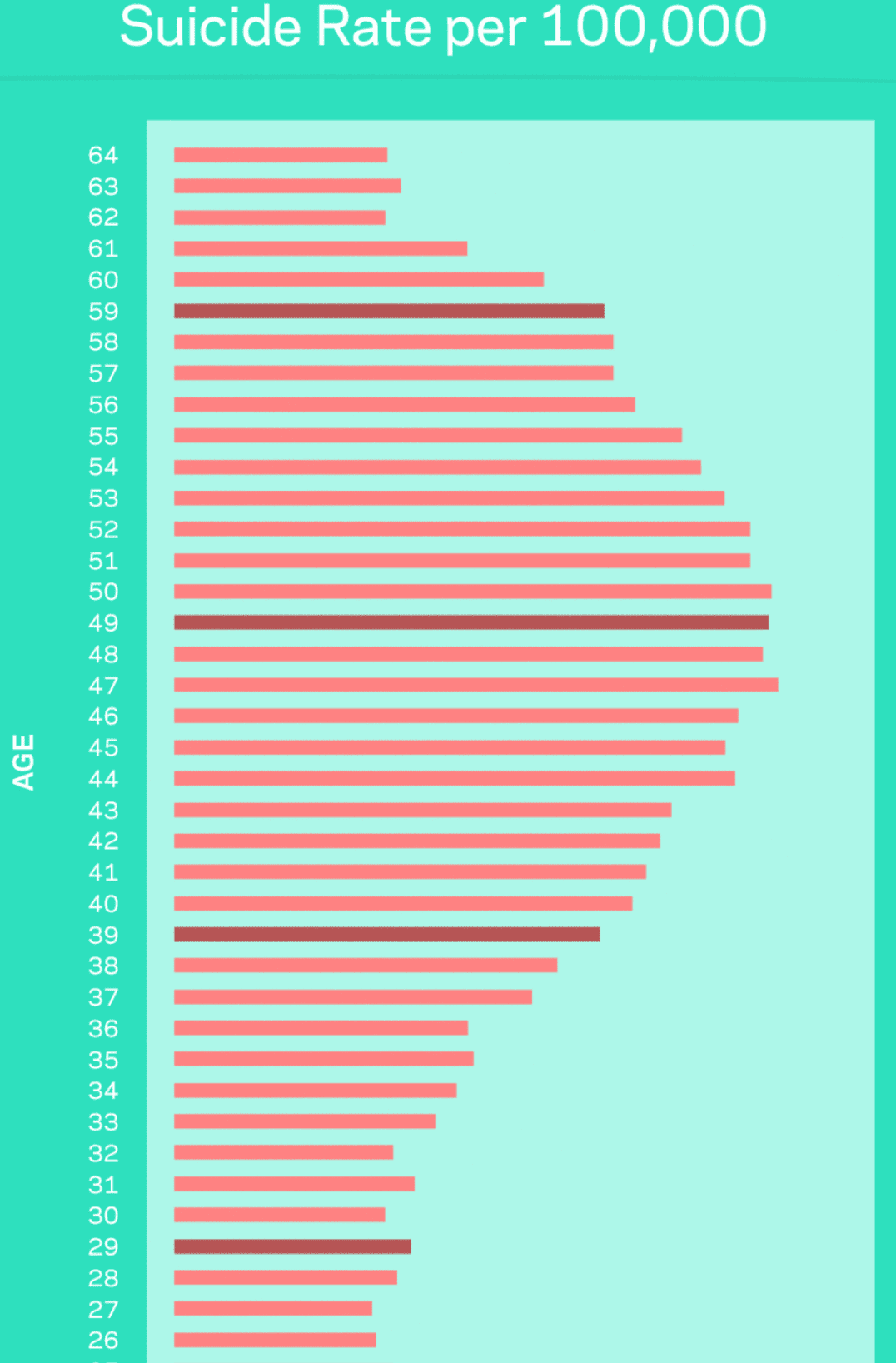

Here, by the way, is that graph of suicide rates that appeared in Dahl’s news article:

According to the graph, more people actually commit suicide at 40 than at 39, and more do it at 50 than at 49, while more people kill themselves at 58 than 59. So, no, don’t call the suicide hotline just yet.

P.S. To get an image for this post, I started by googling “hype cycle” but all I got was this sort of thing:

All these are images of unidirectional curves; none are cycles! Then I went on Wikipedia and found that “hype cycle” is “a branded graphical tool.” How tacky. If you’re going to call something a cycle, you gotta make it return to its starting point!

The hype cycle

The “cycle” of which I speak goes something like this:

1. Researcher is deciding what to work on.

2. He or she hears about some flashy paper that was published in PNAS under the auspices of Susan Fiske, maybe something like himmicanes and hurricanes. The researcher, seeing this, sees that you can get fame and science chits by running a catchy psych experiment or statistical analysis and giving it a broad interpretation with big social implications.

3. The analysis is done, it’s submitted to PNAS.

4. PNAS sends it to Susan Fiske, who approves it.

5. The paper appears and gets huge exposure and uncritical media attention, both in general and (I’m sorry to say) within the field of psychology.

Return to 1: Another researcher is deciding what to work on. . . .

That’s the hype cycle: The press attention and prestige publication motivates more work of this kind.

P.S. Let me emphasize: I’m not opposed to this sort of work. I think the age analysis is clever (and I mean that in a good way), and I think it’s great that this sort of thing is being done. But, please, can we chill on the interpretations? And, journalists (both in general and within our scientific societies), please report this with a bit of skepticism? I’m not saying you need to quote an “opponent” of the study, just don’t immediately jump to the idea that the claims are generally valid, just because they happened to appear in a top journal.

Remember, PNAS published the notorious “himmicanes and hurricanes” study.

Remember, the Lancet published the notorious Iraq survey.

Remember, Psychological Science published . . . ummm, I think you know where I’m going here.

Reporting with skepticism does not require “debunking.” It’s just a matter of being open-minded about the possibility that claimed results do not really generalize to the larger populations or questions of interest. In the above case, it would imply questioning a claim such as, “We know that the end of a perceived era prompts us to make big life decisions,” which is hardly implied by some data that a bunch of 42-year-olds and 49-year-olds are running marathons.

Unfortunately, I think journalists raising their default skepticism level toward apparently data-based findings would result in some negative side effects. First, false equivalence (e.g. quotes from global warming deniers for balance) would become more common because journalists would be less inclined to regard anything as close to settled fact. Second, opinions of blowhard pundits of the David Brooks variety will get wider distribution even when they conflict with political science or economics. Journalists probably feel like they’re getting conflicting messages: 1) Ignore pundits, trust Nate Silver and data and science and 2) Don’t believe the data hype. (1) and (2) are both good advice but I can imagine that it’s hard for a journalist to know when to apply which.

Z:

Good points. I guess we could start with rules like, “Don’t trust things published in PPNAS or Psychological Science,” but that would create other problems. So, yeah, I don’t really know what to do.

I first started getting interested in this problem of uncritical reporting of speculative statistical claims after following up on that beauty-and-sex-ratio paper, several years ago. That was a paper that was uncritically promoted on the Freakonomics blog. At one point I gave a talk at the National Association of Science Writers conference and I described the situation as pretty scary. On one hand, the paper in question was pretty much as bad as anything you’ll ever see: it was entirely drawing conclusions from noise. On the other hand, it appeared in Freakonomics, and, as I told the science writers, “If Steve Levitt can get fooled by this stuff, what chance do you have of seeing through it?” It’s easy to say that science writers should just think harder about the reasonableness of things being claimed, but I take your point that an empty skepticism wouldn’t be much better (indeed, it could be worse) than an empty credulity.

Very interesting post.

My training (in England – which may be relevant – we’re pretty cynical to begin with) was always to be extremely skeptical of anything and everything and to really need an accumulation of evidence before I would start to change my mind. This way of thinking can be hazardous but I feel that it is a better default position than to assume that just because a paper has passed a peer-review that it is accurate. I feel like there has been a trend over the last decade for there to be less and less skepticism among researchers when reading other people’s work (never mind journalists). The reasons for this are multitudinous; your main point regarding ‘hype’ and people studying ‘catchy/sexy’ topics is one, the other major one being the need to be continually publishing and the shortcuts in quality that that engenders. A danger of this is that many people are following research lines whose foundations are essentially made out of flimsy sandcastles.

A simple rule of thumb like “a single study is very unlikely to be conclusive” would weed out most of the junk while not encouraging journalists to reject the foundations of science.

“it’s hard for a journalist to know when to apply which”

It really isn’t hard.

1) Was there a *precise* (not “some correlation” or “one average is higher than the other”) prediction made beforehand that is consistent with the data?

2) Does this data seem to rule out some commonly held belief?

In 99% of cases the answers to both will be no. Therefore the apparent effect/relationship may be notable on its own but all explanations are speculative. Essentially we have preliminary data.

There may be non-statistical cues which could alert the science journalists. For example, is the argument as a whole coherent and free of circular logic? Do the authors address alternative explanations for the data? Was a sampling decision taken for a dubious reason, such as initially focusing only on male subjects wrt cheating because it is generally accepted that adultery (and btw purchase of red sports cars) as a response to a mid-life crisis is more prevalent among males?

Robert:

It’s tough, though. The PPNAS paper discussed in my post is not so bad in some ways: yes, the data analyses are flawed, but nothing so bad as in the himmicanes paper. And in this case they were dealing with entire populations so sampling wasn’t such an issue. The problem was more in the over-interpretation.

Fair enough.

I guess I was trying to make the case that questioning whether clusters of suicides or marathons (whether they actually exist or not) is good evidence that people are more introspective at those ages doesn’t necessarily require formal statistical training and therefore a journalist is not barred from posing that question due to education – even though they might be (or at least feel) unable to question the initial data analysis.

Hershfield observed in the ABC write-up that the differences are not explainable by actual life changes, such as marriage (“it’s not like you got married”) – but here in Australia 29 is the median age for males to marry (28 in the US according to Google). Not in itself evidence of anything, but plausibly enough to plant the seeds for discussion of alternative interpretations.

I assume the first thing many journalists see is the over-the-top press release from the journal or the university. I bet they do not urge appropriate skepticism.

Ding ding. The thing-in-the-middle-that-spins of the hype machine is university PR.

+1. And yet I so rarely come across Professors who criticize their own university’s PR team. At least with the same vigor & rancor they use when criticizing other parties.

Rahul:

A few years ago I was really mad at my university’s PR team. But I was mad at them for . . . not promoting my work enough!

My old university had me read the PR report before it went out, and I toned a couple of these down. I also got one from Psych Sci, but that one was not OTT (it was on police identification procedures, so not as sexy as a paper on being 49 … which I am today so was glad reading that I am not necessarily doomed this year).

Hey, what do you know. http://www.bmj.com/content/349/bmj.g7015

Once upon a time scientists thought it was unprofessional bad taste to talk to the press. Nowadays it is encouraged.

To parody the attitude at some universities:

“I was the first to sequence DNA”, “Who cares”

“I authored a study on how manatees always face magnetic north when urinating. It was featured prominently in the NYT!” “Whoa! You totally rock!”

Andrew,

It might be worth sending your post to some of the journalists to see what they say. I suspect that no one will hold them to account for passing on claims that survived peer review in a top journal (or maybe not a top journal even) that are later discredited.

On the other hand, they may put their butts on the line if they question something put out by a top journal. Many people may even perceive doing this as being unscientific. There must be some reward for being an early questioner of something that is later discredited but maybe these rewards are insufficient to stimulate skepticism in the first place.

Can anyone come up with examples of journalists who are appropriately skeptical from the get go and later seem to reap rewards for having taken this posture?

Mike:

There are some science or business journalists who are (a) known for being skeptical about hype and (b) have achieved some recognition. Examples include Sharon Begley and Felix Salmon.

Not one of the media outlets Erik Gahner mentions that covered the story is what I would regard as a reliable source of information. I imagine that most good journalists just ignored it. (And back to Ed Yong’s ‘Every scientists-versus-journalists debate ever, in one diagram’).

Richard:

Larsen links to the New Republic, Washington Post, Salon, Pacific Standard, ABC News, and the British Psychological Society. OK, sure, the New Republic is notorious for its contrarianism, and I guess Salon will publish pretty much anything. Pacific Standard, I don’t know about, and the BPS has an incentive to publish uncritical reports of psychology studies. But the Washington Post and ABC are reputable news sources, no?

Where’s the WaPo link? Yeah, they should be more reputable. I think it says HuffPo, not WaPo though? I give you the ABC, probably. (I never read it – but I am in the UK).

Oh, you’re right, I mistyped it. It was HuffPo, not WashPo. I take it all back.

Pretty embarrassing, especially considering that I write for the Washington Post myself!

Kudos to Dahl for showing the graphs. Note that the comments on her post are mostly showing at least some skepticism.

Similar issues (uncritical use of data, sweeping conclusions) were present in another paper by Hershfield, which was published in… Psychological Science (see http://pss.sagepub.com/content/25/1/152.long).

Some of these issues are discussed in a Commentary published in Frontiers: http://journal.frontiersin.org/Journal/10.3389/fpsyg.2014.01110/full

The original article got coverage in the Huffington Post (http://www.huffingtonpost.com/hal-e-hershfield/to-change-environmental-behavior_b_3845707.html), on NPR (http://www.npr.org/2013/11/26/247297844/why-countries-invest-differently-in-environmental-issues), and on Science Daily (http://www.sciencedaily.com/releases/2013/12/131202082648.htm).

“Overall, the researchers’ findings can be explained by Gott’s principle, a physics principle which holds that the best estimate of a given entity’s remaining duration is simply the length of time that it has already been in existence.” Oh my.

Elin:

It’s funny how the fallacies we’ve discussed on this blog keep reappearing in other places. I half-expect that paper to mention the “generalized Trivers-Willard hypothesis.” But, I would say of this paper (on ages of countries) what I would say of the other paper (on ages of people): it’s an interesting idea and I’m glad they did their analyses and wrote their paper, I just wish their findings could be presented without the hype and without the attitude of certainty.

I completely agree about the hype problem, and we should all be grateful that you’ve taken your time to blog about this. Scientific publications will always need some form of marketing (e.g. “Why is this interesting?”). The country age idea is interesting and well-explained. However, there is a discrepancy between the marketing and the care the authors have taken to produce reliable results. They use GDP rather than GDP per capita when controlling for economic development. In the mediation analysis, they forgot to include the control variables mentioned in the paper. Most worryingly though, the 2 subscales measuring environmental performance (the DV) correlate negatively. Countries performing well on the first subscale are likely to perform poorly on the second subscale, and vice versa. Not everyone likes statistics, but these are issues that could have been picked up. So, I think the problem is the discrepancy between the quality of the methods/analyses on the one hand, and the hype/marketing on the other hand.

Anyway, thanks for bringing this up.

It’s meaningless – insignificant – dreck like this that makes me happier to be a 39-year-old physicist and not, say, anyone involved with psychology studies.

“I think the age analysis is clever (and I mean that in a good way)” – but you can’t separate the people who thought of doing the analysis, and performed it, from the people who misreported its findings as being of any use or interest – given that they don’t actually see a clear and meaningful effect – and then set out to sell their inflated non-findings to the media.

I.e. it may be clever in a good way, but it’s a lot more clever in a bad way.

The problem is that, in the absence of controversy or noteworthy opposition to a study coming from some third party, journalist skepticism will simply manifest as non-coverage. Nobody’s going to pick up a university press release and cover the study within the frame of “but this might not be true,” for a variety of reasons that include journalistic norms of what constitutes neutrality, and lack of expertise. It’s the news cycle version of the file drawer problem.


It would be interesting to study the ages of fictional characters. The modal age of movie heroes, for example, appears to be 35.


Perhaps you’ve seen this, but, another kind of cycle:

http://www.phdcomics.com/comics/archive.php?comicid=1174

Anon:

Yes, but it’s even worse than that, in that the original correlation that gets everything started could well be a correlation in a sample that does not reflect a correlation in the underlying population. Remember: correlation does not even imply correlation.
